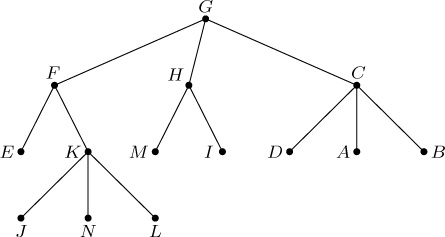

1. ${\tt INFO(T)}=A$, ${\tt INFO(RLINK(T))}=C$, etc.; the answer is H.

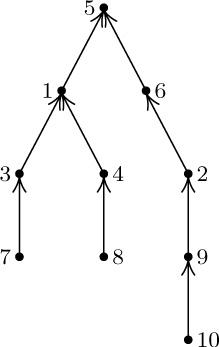

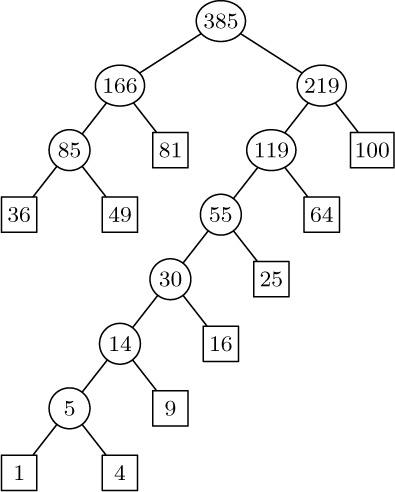

2. Preorder: 1245367; symmetric order: 4251637; postorder: 4526731.

3. The statement is true; notice, for example, that nodes 4, 5, 6, 7 always appear in this order in exercise 2. The result is immediately proved by induction on the size of the binary tree.

4. It is the reverse of postorder. (This is easily proved by induction.)

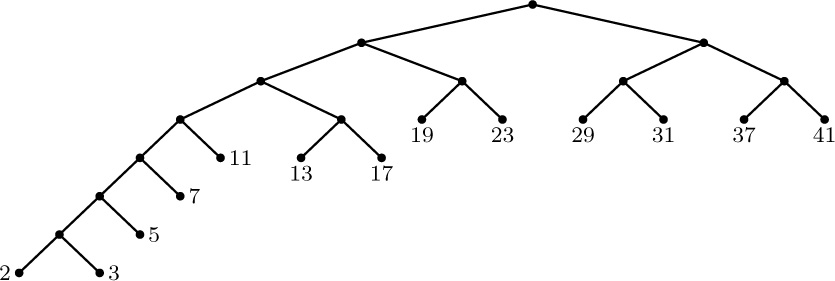

5. In the tree of exercise 2, for example, preorder is 1, 10, 100, 101, 11, 110, 111, using binary notation (which is in this case equivalent to the Dewey system). The strings of digits have been sorted, like words in a dictionary.

In general, the nodes will be listed in preorder if they are sorted lexicographically from left to right, with “blank” < 0 < 1. The nodes will be listed in postorder if they are sorted lexicographically with 0 < 1 < “blank”. For inorder, use 0 < “blank” < 1.

(Moreover, if we imagine the blanks at the left and treat the Dewey labels as ordinary binary numbers, we get level order; see 2.3.3–(8).)

6. The fact that $p_{1}p_{2}\ldots p_{n}$ is obtainable with a stack is readily proved by induction on n, or in fact we may observe that Algorithm T does precisely what is required in its stack actions. (The corresponding sequence of S’s and X’s, as in exercise 2.2.1–3, is the same as the sequence of 1s and 2s as subscripts in double order; see exercise 18.)

Conversely, if $p_{1}p_{2}\ldots p_{n}$ is obtainable with a stack and if $p_{k}=1$, then $p_{1}\ldots p_{k-1}$ is a permutation of $\{2,\ldots,k\}$ and $p_{k+1}\ldots p_{n}$ is a permutation of $\{k+1,\ldots,n\}$; these are the permutations corresponding to the left and right subtrees, and both are obtainable with a stack. The proof now proceeds by induction.

7. From the preorder, the root is known; then from the inorder, we know the left subtree and the right subtree; and in fact we know the preorder and inorder of the nodes in the latter subtrees. Hence the tree is readily constructed (and indeed it is quite amusing to construct a simple algorithm that links the tree together in the normal fashion, starting with the nodes linked together in preorder in LLINK and in inorder in RLINK). Similarly, postorder and inorder together characterize the structure. But preorder and postorder do not; there are two binary trees having $AB$ as preorder and $BA$ as postorder. If all nonterminal nodes of a binary tree have both branches nonempty, its structure is characterized by preorder and postorder.

8. (a) Binary trees with all $\tt LLINK\rm s$ null. (b) Binary trees with zero or one nodes. (c) Binary trees with all $\tt RLINK\rm s$ null.

9. T1 once, T2 $2n{+}1$ times, T3 n times, T4 $n{+}1$ times, T5 n times. These counts can be derived by induction or by Kirchhoff’s law, or by examining Program T.

10. A binary tree with all $\tt RLINK\rm s$ null will cause all n node addresses to be put in the stack before any are removed.

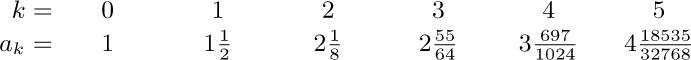

11. Let $a_{nk}$ be the number of binary trees with n nodes for which the stack in Algorithm T never contains more than k items. If $g_{k}(z)=∑_{n}a_{nk}z^{n}$, we find $g_{1}(z)=1/(1-z)$, $g_{2}(z)=1/(1-z/(1-z))=$ $(1-z)/(1-2z),\ldots,g_{k}(z)=$ $1/(1-zg_{k-1}(z))=q_{k-1}(z)/q_{k}(z)$ where $q_{-1}(z)=q_{0}(z)=1$, $q_{k+1}(z)=q_{k}(z)-zq_{k-1}(z)$; hence $g_{k}(z)=(f_{1}(z)^{k+1}-f_{2}(z)^{k+1})/(f_{1}(z)^{k+2}-f_{2}(z)^{k+2})$ where $f_{j}(z)=\frac{1}{2}(1\pm\sqrt{1-4z})$. It can now be shown that $a_{nk}=[u^{n}](1-u)(1+u)^{2n}(1-u^{k+1})/(1-u^{k+2})$; hence $s_{n}=∑_{k\ge1}k(a_{nk}-a_{n(k-1)})$ is $[u^{n+1}](1-u)^{2}(1+u)^{2n}∑_{j\ge1}u^{j}/(1-u^{j})$, minus $a_{nn}$. The technique of exercise 5.2.2–52 now yields the asymptotic series

$s_{n}/a_{nn}=\sqrt{\pi n}-\frac{3}{2}-\frac{13}{24}\sqrt{\frac{\pi}{n}}+\frac{1}{2n}+O(n^{-3/2})$

[N. G. de Bruijn, D. E. Knuth, and S. O. Rice, in Graph Theory and Computing, ed. by R. C. Read (New York: Academic Press, 1972), 15–22.]

When the binary tree represents a forest as described in Section 2.3.2, the quantity analyzed here is the height of that forest (the furthest distance between a node and a root, plus one). Generalizations to many other varieties of trees have been obtained by Flajolet and Odlyzko [j. Computer and System Sci. 25 (1982), 171–213]; the asymptotic distribution of heights, both near the mean and far away, was subsequently analyzed by Flajolet, Gao, Odlyzko, and Richmond [Combinatorics, Probability, and Computing 2 (1993), 145–156].

12. Visit $\tt NODE(P)$ between steps T2 and T3, instead of in step T5. For the proof, demonstrate the validity of the statement “Starting at step T2 with $\ldots$ original value ${\tt A[}1{\tt]\ldots A[}m\tt]$,” essentially as in the text.

13. (Solution by S. Araújo, 1976.) Let steps T1 through T4 be unchanged, except that a new variable Q is initialized to Λ in step T1′; Q will point to the last node visited, if any. Step T5 becomes two steps:

T5′. [Right branch done?] If ${\tt RLINK(P)}=\varLambda$ or ${\tt RLINK(P)}=\tt Q$, go on to T6; otherwise set $\tt A\Leftarrow P$, $\tt P←RLINK(P)$ and return to T2′.

T6′. [Visit P.] Visit $\tt NODE(P)$, set $\tt Q←P$, and return to T4′.

A similar proof applies. (Steps T4′ and T5′ can be streamlined so that nodes are not taken off the stack and immediately reinserted.)

14. By induction, there are always exactly n + 1 Λ links (counting T when it is null). There are n nonnull links, counting T, so the remark in the text about the majority of null links is justified.

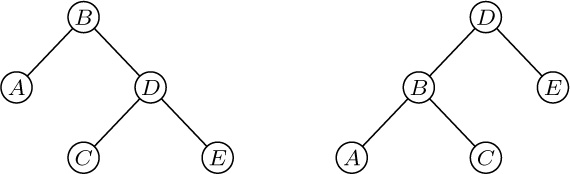

15. There is a thread LLINK or RLINK pointing to a node if and only if it has a nonempty right or left subtree, respectively. (See Fig. 24.)

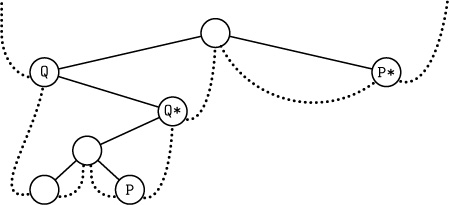

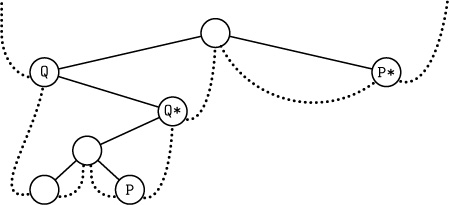

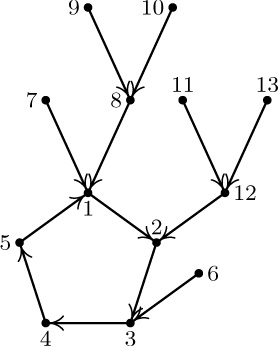

16. If ${\tt LTAG(Q)}=0$, Q* is $\tt LLINK(Q)$; thus Q* is one step down and to the left. Otherwise Q* is obtained by going upwards in the tree (if necessary) repeatedly until the first time it is possible to go down to the right without retracing steps; typical examples are the trips from P to P* and from Q to Q* in the following tree:

17. If ${\tt LTAG(P)}=0$, set $\tt Q←LLINK(P)$ and terminate. Otherwise set $\tt Q←P$, then set $\tt Q←RLINK(Q)$ zero or more times until finding ${\tt RTAG(Q)}=0$; finally set $\tt Q←RLINK(Q)$ once more.

18. Modify Algorithm T by inserting a step T2.5, “Visit $\tt NODE(P)$ the first time”; in step T5, we are visiting $\tt NODE(P)$ the second time.

Given a threaded tree the traversal is extremely simple:

$({\tt P},1)^\varDelta=({\tt LLINK(P)},1)$ if ${\tt LTAG(P)}=0$, otherwise $({\tt P},2)$;

$({\tt P},2)^\varDelta=({\tt RLINK(P)},1)$ if ${\tt RTAG(P)}=0$, otherwise $({\tt RLINK(P)},2)$.

In each case, we move at most one step in the tree; in practice, therefore, double order and the values of d and e are embedded in a program and not explicitly mentioned.

Suppressing all the first visits gives us precisely Algorithms T and S; suppressing all the second visits gives us the solutions to exercises 12 and 17.

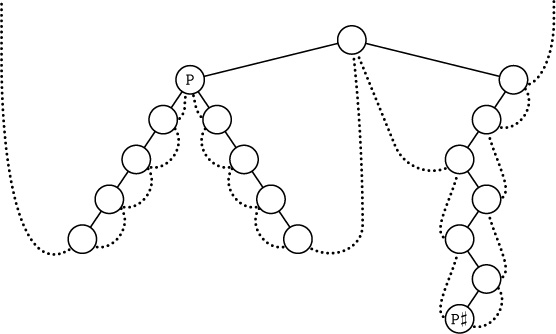

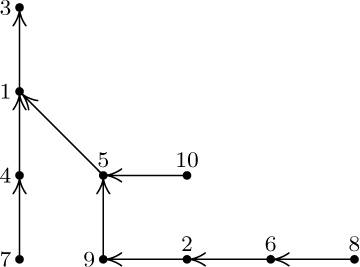

19. The basic idea is to start by finding the parent Q of P. Then if $\tt P\neq LLINK(Q)$ we have ${\tt P\sharp}=\tt Q$; otherwise we can find $\tt P\sharp$ by repeatedly setting $\tt Q←Q\$$ zero or more times until ${\tt RTAG(Q)}=1$. (See, for example, P and $\tt P\sharp$ in the tree shown.)

There is no efficient algorithm to find the parent of P in a general right-threaded tree, since a degenerate right-threaded tree in which all left links are null is essentially a circular list in which the links go the wrong way. Therefore we cannot traverse a right-threaded tree in postorder with the same efficiency as the stack method of exercise 13, if we keep no history of how we have reached the current node P.

But if the tree is threaded in both directions, we can find $\tt P\rm\unicode{39}s$ parent efficiently:

F1. Set $\tt Q←P$ and $\tt R←P$.

F2. If ${\tt LTAG(Q)}={\tt RTAG(R)}=0$, set $\tt Q←LLINK(Q)$ and $\tt R←RLINK(R)$ and repeat this step. Otherwise go to F4 if ${\tt RTAG(R)}=1$.

F3. Set $\tt Q←LLINK(Q)$, and terminate if $\tt P=RLINK(Q)$. Otherwise set $\tt R←RLINK(R)$ zero or more times until ${\tt RTAG(R)}=1$, then set $\tt Q←RLINK(R)$ and terminate.

F4. Set $\tt R←RLINK(R)$, and terminate with $\tt Q←R$ if $\tt P=LLINK(R)$. Otherwise set $\tt Q←LLINK(Q)$ zero or more times until ${\tt LTAG(Q)}=1$, then set $\tt Q←LLINK(Q)$ and terminate.

The average running time of Algorithm F is $O(1)$ when P is a random node of the tree. For if we count only the steps $\tt Q←LLINK(Q)$ when P is a right child, or only the steps $\tt R←RLINK(R)$ when P is a left child, each link is traversed for exactly one node P.

20.

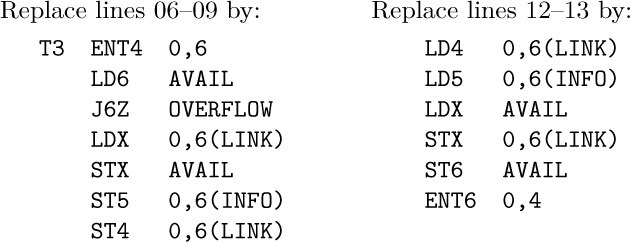

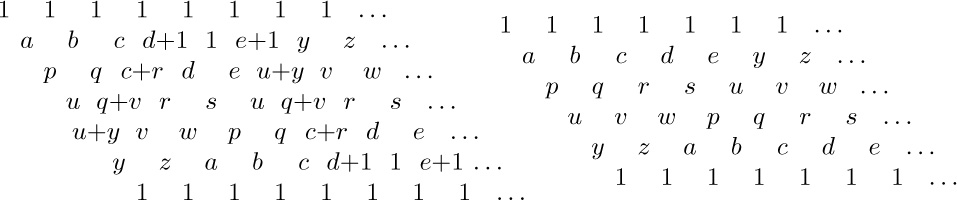

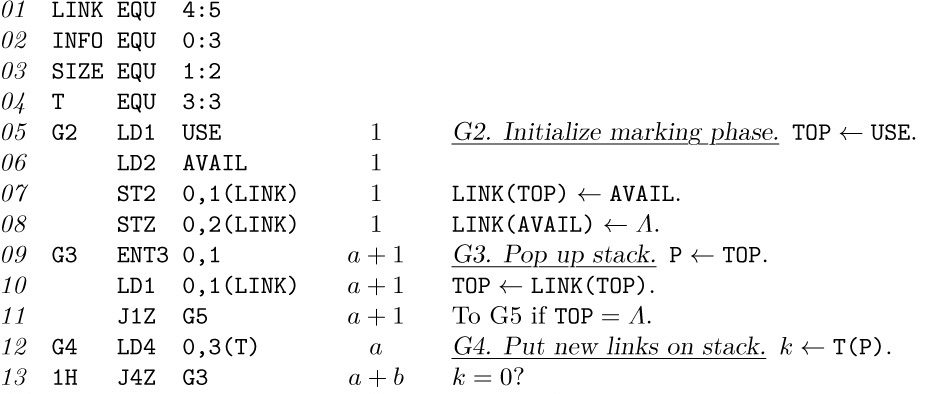

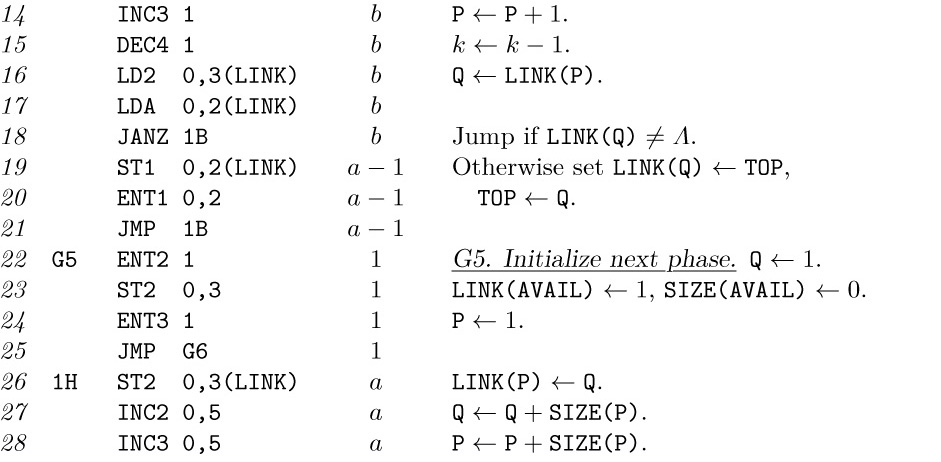

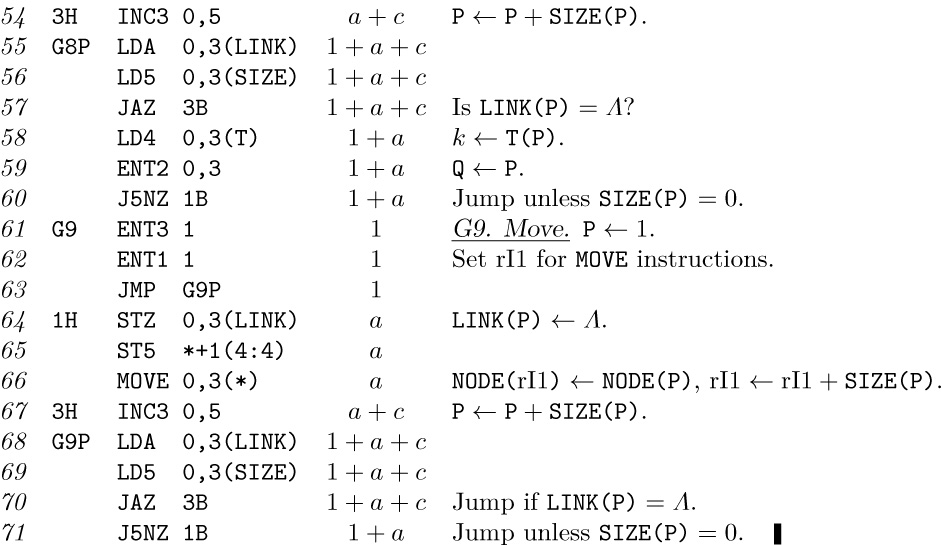

If two more lines of code are added at line 06

with appropriate changes in lines 10 and 11, the running time goes down from $(30n+a+4)u$ to $(27a+6n-22)u$. (This same device would reduce the running time of Program T to $(12a+6n-7)u$, which is a slight improvement, if we set $a=(n+1)/2.)$

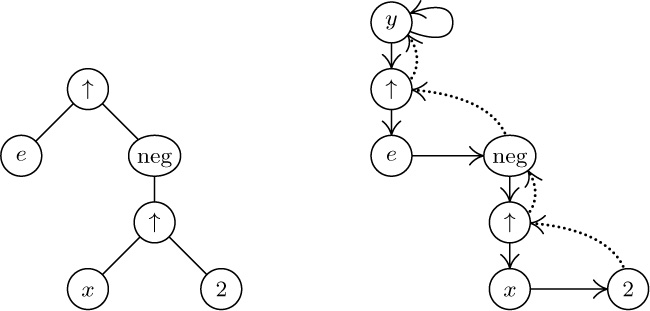

21. The following solution by Joseph M. Morris [Inf. Proc. Letters 9 (1979), 197–200] traverses also in preorder (see exercise 18).

U1. [Initialize.] Set $\tt P←T$ and $\tt R←\varLambda$.

U2. [Done?] If ${\tt P}=\varLambda$, the algorithm terminates.

U3. [Look left.] Set $\tt Q←LLINK(P)$. If ${\tt Q}=\varLambda$, visit $\tt NODE(P)$ in preorder and go to U6.

U4. [Search for thread.] Set $\tt Q←RLINK(Q)$ zero or more times until either Q = R or ${\tt RLINK(Q)}=\varLambda$.

U5. [Insert or remove thread.] If $\tt Q\neq R$, set $\tt RLINK(Q)←P$ and go to U8. Otherwise set $\tt RLINK(Q)←\varLambda$ (it had been changed temporarily to P, but we’ve now traversed $\tt P\rm\unicode{39}s$ left subtree).

U6. [Inorder visit.] Visit $\tt NODE(P)$ in inorder.

U7. [Go to right or up.] Set $\tt R←P$, $\tt P←RLINK(P)$, and return to U2.

U8. [Preorder visit.] Visit $\tt NODE(P)$ in preorder.

U9. [Go to left.] Set $\tt P←LLINK(P)$ and return to step U3.

Morris also suggested a slightly more complicated way to traverse in postorder.

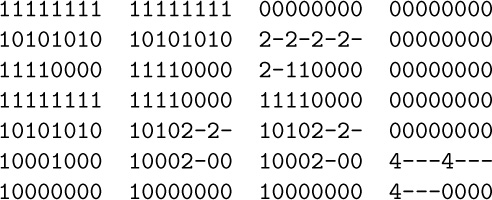

A completely different solution was found by J. M. Robson [Inf. Proc. Letters 2 (1973), 12–14]. Let’s say that a node is “full” if its LLINK and RLINK are nonnull, “empty” if its LLINK and RLINK are both empty. Robson found a way to maintain a stack of pointers to the full nodes whose right subtrees are being visited, using the link fields in empty nodes!

Yet another way to avoid an auxiliary stack was discovered independently by G. Lindstrom and B. Dwyer, Inf. Proc. Letters 2 (1973), 47–51, 143–145. Their algorithm traverses in triple order—it visits every node exactly three times, once in each of preorder, inorder, and postorder—but it does not know which of the three is currently being done.

W1. [Initialize.] Set $\tt P←T$ and $\tt Q←S$, where S is a sentinel value — any number that is known to be different from any link in the tree (e.g., $-1$).

W2. [Bypass null.] If ${\tt P}=\varLambda$, set $\tt P←Q$ and $\tt Q←\varLambda$.

W3. [Done?] If P = S, terminate the algorithm. (We will have Q = T at termination.)

W4. [Visit.] Visit $\tt NODE(P)$.

W5. [Rotate.] Set $\tt R←LLINK(P)$, $\tt LLINK(P)←RLINK(P)$, $\tt RLINK(P)←Q$, $\tt Q←P$, $\tt P←R$, and return to W2.

Correctness follows from the fact that if we start at W2 with P pointing to the root of a binary tree T and Q pointing to X, where X is not a link in that tree, the algorithm will traverse the tree in triple order and reach step W3 with P = X and Q = T.

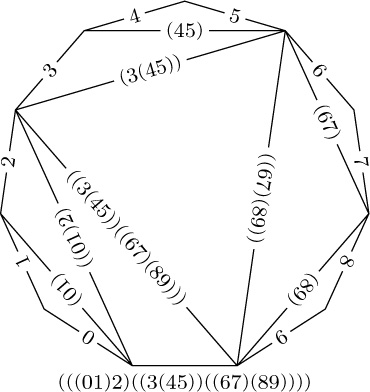

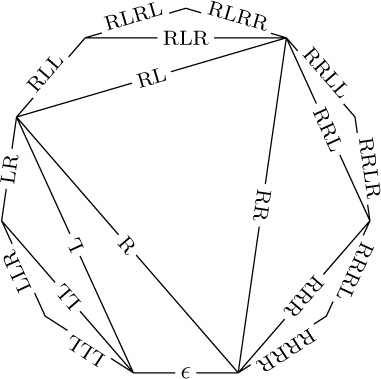

If $\alpha({\tt T})=x_{1}x_{2}\ldots x_{3n}$ is the resulting sequence of nodes in triple order, we have $\alpha({\tt T})={\tt T}\;\alpha({\tt LLINK(T)})\;{\tt T}\;\alpha({\tt RLINK(T)})\tt\;T$. Therefore, as Lindstrom observed, the three subsequences $x_{1}x_{4}\ldots x_{3n-2},x_{2}x_{5}\ldots x_{3n-1},x_{3}x_{6}\ldots x_{3n}$ each include every tree node just once. (Since $x_{j+1}$ is either the parent or child of $x_{j}$, these subsequences visit the nodes in such a way that each is at most three links away from its predecessor. Section 7.2.1.6 describes a general traversal scheme called prepostorder that has this property not only for binary trees but for trees in general.)

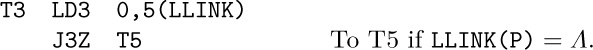

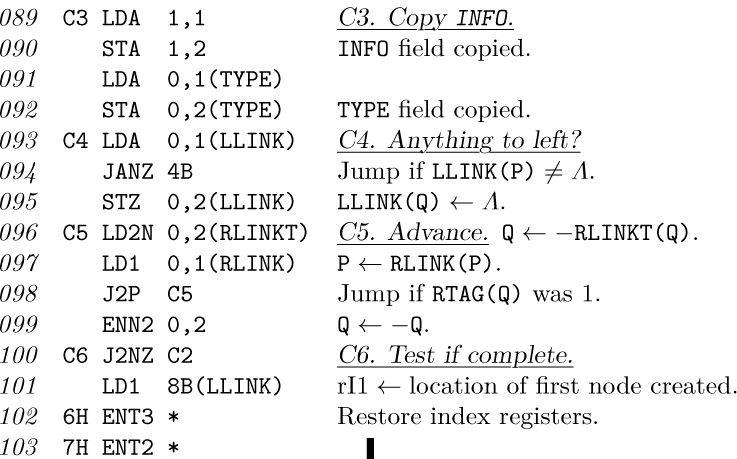

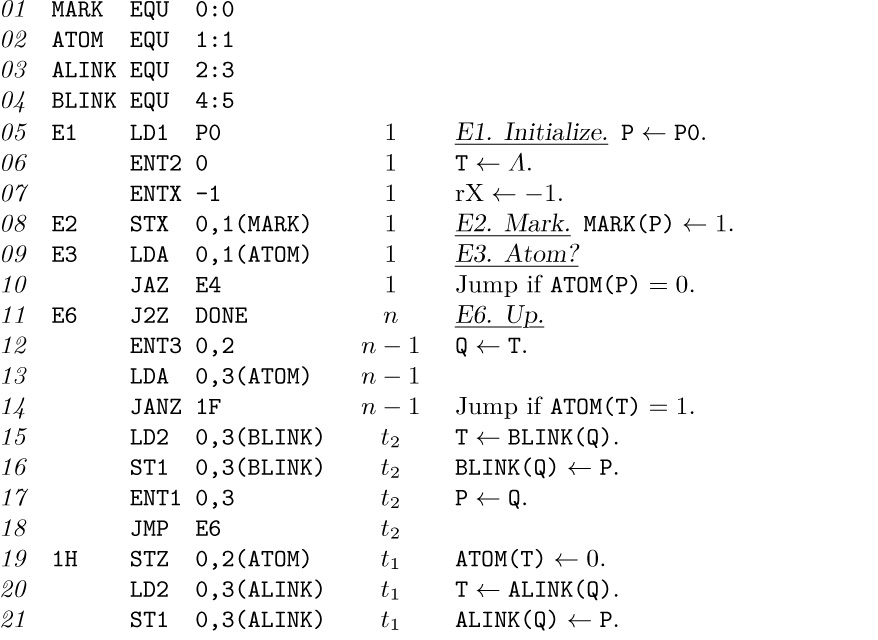

22. This program uses the conventions of Programs T and S, with Q in rI6 and/or rI4. The old-fashioned MIX computer is not good at comparing index registers for equality, so variable R is omitted and the test “Q = R” is changed to “${\tt RLINK(Q)}=\tt P$”.

The total running time is $21n+6a-3-14b$, where n is the number of nodes, a is the number of null RLINKs (hence $a-1$ is the number of nonnull LLINKs), and b is the number of nodes on the tree’s “right spine” T, $\tt RLINK(T)$, $\tt RLINK(RLINK(T))$, etc.

23. Insertion to the right: $\tt RLINKT(Q)←RLINKT(P)$, $\tt RLINK(P)←Q$, ${\tt RTAG(P)}←0$, $\tt LLINK(Q)←\varLambda$. Insertion to the left, assuming ${\tt LLINK(P)}=\varLambda$: Set $\tt LLINK(P)←Q$, $\tt LLINK(Q)←\varLambda$, $\tt RLINK(Q)←P$, ${\tt RTAG(Q)}←1$. Insertion to the left, between P and $\tt LLINK(P)\neq\varLambda$: Set $\tt R←LLINK(P)$, $\tt LLINK(Q)←R$, and then set $\tt R←RLINK(R)$ zero or more times until ${\tt RTAG(R)}=1$; finally set $\tt RLINK(R)←Q$, $\tt LLINK(P)←Q$, $\tt RLINK(Q)←P$, ${\tt RTAG(Q)}←1$.

(A more efficient algorithm for the last case can be used if we know a node F such that $\tt P=LLINK(F)$ or $\tt P=RLINK(F)$; assuming the latter, for example, we could set $\tt INFO(P)\leftrightarrow INFO(Q)$, $\tt RLINK(F)←Q$, $\tt LLINK(Q)←P$, $\tt RLINKT(Q)←RLINKT(P)$, $\tt RLINK(P)←Q$, ${\tt RTAG(P)}←1$. This takes a fixed amount of time, but it is generally not recommended because it switches nodes around in memory.)

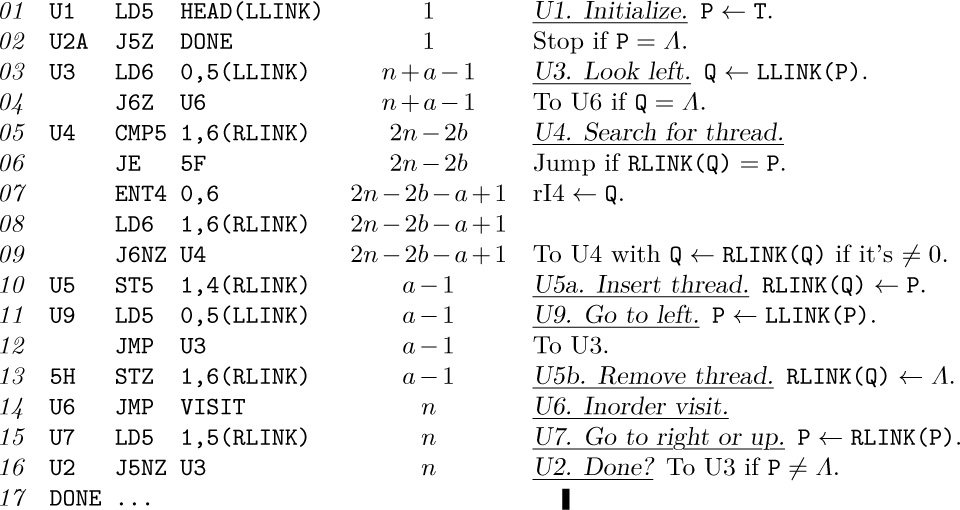

24. No:

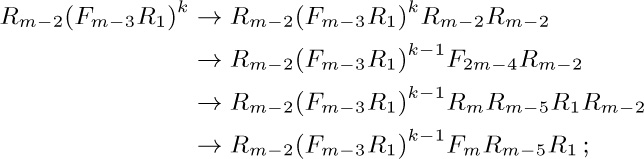

25. We first prove (b), by induction on the number of nodes in T, and similarly (c). Now (a) breaks into several cases; write $T\preceq_{1}T'$ if (i) holds, $T\preceq_{2}T'$ if (ii) holds, etc. Then $T\preceq_{1}T'$ and $T'\preceq T''$ implies $T\preceq_{1}T''$; $T\preceq_{2}T'$ and $T'\preceq T''$ implies $T\preceq_{2}T''$; and the remaining two cases are treated by proving (a) by induction on the number of nodes in T.

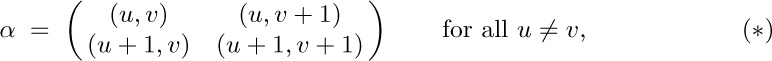

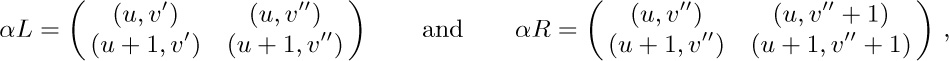

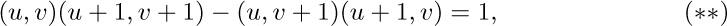

26. If the double order of T is $(u_{1},d_{1}),(u_{2},d_{2}),\ldots,(u_{2n},d_{2n})$ where the $u\rm\unicode{39}s$ are nodes and the $d\rm\unicode{39}s$ are 1 or 2, form the “trace” of the tree $(v_{1},s_{1}),(v_{2},s_{2}),\ldots,(v_{2n},s_{2n})$, where $v_{j}={\rm info}(u_{j})$, and $s_{j}=l(u_{j})$ or $r(u_{j})$ according as $d_{j}=1\;\rm or\;2$. Now $T\preceq T'$ if and only if the trace of T (as defined here) lexicographically precedes or equals the trace of $T'$. Formally, this means that we have either $n≤n'$ and $(v_{j},s_{j})=(v'_{j},s'_{j})$ for $1≤j≤2n$, or else there is a k for which $(v_{j},s_{j})=(v'_{j},s'_{j})$ for $1≤j\lt k$ and either $v_{k}\prec v'_{k}$ or $v_{k}=v'_{k}$ and $s_{k}\lt s'_{k}$.

27. R1. [Initialize.] Set $\tt P←HEAD$, $\tt P'←HEAD'$; these are the respective list heads of the given right-threaded binary trees. Go to R3.

R2. [Check INFO.] If ${\tt INFO(P)\prec INFO(P')}$, terminate $(T\prec T')$; if ${\tt INFO(P)\succ INFO(P')}$, terminate $(T\succ T')$.

R3. [Go to left.] If ${\tt LLINK(P)}=\varLambda={\tt LLINK(P')}$, go to R4; if ${\tt LLINK(P)}=\varLambda\neq{\tt LLINK(P')}$, terminate $(T\prec T')$; if ${\tt LLINK(P)\neq\varLambda=LLINK(P')}$, terminate $(T\succ T')$; otherwise set $\tt P←LLINK(P)$, $\tt P'←LLINK(P')$, and go to R2.

R4. [End of tree?] If $\tt P=HEAD$ (or, equivalently, if $\tt P'=HEAD'$), terminate (T is equivalent to $T'$).

R5. [Go to right.] If ${\tt RTAG(P)}=1={\tt RTAG(P')}$, set $\tt P←RLINK(P)$, $\tt P'←RLINK(P')$, and go to R4. If ${\tt RTAG(P)}=1\neq\tt RTAG(P')$, terminate $(T\prec T')$. If ${\tt RTAG(P)}\neq1={\tt RTAG(P')}$, terminate $(T\succ T')$. Otherwise, set $\tt P←RLINK(P)$, $\tt P'←RLINK(P')$, and go to R2.

To prove the validity of this algorithm (and therefore to understand how it works), one may show by induction on the size of the tree $T_{0}$ that the following statement is valid: Starting at step R2 with P and $\tt P'$ pointing to the roots of two nonempty right-threaded binary trees $T_{0}$ and $T'_{0}$, the algorithm will terminate if $T_{0}$ and $T'_{0}$ are not equivalent, indicating whether $T_{0}\prec T'_{0}$ or $T_{0}\succ T'_{0}$; the algorithm will reach step R4 if $T_{0}$ and $T'_{0}$ are equivalent, with P and $\tt P'$ then pointing respectively to the successor nodes of $T_{0}$ and $T'_{0}$ in symmetric order.

28. Equivalent and similar.

29. Prove by induction on the size of T that the following statement is valid: Starting at step C2 with P pointing to the root of a nonempty binary tree T and with Q pointing to a node that has empty left and right subtrees, the procedure will ultimately arrive at step C6 after setting $\tt INFO(Q)←INFO(P)$ and attaching copies of the left and right subtrees of $\tt NODE(P)$ to $\tt NODE(Q)$, and with P and Q pointing respectively to the preorder successor nodes of the trees T and $\tt NODE(Q)$.

30. Assume that the pointer T in (2) is $\tt LLINK(HEAD)$ in (10). Thus ${\tt LOC(T)}=\tt HEAD$, and $\tt HEAD\$$ is the first node of the binary tree in symmetric order.

L1. [Initialize.] Set $\tt Q←HEAD$, $\tt RLINK(Q)←Q$.

L2. [Advance.] Set $\tt P←Q\$$. (See below.)

L3. [Thread.] If ${\tt RLINK(Q)}=\varLambda$, set $\tt RLINK(Q)←P$, $\tt RTAG(Q)←1$; otherwise set ${\tt RTAG(Q)}←0$. If ${\tt LLINK(P)}=\varLambda$, set $\tt LLINK(P)←Q$, ${\tt LTAG(P)}←1$; otherwise set ${\tt LTAG(P)}←0$.

L4. [Done?] If $\tt P\neq HEAD$, set $\tt Q←P$ and return to L2.

Step L2 of this algorithm implies the activation of an inorder traversal coroutine like Algorithm T, with the additional proviso that Algorithm T visits HEAD after it has fully traversed the tree. This notation is a convenient simplification in the description of tree algorithms, since we need not repeat the stack mechanisms of Algorithm T over and over again. Of course Algorithm S cannot be used during step L2, since the tree hasn’t been threaded yet. But Algorithm U in answer 21 can be used in step L2; it provides us with a very pretty method that threads a tree without using any auxiliary stack.

31. X1. Set $\tt P←HEAD$.

X2. Set $\tt Q←P\$$ (using, say, Algorithm S, modified for a right-threaded tree).

X3. If $\tt P\neq HEAD$, set $\tt AVAIL\Leftarrow P$.

X4. If $\tt Q\neq HEAD$, set $\tt P←Q$ and go back to X2.

X5. Set $\tt LLINK(HEAD)←\varLambda$.

Other solutions that decrease the length of the inner loop are clearly possible, although the order of the basic steps is somewhat critical. The stated procedure works because we never return a node to available storage until after Algorithm S has looked at both its LLINK and its RLINK; as observed in the text, each of these links is used precisely once during a complete tree traversal.

32. $\tt RLINK(Q)←RLINK(P)$, $\tt SUC(Q)←SUC(P)$, $\tt SUC(P)←RLINK(P)←Q$, $\tt PRED(Q)←P$, $\tt PRED(SUC(Q))←Q$.

33. Inserting $\tt NODE(Q)$ just to the left and below $\tt NODE(P)$ is quite simple: Set $\tt LLINKT(Q)←LLINKT(P)$, $\tt LLINK(P)←Q$, ${\tt LTAG(P)}←0$, $\tt RLINK(Q)←\varLambda$. Insertion to the right is considerably harder, since it essentially requires finding $*\tt Q$, which is of comparable difficulty to finding $\tt Q\sharp$ (see exercise 19); the node-moving technique discussed in exercise 23 could perhaps be used. So general insertions are more difficult with this type of threading. But the insertions required by Algorithm C are not as difficult as insertions are in general, and in fact the copying process is slightly faster for this kind of threading:

C1. Set $\tt P←HEAD$, $\tt Q←U$, go to C4. (The assumptions and philosophy of Algorithm C in the text are being used throughout.)

C2. If $\tt RLINK(P)\neq\varLambda$, set $\tt R\Leftarrow AVAIL$, $\tt LLINK(R)←LLINK(Q)$, ${\tt LTAG(R)}←1$, $\tt RLINK(R)←\varLambda$, $\tt RLINK(Q)←LLINK(Q)←R$.

C3. Set $\tt INFO(Q)←INFO(P)$.

C4. If ${\tt LTAG(P)}=0$, set $\tt R\Leftarrow AVAIL$, $\tt LLINK(R)←LLINK(Q)$, ${\tt LTAG(R)}←1$, $\tt RLINK(R)←\varLambda$, $\tt LLINK(Q)←R$, ${\tt LTAG(Q)}←0$.

C5. Set $\tt P←LLINK(P)$, $\tt Q←LLINK(Q)$.

C6. If $\tt P\neq HEAD$, go to C2.

The algorithm now seems almost too simple to be correct!

Algorithm C for threaded or right-threaded binary trees takes slightly longer due to the extra time to calculate P*, Q* in step C5.

It would be possible to thread $\tt RLINK\rm s$ in the usual way or to put $\tt\sharp P$ in $\tt RLINK(P)$, in conjunction with this copying method, by appropriately setting the values of $\tt RLINK(R)$ and $\tt RLINKT(Q)$ in steps C2 and C4.

34. A1. Set $\tt Q←P$, and then repeatedly set $\tt Q←RLINK(Q)$ zero or more times until ${\tt RTAG(Q)}=1$.

A2. Set $\tt R←RLINK(Q)$. If ${\tt LLINK(R)}=\tt P$, set $\tt LLINK(R)←\varLambda$. Otherwise set $\tt R←LLINK(R)$, then repeatedly set $\tt R←RLINK(R)$ zero or more times until ${\tt RLINK(R)}=\tt P$; then finally set $\tt RLINKT(R)←RLINKT(Q)$. (This step has removed $\tt NODE(P)$ and its subtrees from the original tree.)

A3. Set $\tt RLINK(Q)←HEAD$, $\tt LLINK(HEAD)←P$.

(The key to inventing and/or understanding this algorithm is the construction of good “before and after” diagrams.)

36. No; see the answer to exercise 1.2.1–15(e).

37. If ${\tt LLINK(P)}={\tt RLINK(P)}=\varLambda$ in the representation (2), let ${\tt LINK(P)}=\varLambda$; otherwise let ${\tt LINK(P)}=\tt Q$ where $\tt NODE(Q)$ corresponds to $\tt NODE(LLINK(P))$ and $\tt NODE(Q+1)$ to $\tt NODE(RLINK(P))$. The condition $\tt LLINK(P)$ or ${\tt RLINK(P)}=\varLambda$ is represented by a sentinel in $\tt NODE(Q)$ or $\tt NODE(Q+1)$ respectively. This representation uses between n and $2n-1$ memory positions; under the stated assumptions, (2) would require 18 words of memory, compared to 11 in the present scheme. Insertion and deletion operations are approximately of equal efficiency in either representation. But this representation is not quite as versatile in combination with other structures.

1. If B is empty, $F(B)$ is an empty forest. Otherwise, $F(B)$ consists of a tree T plus the forest $F({\rm right}(B))$, where ${\rm root}(T)={\rm root}(B)$ and ${\rm subtrees}(T)=F({\rm left}(B))$.

2. The number of zeros in the binary notation is the number of decimal points in the decimal notation; the exact formula for the correspondence is

$\large a_{1}.a_{2}.\cdots.a_{k}\leftrightarrow 1^{a_{1}}01^{a_{2}-1}0\ldots01^{a_{k}-1}$,

where $1^{a}$ denotes a ones in a row.

3. Sort the Dewey decimal notations for the nodes lexicographically (from left to right, as in a dictionary), placing a shorter sequence $a_{1}.\cdots.a_{k}$ in front of its extensions $a_{1}.\cdots.a_{k}.\cdots.a_{r}$ for preorder, and behind its extensions for postorder. Thus, if we were sorting words instead of sequences of numbers, we would place the words cat, cataract in the usual dictionary order, to get preorder; we would reverse the order of initial subwords $(cataract,cat)$, to get postorder. These rules are readily proved by induction on the size of the tree.

4. True, by induction on the number of nodes.

5. (a) Inorder. (b) Postorder. It is interesting to formulate rigorous induction proofs of the equivalence of these traversal algorithms.

6. We have ${\rm preorder}(T)={\rm preorder}(T')$, and ${\rm postorder}(T)={\rm inorder}(T')$, even if T has nodes with only one child. The remaining two orders are not in any simple relation; for example, the root of T comes at the end in one case and about in the middle in the other.

7. (a) Yes; (b) no; (c) no; (d) yes. Note that reverse preorder of a forest equals postorder of the left-right reversed forest (in the sense of mirror reflection).

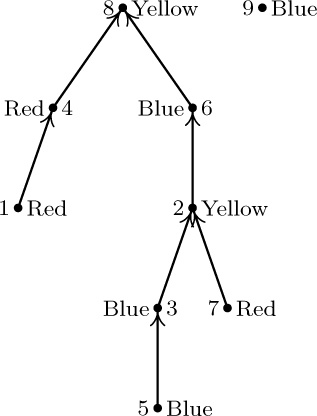

8. $T\preceq T'$ means that either ${\rm info}({\rm root}(T))\prec{\rm info}({\rm root}(T'))$, or these info’s are equal and the following condition holds: Suppose the subtrees of ${\rm root}(T)$ are $T_{1},\ldots,T_{n}$ and the subtrees of ${\rm root}(T')$ are $T'_{1},\ldots,T'_{n'}$, and let $k\ge0$ be as large as possible such that $T_{j}$ is equivalent to $T'_{j}$ for $1≤j≤k$. Then either $k=n$ or $k\lt n$ and $T_{k+1}\preceq T'_{k+1}$.

9. The number of nonterminal nodes is one less than the number of right links that are Λ, in a nonempty forest, because the null right links correspond to the rightmost child of each nonterminal node, and also to the root of the rightmost tree in the forest. (This fact gives another proof of exercise 2.3.1–14, since the number of null left links is obviously equal to the number of terminal nodes.)

10. The forests are similar if and only if $n=n'$ and $d(u_{j})=d(u'_{j})$, for $1≤j≤n$; they are equivalent if and only if in addition ${\rm info}(u_{j})={\rm info}(u'_{j})$, $1≤j≤n$. The proof is similar to the previous proof, by generalizing Lemma 2.3.1P; let $f(u)=d(u)-1$.

11.

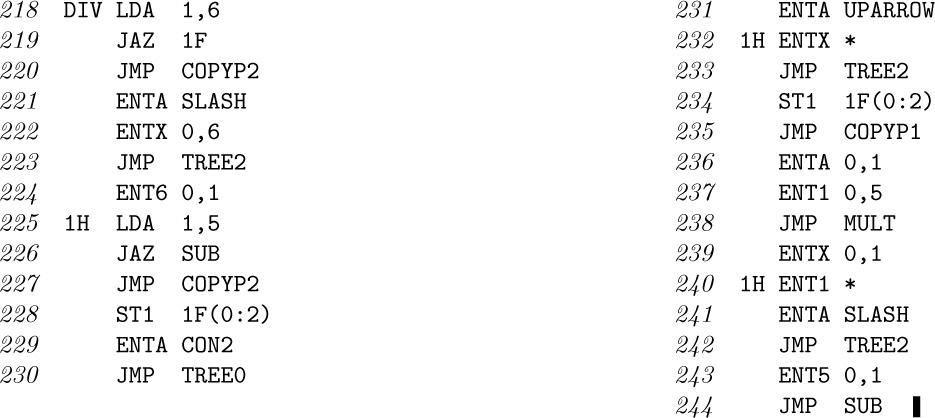

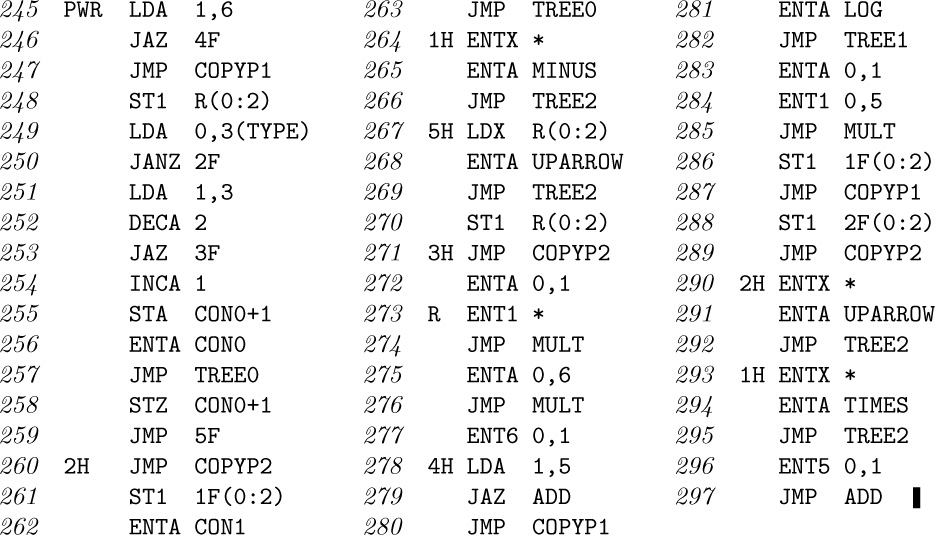

12. If ${\tt INFO(Q1)}\neq0$: Set $\tt R←COPY(P1)$; then if ${\tt TYPE(P2)}=0$ and ${\tt INFO(P2)}\neq2$, set ${\tt R←TREE(“\uparrow”,R,TREE(INFO(P2)}-1\tt))$; if ${\tt TYPE(P2)}\neq0$, set ${\tt R←TREE(“\uparrow”,R,TREE(“-”,COPY(P2),TREE(}1\tt)))$; then set $\tt Q1←MULT(Q1,MULT(COPY(P2),R))$.

If ${\tt INFO(Q)}\neq0$: Set $\tt Q←TREE(“\times”,MULT(TREE(“\ln”,COPY(P1)),Q),TREE(“\uparrow”,COPY(P1),COPY(P2)))$.

Finally go to ${\tt DIFF[}4\tt]$.

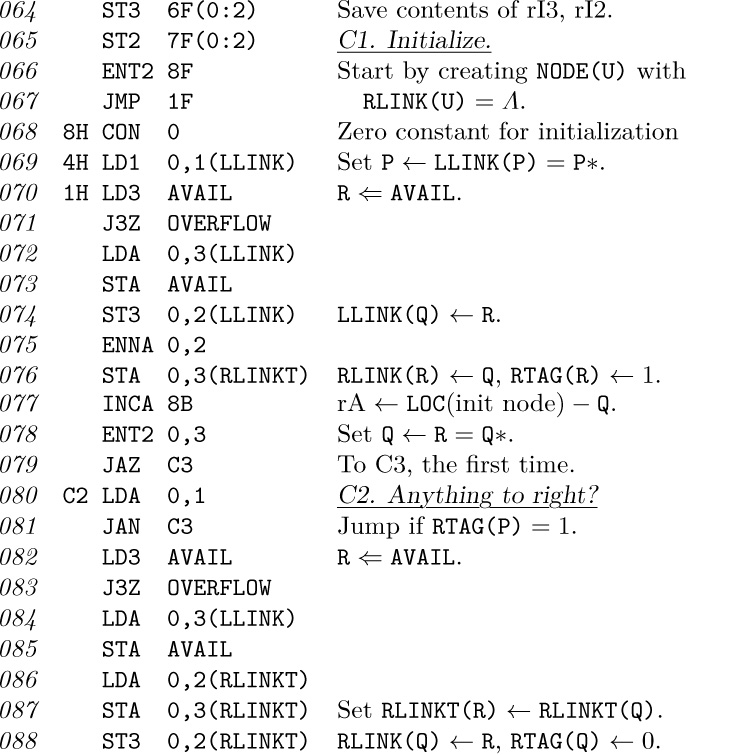

13. The following program implements Algorithm 2.3.1C with $\rm rI1≡\tt P$, $\rm rI2≡\tt Q$, $\rm rI3≡\tt R$, and with appropriate changes to the initialization and termination conditions:

14. Let a be the number of nonterminal (operator) nodes copied. The number of executions of the various lines in the previous program is as follows: $064{-}067,1$; $069,a$; $070{-}079,a+1$; $080{-}081,n-1$; $082{-}088,n-1-a$; $089{-}094,n$; $095,n-a$; $096{-}098,n+1$; $099{-}100,n-a$; $101{-}103,1$. The total time is $(36n+22)u$; we use about 20% of the time to get available nodes, 40% to traverse, and 40% to copy the INFO and LINK information.

15. Comments are left to the reader.

16. Comments are left to the reader.

17. References to early work on such problems can be found in a survey article by J. Sammet, CACM 9 (1966), 555–569.

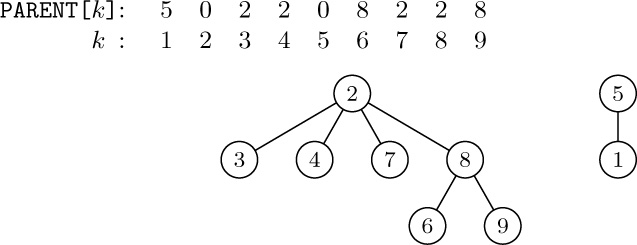

18. First set ${\tt LLINK[}j{\tt]←RLINK[}j{\tt]}←j$ for all j, so that each node is in a circular list of length 1. Then for $j=n$, $n-1,\ldots,1$ (in this order), if ${\tt PARENT[}j{\tt]}=0$ set $r←j$, otherwise insert the circular list starting with j into the circular list starting with ${\tt PARENT[}j\tt]$ as follows: $k←{\tt PARENT[}j\tt]$, $l←{\tt RLINK[}k\tt]$, $i←{\tt LLINK[}j\tt]$, ${\tt LLINK[}j{\tt]}←k$, ${\tt RLINK[}k{\tt]}←j$, ${\tt LLINK[}l{\tt]}←i$, ${\tt RLINK[}i{\tt]}←l$. This works because (a) each nonroot node is always preceded by its parent or by a descendant of its parent; (b) nodes of each family appear in their parent’s list, in order of location; (c) preorder is the unique order satisfying (a) and (b).

20. If u is an ancestor of v, it is immediate by induction that u precedes v in preorder and follows v in postorder. Conversely, suppose u precedes v in preorder and follows v in postorder; we must show that u is an ancestor of v. This is clear if u is the root of the first tree. If u is another node of the first tree, v must be also, since u follows v in postorder; so induction applies. Similarly if u is not in the first tree, v must not be either, since u precedes v in preorder. (This exercise also follows easily from the result of exercise 3. It gives us a quick test for ancestorhood, if we know each node’s position in preorder and postorder.)

21. If $\tt NODE(P)$ is a binary operator, pointers to its two operands are ${\tt P1}=\tt LLINK(P)$ and ${\tt P2}={\tt RLINK(P1)}=\tt\$P$. Algorithm D makes use of the fact that ${\tt P2\$}=\tt P$, so that $\tt RLINK(P1)$ may be changed to Q1, a pointer to the derivative of $\tt NODE(P1)$; then $\tt RLINK(P1)$ is reset later in step D3. For ternary operations, we would have, say, ${\tt P1}=\tt LLINK(P)$, ${\tt P2}=\tt RLINK(P1)$, ${\tt P3}={\tt RLINK(P2)}=\tt\$P$, so it is difficult to generalize the binary trick. After computing the derivative Q1, we could set $\tt RLINK(P1)←Q1$ temporarily, and then after computing the next derivative Q2 we could set $\tt RLINK(Q2)←Q1$ and $\tt RLINK(P2)←Q2$ and reset $\tt RLINK(P1)←P2$. But this is certainly inelegant, and it becomes progressively more so as the degree of the operator becomes higher. Therefore the device of temporarily changing $\tt RLINK(P1)$ in Algorithm D is definitely a trick, not a technique. A more aesthetic way to control a differentiation process, because it generalizes to operators of higher degree and does not rely on isolated tricks, can be based on Algorithm 2.3.3F; see exercise 2.3.3–3.

22. From the definition it follows immediately that the relation is transitive; that is, if $T\subseteq T'$ and $T'\subseteq T''$ then $T\subseteq T''$. (In fact the relation is easily seen to be a partial ordering.) If we let f be the function taking nodes into themselves, clearly $l(T)\subseteq T$ and $r(T)\subseteq T$. Therefore if $T\subseteq l(T')$ or $T\subseteq r(T')$ we must have $T\subseteq T'$.

Suppose $f_{l}$ and $f_{r}$ are functions that respectively show $l(T)\subseteq l(T')$ and $r(T)\subseteq r(T')$. Let $f(u)=f_{l}(u)$ if u is in $l(T)$, $f(u)={\rm root}(T')$ if u is ${\rm root}(T)$, otherwise $f(u)=f_{r}(u)$. Now it follows easily that f shows $T\subseteq T'$; for example, if we let $r'(T)$ denote $r(T)\setminus{\rm root}(T)$ we have ${\rm preorder}(T)={\rm root}(T)\;{\rm preorder}(l(T))\;{\rm preorder}(r'(T))$; ${\rm preorder}(T')=f({\rm root}(T))\;{\rm preorder}(l(T'))\;{\rm preorder}(r'(T'))$.

The converse does not hold: Consider the subtrees with roots b and $b'$ in Fig. 25.

1. Yes, we can reconstruct them just as (3) is deduced from (4), but interchanging LTAG and RTAG, LLINK and RLINK, and using a queue instead of a stack.

2. Make the following changes in Algorithm F: Step F1, change to “last node of the forest in preorder.” Step F2, change “$f(x_{d}),\ldots,f(x_{1})$” to “$f(x_{1}),\ldots,f(x_{d})$” in two places. Step F4, “If P is the first node in preorder, terminate the algorithm. (Then the stack contains $f({\rm root}(T_{1})),\ldots,f({\rm root}(T_{m}))$, from top to bottom, where $T_{1},\ldots,T_{m}$ are the trees of the given forest, from left to right.) Otherwise set P to its predecessor in preorder (${\tt P←P}-c$ in the given representation), and return to F2.”

3. In step D1, also set $\tt S←\varLambda$. (S is a link variable that links to the top of the stack.) Step D2 becomes, for example, “If $\tt NODE(P)$ denotes a unary operator, set $\tt Q←S$, $\tt S←RLINK(Q)$, ${\tt P1←LLINK(P)}$; if it denotes a binary operator, set $\tt Q←S$, $\tt Q1←RLINK(Q)$, ${\tt S←RLINK(Q1)}$, $\tt P1←LLINK(P)$, $\tt P2←RLINK(P1)$. Then perform $\tt DIFF[TYPE(P)]$.” Step D3 becomes “Set $\tt RLINK(Q)←S$, $\tt S←Q$.” Step D4 becomes “Set $\tt P←P\$$.” The operation $\tt LLINK(DY)←Q$ may be avoided in step D5 if we assume that $\tt S≡LLINK(DY)$. This technique clearly generalizes to ternary and higher-order operators.

4. A representation like (10) takes $n-m$ LLINKs and $n+(n-m)$ RLINKs. The difference in total number of links is $n-2m$ between the two forms of representation. Arrangement (10) is superior when the LLINK and INFO fields require about the same amount of space in a node and when m is rather large, namely when the nonterminal nodes have rather large degrees.

5. It would certainly be silly to include threaded RLINKs, since an RLINK thread just points to $\tt PARENT$ anyway. Threaded LLINKs as in 2.3.2–(4) would be useful if it is necessary to move leftward in the tree, for example if we wanted to traverse a tree in reverse postorder, or in family order; but these operations are not significantly harder without threaded LLINKs unless the nodes tend to have very high degrees.

6. L1. Set $\tt P←FIRST$, $\tt FIRST←\varLambda$.

L2. If ${\tt P}=\varLambda$, terminate. Otherwise set $\tt Q←RLINK(P)$.

L3. If ${\tt PARENT(P)}=\varLambda$, set $\tt RLINK(P)←FIRST$, $\tt FIRST←P$; otherwise set $\tt R←PARENT(P)$, $\tt RLINK(P)←LCHILD(R)$, $\tt LCHILD(R)←P$.

L4. Set $\tt P←Q$ and return to L2.

7. $\{1,5\}\{2,3,4,7\}\{6,8,9\}$.

8. Perform step E3 of Algorithm E, then test if $j=k$.

9.

10. One idea is to set $\tt PARENT$ of each root node to the negative of the number of nodes in its tree (these values being easily kept up to date); then if $|{\tt PARENT[}j{\tt]}|\gt|{\tt PARENT[}k{\tt]}|$ in step E4, the roles of j and k are interchanged. This technique (due to M. D. McIlroy) ensures that each operation takes $O(\log n)$ steps.

For still more speed, we can use the following suggestion due to Alan Tritter: In step E4, set ${\tt PARENT[}x{\tt]}←k$ for all values $x\neq k$ that were encountered in step E3. This makes an extra pass up the trees, but it collapses them so that future searches are faster. (See Section 7.4.1.)

11. It suffices to define the transformation that is done for each input $({\tt P},j,{\tt Q},k)$:

T1. If $\tt PARENT(P)\neq\varLambda$, set $j←j+\tt DELTA(P)$, $\tt P←PARENT(P)$, and repeat this step.

T2. If $\tt PARENT(Q)\neq\varLambda$, set $k←k+\tt DELTA(Q)$, $\tt Q←PARENT(Q)$, and repeat this step.

T3. If P = Q, check that $j=k$ (otherwise the input erroneously contains contradictory equivalences). If $\tt P\neq Q$, set ${\tt DELTA(Q)}←j-k$, $\tt PARENT(Q)←P$, ${\tt LBD(P)}←\min({\tt LBD(P)},{\tt LBD(Q)}+{\tt DELTA(Q)})$, and ${\tt UBD(P)}←\max({\tt UBD(P)},{\tt UBD(Q)}+{\tt DELTA(Q)})$.

Note: It is possible to allow the ${\tt ARRAY\;X[}l:u\tt]$ declarations to occur intermixed with equivalences, or to allow assignment of certain addresses of variables before others are equivalenced to them, etc., under suitable conditions that are not difficult to understand. For further development of this algorithm, see CACM 7 (1964), 301–303, 506.

12. (a) Yes. (If this condition is not required, it would be possible to avoid the loops on S that appear in steps A2 and A9.) (b) Yes.

13. The crucial fact is that the UP chain leading upward from P always mentions the same variables and the same exponents for these variables as the UP chain leading upward from Q, except that the latter chain may include additional steps for variables with exponent zero. (This condition holds throughout most of the algorithm, except during the execution of steps A9 and A10.) Now we get to step A8 either from A3 or from A10, and in each case it was verified that ${\tt EXP(Q)}\neq0$. Therefore ${\tt EXP(P)}\neq0$, and in particular it follows that $\tt P\neq\varLambda$, $\tt Q\neq\varLambda$, $\tt UP(P)\neq\varLambda$, $\tt UP(Q)\neq\varLambda$; the result stated in the exercise now follows. Thus the proof depends on showing that the UP chain condition stated above is preserved by the actions of the algorithm.

14, 15. See Martin Ward and Hussain Zedan, “Provably correct derivation of algorithms using FermaT,” Formal Aspects of Computing 27 (2015), to appear.

16. We prove (by induction on the number of nodes in a single tree T) that if P is a pointer to T, and if the stack is initially empty, steps F2 through F4 will end with the single value $f({\rm root}(T))$ on the stack. This is true for n = 1. If $n\gt1$, there are $0\lt d={\tt DEGREE}({\rm root}(T))$ subtrees $T_{1},\ldots,T_{d}$; by induction and the nature of a stack, and since postorder consists of $T_{1},\ldots,T_{d}$ followed by ${\rm root}(T)$, the algorithm computes $f(T_{1}),\ldots,f(T_{d})$, and then $f({\rm root}(T))$, as desired. The validity of Algorithm F for forests follows.

17. G1. Set the stack empty, and let P point to the root of the tree (the last node in postorder). Evaluate $f({\tt NODE(P)})$.

G2. Push $\tt DEGREE(P)$ copies of $f({\tt NODE(P)})$ onto the stack.

G3. If P is the first node in postorder, terminate the algorithm. Otherwise set P to its predecessor in postorder (this would be simply ${\tt P←P}-c$ in (9)).

G4. Evaluate $f({\tt NODE(P)})$ using the value at the top of the stack, which is equal to $f({\tt NODE(PARENT(P))})$. Pop this value off the stack, and return to G2.

Note: An algorithm analogous to this one can be based on preorder instead of postorder as in exercise 2. In fact, family order or level order could be used; in the latter case we would use a queue instead of a stack.

18. The $\tt INFO1$ and RLINK tables, together with the suggestion for computing LTAG in the text, give us the equivalent of a binary tree represented in the usual manner. The idea is to traverse this tree in postorder, counting degrees as we go:

P1. Let R, D, and I be stacks that are initially empty; then set ${\tt R}\Leftarrow n+1$, ${\tt D}\Leftarrow 0$, $j←0$, $k←0$.

P2. If ${\rm top}({\tt R})\gt j+1$, go to P5. (If an LTAG field were present, we could have tested ${\tt LTAG[}j{\tt]}=0$ instead of ${\rm top}({\tt R})\gt j+1$.)

P3. If I is empty, terminate the algorithm; otherwise set $i\Leftarrow\tt I$, $k←k+1$, ${\tt INFO2[}k{\tt]←INFO1[}i\tt]$, ${\tt DEGREE[}k{\tt]\Leftarrow D}$.

P4. If ${\tt RLINK[}i{\tt]}=0$, go to P3; otherwise delete the top of R (which will equal ${\tt RLINK[}i\tt]$).

P5. Set ${\rm top}({\tt D})←{\rm top}({\tt D})+1$, $j←j+1$, ${\tt I}\Leftarrow j$, ${\tt D}\Leftarrow 0$, and if ${\tt RLINK[}j{\tt]}\neq0$ set ${\tt R}\Leftarrow{\tt RLINK[}j\tt]$. Go to P2.

19. (a) This property is equivalent to saying that SCOPE links do not cross each other.

(b) The first tree of the forest contains $d_{1}+1$ elements, and we can proceed by induction.

(c) The condition of (a) is preserved when we take minima.

Notes: By exercise 2.3.2–20, it follows that $d_{1}d_{2}\ldots d_{n}$ can also be interpreted in terms of inversions: If the $k\rm th$ node in postorder is the $p_{k}\rm th$ node in preorder, then $d_{k}$ is the number of elements $\gt k$ that appear to the left of k in $p_{1}p_{2}\ldots p_{n}$.

A similar scheme, in which we list the number of descendants of each node in postorder of the forest, leads to sequences of numbers $c_{1}c_{2}\ldots c_{n}$ characterized by the properties (i) $0≤c_{k}\lt k$ and (ii) $k\ge j\ge k-c_{k}$ implies $j-c_{j}\ge k-c_{k}$. Algorithms based on such sequences have been investigated by J. M. Pallo, Comp. J. 29 (1986), 171–175. Notice that $c_{k}$ is the size of the left subtree of the $k\rm th$ node in symmetric order of the corresponding binary tree. We can also interpret $d_{k}$ as the size of the right subtree of the $k\rm th$ node in symmetric order of a suitable binary tree, namely the binary tree that corresponds to the given forest by the dual method of exercise 2.3.2–5.

The relation $d_{k}≤d'_{k}$ for $1≤k≤n$ defines an interesting lattice ordering of forests and binary trees, first introduced in another way by D. Tamari [Thèse (Paris, 1951)]; see exercise 6.2.3–32.

1. $(B,A,C,D,B)$, $(B,A,C,D,E,B)$, $(B,D,C,A,B)$, $(B,D,E,B)$, $(B,E,D,B)$, $(B,E,D,C,A,B)$.

2. Let $(V_{0},V_{1},\ldots,V_{n})$ be a walk of smallest possible length from V to $V'$. If now $V_{j}=V_{k}$ for some $j\lt k$, then $(V_{0},\ldots,V_{j},V_{k+1},\ldots,V_{n})$ would be a shorter walk.

3. (The fundamental path traverses $e_{3}$ and $e_{4}$ once, but cycle $C_{2}$ traverses them $-1$ times, giving a net total of zero.) Traverse the following edges: $e_{1}$, $e_{2}$, $e_{6}$, $e_{7}$, $e_{9}$, $e_{10}$, $e_{11}$, $e_{12}$, $e_{14}$.

4. If not, let $G''$ be the subgraph of $G'$ obtained by deleting each edge $e_{j}$ for which $E_{j}=0$. Then $G''$ is a finite graph that has no cycles and at least one edge, so by the proof of Theorem A there is at least one vertex, V, that is adjacent to exactly one other vertex, $V'$. Let $e_{j}$ be the edge joining V to $V'$; then Kirchhoff’s equation (1) at vertex V is $E_{j}=0$, contradicting the definition of $G''$.

5. $A=1+E_{8}$, $B=1+E_{8}-E_{2}$, $C=1+E_{8}$, $D=1+E_{8}-E_{5}$, $E=1+E_{17}-E_{21}$, $F=1+E''_{13}+E_{17}-E_{21}$, $G=1+E''_{13}$, $H=E_{17}-E_{21}$, $J=E_{17}$, $K=E''_{19}+E_{20}$, $L=E_{17}+E''_{19}+E_{20}-E_{21}$, $P=E_{17}+E_{20}-E_{21}$, $Q=E_{20}$, $R=E_{17}-E_{21}$, $S=E_{25}$.

Note: In this case it is also possible to solve for $E_{2},E_{5},\ldots,E_{25}$ in terms of $A,B,\ldots,S$; hence there are nine independent solutions, explaining why we eliminated six variables in Eq. 1.3.3–(8).

6. (The following solution is based on the idea that we may print out each edge that does not make a cycle with the preceding edges.) Use Algorithm 2.3.3E, with each pair $(a_{i},b_{i})$ representing $a_{i}≡b_{i}$ in the notation of that algorithm. The only change is to print $(a_{i},b_{i})$ if $j\neq k$ in step E4.

To show that this algorithm is valid, we must prove that (a) the algorithm prints out no edges that form a cycle, and (b) if G contains at least one free subtree, the algorithm prints out $n-1$ edges. Define $j≡k$ if there exists a path from $V_{j}$ to $V_{k}$ or if $j=k$. This is clearly an equivalence relation, and moreover $j≡k$ if and only if this relation can be deduced from the equivalences $a_{1}≡b_{1},\ldots,a_{m}≡b_{m}$. Now (a) holds because the algorithm prints out no edges that form a cycle with previously printed edges; (b) is true because ${\tt PARENT[}k{\tt]}=0$ for precisely one k if all vertices are equivalent.

A more efficient algorithm can, however, be based on depth-first search; see Algorithm 2.3.5A and Section 7.4.1.

7. Fundamental cycles: $C_{0}=e_{0}+e_{1}+e_{4}+e_{9}$ (fundamental path is $e_{1}+e_{4}+e_{9}$); $C_{5}=e_{5}+e_{3}+e_{2}$; $C_{6}=e_{6}-e_{2}+e_{4}$; $C_{7}=e_{7}-e_{4}-e_{3}$; $C_{8}=e_{8}-e_{9}-e_{4}-e_{3}$. Therefore we find $E_{1}=1$, $E_{2}=E_{5}-E_{6}$, $E_{3}=E_{5}-E_{7}-E_{8}$, $E_{4}=1+E_{6}-E_{7}-E_{8}$, $E_{9}=1-E_{8}$.

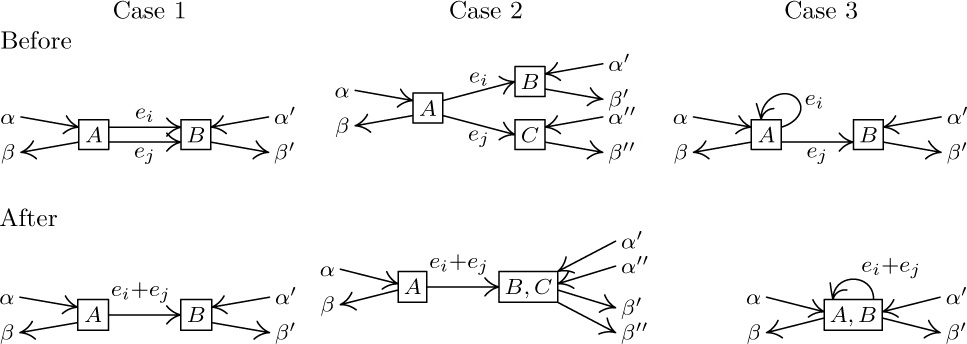

8. Each step in the reduction process combines two arrows $e_{i}$ and $e_{j}$ that start at the same box, and it suffices to prove that such steps can be reversed. Thus we are given the value of $e_{i}+e_{j}$ after combination, and we must assign consistent values to $e_{i}$ and $e_{j}$ before the combination. There are three essentially different situations:

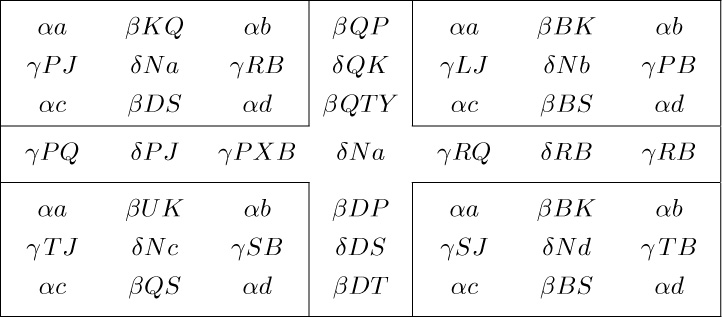

Here A, B, and C stand for vertices or supervertices, and the $\alpha\rm\unicode{39}s$ and $\beta\rm\unicode{39}s$ stand for the other given flows besides $e_{i}+e_{j}$; these flows may each be distributed among several edges, although only one is shown. In Case 1 ($e_{i}$ and $e_{j}$ lead to the same box), we may choose $e_{i}$ arbitrarily, then $e_{j}←(e_{i}+e_{j})-e_{i}$. In Case 2 ($e_{i}$ and $e_{j}$ lead to different boxes), we must set $e_{i}←\beta'-\alpha'$, $e_{j}←\beta''-\alpha''$. In Case 3 ($e_{i}$ is a loop but $e_{j}$ is not), we must set $e_{j}←\beta'-\alpha'$, $e_{i}←(e_{i}+e_{j})-e_{j}$. In each case we have reversed the combination step as desired.

The result of this exercise essentially proves that the number of fundamental cycles in the reduced flow chart is the minimum number of vertex flows that must be measured to determine all the others. In the given example, the reduced flow chart reveals that only three vertex flows (e.g., a, c, d) need to be measured, while the original chart of exercise 7 has four independent edge flows. We save one measurement every time Case 1 occurs during the reduction.

A similar reduction procedure could be based on combining the arrows flowing into a given box, instead of those flowing out. It can be shown that this would yield the same reduced flow chart, except that the supervertices would contain different names.

The construction in this exercise is based on ideas due to Armen Nahapetian and F. Stevenson. For further comments, see A. Nahapetian, Acta Informatica 3 (1973), 37–41; D. E. Knuth and F. Stevenson, BIT 13 (1973), 313–322.

9. Each edge from a vertex to itself becomes a “fundamental cycle” all by itself. If there are $k+1$ edges $e,e',\ldots,e^{(k)}$ between vertices V and $V'$, make k fundamental cycles $e'±e,\ldots,e^{(k)}±e$ (choosing + or − according as the edges go in the opposite or the same direction), and then proceed as if only edge e were present.

Actually this situation would be much simpler conceptually if we had defined a graph in such a way that multiple edges are allowed between vertices, and edges are allowed from a vertex to itself; paths and cycles would be defined in terms of edges instead of vertices. Such a definition is, in fact, made for directed graphs in Section 2.3.4.2.

10. If the terminals have all been connected together, the corresponding graph must be connected in the technical sense. A minimum number of wires will involve no cycles, so we must have a free tree. By Theorem A, a free tree contains $n-1$ wires, and a graph with n vertices and $n-1$ edges is a free tree if and only if it is connected.

11. It is sufficient to prove that when $n\gt1$ and $c(n-1,n)$ is the minimum of the $c(i,n)$, there exists at least one minimum cost tree in which $T_{n-1}$ is wired to $T_{n}$. (For, any minimum cost tree with $n\gt1$ terminals and with $T_{n-1}$ wired to $T_{n}$ must also be a minimum cost tree with $n-1$ terminals if we regard $T_{n-1}$ and $T_{n}$ as “common,” using the convention stated in the algorithm.)

To prove the statement above, suppose we have a minimum cost tree in which $T_{n-1}$ is not wired to $T_{n}$. If we add the wire $T_{n-1}\;—\;T_{n}$ we obtain a cycle, and any of the other wires in that cycle may be removed; removing the other wire touching $T_{n}$ gives us another tree, whose total cost is not greater than the original, and $T_{n-1}\;—\;T_{n}$ appears in that tree.

12. Keep two auxiliary tables, $a(i)$ and $b(i)$, for $1≤i\lt n$, representing the fact that the cheapest connection from $T_{i}$ to a chosen terminal is to $T_{b(i)}$, and its cost is $a(i)$; initially $a(i)=c(i,n)$ and $b(i)=n$. Then do the following operation $n-1$ times: Find i such that $a(i)=\min_{1≤j\lt n}a(j)$; connect $T_{i}$ to $T_{b(i)}$; for $1≤j\lt n$ if $c(i,j)\lt a(j)$ set $a(j)←c(i,j)$ and $b(j)←i$; and set $a(i)←\infty$. Here $c(i,j)$ means $c(j,i)$ when $j\lt i$.

(It is somewhat more efficient to avoid the use of ∞, keeping instead a oneway linked list of those j that have not yet been chosen. With or without this straightforward improvement, the algorithm takes $O(n^{2})$ operations.) See also E. W. Dijkstra, Proc. Nederl. Akad. Wetensch. A63 (1960), 196–199; D. E. Knuth, The Stanford GraphBase (New York: ACM Press, 1994), 460–497. Significantly better algorithms to find a minimum-cost spanning tree are discussed in Section 7.5.4.

13. If there is no path from $V_{i}$ to $V_{j}$, for some $i\neq j$, then no product of the transpositions will move i to j. So if all permutations are generated, the graph must be connected. Conversely if it is connected, remove edges if necessary until we have a free tree. Then renumber the vertices so that $V_{n}$ is adjacent to only one other vertex, namely $V_{n-1}$. (See the proof of Theorem A.) Now the transpositions other than $(n-1 n)$ form a free tree with $n-1$ vertices; so by induction if π is any permutation of $\{1,2,\ldots,n\}$ that leaves n fixed, π can be written as a product of those transpositions. If π moves n to j then $\pi(j\;\;n{-}1)(n{-}1\;\;n)=\rho$ fixes n; hence $\pi=\rho(n{-}1\;\;n)(j\;\;n{-}1)$ can be written as a product of the given transpositions.

1. Let $(e_{1},\ldots,e_{n})$ be an oriented walk of smallest possible length from V to $V'$. If now ${\rm init}(e_{j})={\rm init}(e_{k})$ for $j\lt k$, $(e_{1},\ldots,e_{j-1},e_{k},\ldots,e_{n})$ would be a shorter walk; a similar argument applies if ${\rm fin}(e_{j})={\rm fin}(e_{k})$ for $j\lt k$. Hence $(e_{1},\ldots,e_{n})$ is simple.

2. Those cycles in which all signs are the same: $C_{0}$, $C_{8}$, $C''_{13}$, $C_{17}$, $C''_{19}$, $C_{20}$.

3. For example, use three vertices A, B, C, with arcs from A to B and A to C.

4. If there are no oriented cycles, Algorithm 2.2.3T topologically sorts G. If there is an oriented cycle, topological sorting is clearly impossible. (Depending on how this exercise is interpreted, oriented cycles of length 1 could be excluded from consideration.)

5. Let k be the smallest integer such that ${\rm fin}(e_{k})={\rm init}(e_{j})$ for some $j≤k$. Then $(e_{j},\ldots,e_{k})$ is an oriented cycle.

6. False (on a technicality), just because there may be several different arcs from one vertex to another.

7. True for finite directed graphs: If we start at any vertex V and follow the only possible oriented path, we never encounter any vertex twice, so we must eventually reach the vertex R (the only vertex with no successor). For infinite directed graphs the result is obviously false since we might have vertices $R,V_{1},V_{2},V_{3},\ldots$ and arcs from $V_{j}$ to $V_{j+1}$ for $j\ge1$.

9. All arcs point upward.

10. G1. Set $k←P[j]$, $P[j]←0$.

G2. If $k=0$, stop; otherwise set $m←P[k]$, $P[k]←j$, $j←k$, $k←m$, and repeat step G2.

11. This algorithm combines Algorithm 2.3.3E with the method of the preceding exercise, so that all oriented trees have arcs that correspond to actual arcs in the directed graph; $S[j]$ is an auxiliary table that tells whether an arc goes from j to $P[j] (S[j]=+1)$ or from $P[j]$ to $j (S[j]=-1)$. Initially $P[1]=\cdots=P[n]=0$. The following steps may be used to process each arc $(a,b)$:

C1. Set $j←a$, $k←P[j]$, $P[j]←0$, $s←S[j]$.

C2. If $k=0$, go to C3; otherwise set $m←P[k]$, $t←S[k]$, $P[k]←j$, $S[k]←-s$, $s←t$, $j←k$, $k←m$, and repeat step C2.

C3. (Now a appears as the root of its tree.) Set $j←b$, and then if $P[j]\neq0$ repeatedly set $j←P[j]$ until $P[j]=0$.

C4. If $j=a$, go to C5; otherwise set $P[a]←b$, $S[a]←+1$, print $(a,b)$ as an arc belonging to the free subtree, and terminate.

C5. Print “CYCLE” followed by “$(a,b)$”.

C6. If $P[b]=0$ terminate. Otherwise if $S[b]=+1$, print “$+(b,P[b])$”, else print “$-(P[b],b)$”; set $b←P[b]$ and repeat step C6.

Note: This algorithm will take at most $O(m \log n)$ steps if we incorporate the suggestion of McIlroy in answer 2.3.3–10. But there is a much better solution that needs only $O(m)$ steps: Use depth-first search to construct a “palm tree,” with one fundamental cycle for each “frond” [R. E. Tarjan, SICOMP 1 (1972), 146–150].

12. It equals the in-degree; the out-degree of each vertex can be only 0 or 1.

13. Define a sequence of oriented subtrees of G as follows: $G_{0}$ is the vertex R alone. $G_{k+1}$ is $G_{k}$, plus any vertex V of G that is not in $G_{k}$ but for which there is an arc from V to $V'$ where $V'$ is in $G_{k}$, plus one such arc $e[V]$ for each such vertex. It is immediate by induction that $G_{k}$ is an oriented tree for all $k\ge0$, and that if there is an oriented path of length k from V to R in G then V is in $G_{k}$. Therefore $G_\infty$, the set of all V and $e[V]$ in any of the $G_{k}$, is the desired oriented subtree of G.

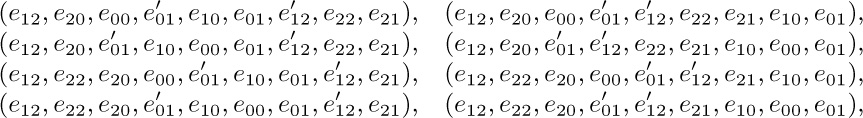

14.

in lexicographic order; the eight possibilities come from the independent choices of which of $e_{00}$ or $e'_{01}$, $e_{10}$ or $e'_{12}$, $e_{20}$ or $e_{22}$, should precede the other.

15. True for finite graphs: If it is connected and balanced and has more than one vertex, it has an Eulerian trail that touches all the vertices. (But false in general.)

16. Consider the directed graph G with vertices $V_{1},\ldots,V_{13}$ and with an arc from $V_{j}$ to $V_{k}$ for each k in pile j; this graph is balanced. Winning the game is equivalent to tracing out an Eulerian trail in G, because the game ends when the fourth arc to $V_{13}$ is encountered (namely, when the fourth king turns up). Now if the game is won, the stated digraph is an oriented subtree by Lemma E. Conversely if the stated digraph is an oriented tree, the game is won by Theorem D.

17. $\frac{1}{13}$. This answer can be obtained, as the author first obtained it, by laborious enumeration of oriented trees of special types and the application of generating functions, etc., based on the methods of Section 2.3.4.4. But such a simple answer deserves a simple, direct proof, and indeed there is one [see Tor B. Staver, Norsk Matematisk Tidsskrift 28 (1946), 88–89]. Define an order for turning up all cards of the deck, as follows: Obey the rules of the game until getting stuck, then “cheat” by turning up the first available card (find the first pile that is not empty, going clockwise from pile 1) and continue as before, until eventually all cards have been turned up. The cards in the order of turning up are in completely random order (since the value of a card need not be specified until after it is turned up). So the problem is just to calculate the probability that in a randomly shuffled deck the last card is a king. More generally the probability that k cards are still face down when the game is over is the probability that the last king in a random shuffle is followed by k cards, namely $4!\,\mybinomf{51-k}{3}\frac{48!}{52!}$. Hence a person playing this game without cheating will turn up an average of exactly 42.4 cards per game. Note: Similarly, it can be shown that the probability that the player will have to “cheat” k times in the process described above is exactly given by the Stirling number ${13\brack k+1}/13!$. (See Eq. 1.2.10–(9) and exercise 1.2.10–7; the case of a more general card deck is considered in exercise 1.2.10–18.)

18. (a) If there is a cycle $(V_{0},V_{1},\ldots,V_{k})$, where necessarily $3≤k≤n$, the sum of the k rows of A corresponding to the k edges of this cycle, with appropriate signs, is a row of zeros; so if G is not a free tree the determinant of $A_{0}$ is zero.

But if G is a free tree we may regard it as an ordered tree with root $V_{0}$, and we can rearrange the rows and columns of $A_{0}$ so that columns are in preorder and so that the kth row corresponds to the edge from the kth vertex (column) to its parent. Then the matrix is triangular with $±1\rm\unicode{39}s$ on the diagonal, so the determinant is $±1$.

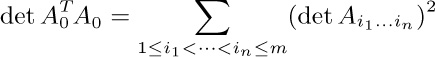

(b) By the Binet–Cauchy formula (exercise 1.2.3–46) we have

where $A_{\large i_{1}\ldots i_{n}}$ represents a matrix consisting of rows $i_{1},\ldots,i_{n}$ of $A_{0}$ (thus corresponding to a choice of n edges of G). The result now follows from (a).

[See S. Okada and R. Onodera, Bull. Yamagata Univ. 2 (1952), 89–117.]

19. (a) The conditions $a_{00}=0$ and $a_{jj}=1$ are just conditions (a), (b) of the definition of oriented tree. If G is not an oriented tree there is an oriented cycle (by exercise 7), and the rows of $A_{0}$ corresponding to the vertices in this oriented cycle will sum to a row of zeros; hence $\det A_{0}=0$. If G is an oriented tree, assign an arbitrary order to the children of each family and regard G as an ordered tree. Now permute rows and columns of $A_{0}$ until they correspond to preorder of the vertices. Since the same permutation has been applied to the rows as to the columns, the determinant is unchanged; and the resulting matrix is triangular with $+1$ in every diagonal position.

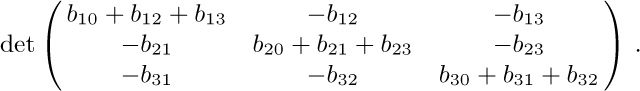

(b) We may assume that $a_{0j}=0$ for all j, since no arc emanating from $V_{0}$ can participate in an oriented subtree. We may also assume that $a_{jj}\gt0$ for all $j\ge1$ since otherwise the whole $j\,\rm th$ row is zero and there obviously are no oriented subtrees. Now use induction on the number of arcs: If $a_{jj}\gt1$ let e be some arc leading from $V_{j}$; let $B_{0}$ be a matrix like $A_{0}$ but with arc e deleted, and let $C_{0}$ be the matrix like $A_{0}$ but with all arcs except e that lead from $V_{j}$ deleted. Example: If $A_{0}=\mybinom{3&-2}{-1&2}$, $j=1$, and e is an arc from $V_{1}$ to $V_{0}$, then $B_{0}=\mybinom{2&-2}{-1&2}$, $C_{0}=\mybinom{1&0}{-1&2}$. In general we have $\det A_{0}=\det B_{0}+\det C_{0}$, since the matrices agree in all rows except row j, and $A_{0}$ is the sum of $B_{0}$ and $C_{0}$ in that row. Moreover, the number of oriented subtrees of G is the number of subtrees that do not use e (namely, $\det B_{0}$, by induction) plus the number that do use e (namely, $\det C_{0}$).

Notes: The matrix A is often called the Laplacian of the graph, by analogy with a similar concept in the theory of partial differential equations. If we delete any set S of rows from the matrix A, and the same set of columns, the determinant of the resulting matrix is the number of oriented forests whose roots are the vertices $\{V_{k}\mid k\in S\}$ and whose arcs belong to the given digraph. The matrix tree theorem for oriented trees was stated without proof by J. J. Sylvester in 1857 (see exercise 28), then forgotten for many years until it was independently rediscovered by W. T. Tutte [Proc. Cambridge Phil. Soc. 44 (1948), 463–482, §3]. The first published proof in the special case of undirected graphs, when the matrix A is symmetric, was given by C. W. Borchardt [Crelle 57 (1860), 111–121]. Several authors have ascribed the theorem to Kirchhoff, but Kirchhoff proved a quite different (though related) result.

20. Using exercise 18 we find $B=A^{T}_{0}A_{0}$. Or, using exercise 19, B is the matrix $A_{0}$ for the directed graph $G'$ with two arcs (one in each direction) in place of each edge of G; each free subtree of G corresponds uniquely to an oriented subtree of $G'$ with root $V_{0}$, since the directions of the arcs are determined by the choice of root.

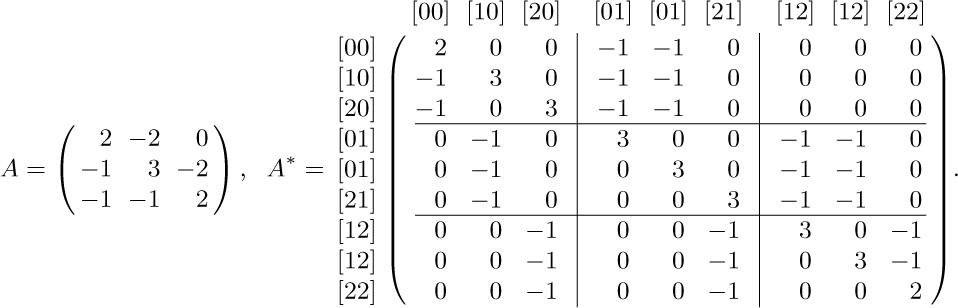

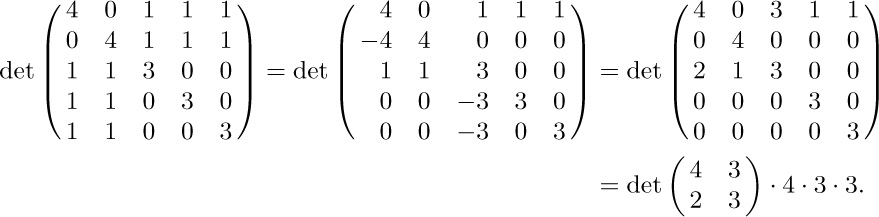

21. Construct the matrices A and $A^{*}$ as in exercise 19. For the example graphs G and $G^{*}$ in Figs. 36 and 37,

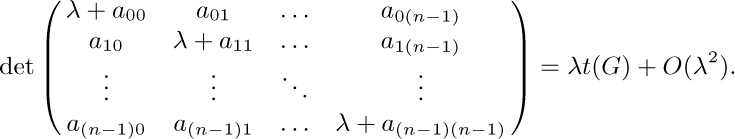

Add the indeterminate λ to every diagonal element of A and $A^{*}$. If $t(G)$ and $t(G^{*})$ are the numbers of oriented subtrees of G and $G^{*}$, we then have $\det A=\lambda t(G)+O(\lambda^{2})$, $\det A^{*}=\lambda t(G^{*})+O(\lambda^{2})$. (The number of oriented subtrees of a balanced graph is the same for any given root, by exercise 22, but we do not need that fact.)

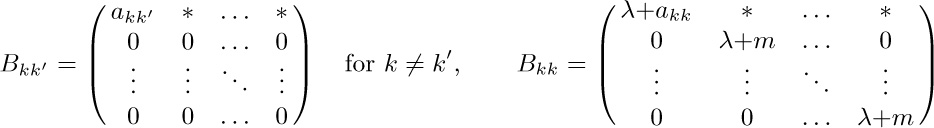

If we group vertices $V_{jk}$ for equal k the matrix $A^{*}$ can be partitioned as shown above. Let $B_{kk'}$ be the submatrix of $A^{*}$ consisting of the rows for $V_{jk}$ and the columns for $V_{j'k'}$, for all j and $j'$ such that $V_{jk}$ and $V_{j'k'}$ are in $G^{*}$. By adding the $2nd,\ldots,mth$ columns of each submatrix to the first column and then subtracting the first row of each submatrix from the $2nd,\ldots,mth$ rows, the matrix $A^{*}$ is transformed so that

The asterisks in the top rows of the transformed submatrices turn out to be irrelevant, because the determinant of $A^{*}$ is now seen to be $(\lambda+m)^{(m-1)n}$ times

Notice that when $n=1$ and there are m arcs from $V_{0}$ to itself, we find in particular that exactly $m^{m-1}$ oriented trees are possible on m labeled nodes. This result will be obtained by quite different methods in Section 2.3.4.4.

This derivation can be generalized to determine the number of oriented subtrees of $G^{*}$ when G is an arbitrary directed graph; see R. Dawson and I. J. Good, Ann. Math. Stat. 28 (1957), 946–956; D. E. Knuth, Journal of Combinatorial Theory 3 (1967), 309–314. An alternative, purely combinatorial proof has been given by J. B. Orlin, Journal of Combinatorial Theory B25 (1978), 187–198.

22. The total number is $(\sigma_{1}+\cdots+\sigma_{n})$ times the number of Eulerian trails starting with a given edge $e_{1}$, where ${\rm init}(e_{1})=V_{1}$. Each such trail determines an oriented subtree with root $V_{1}$ by Lemma E, and for each of the T oriented subtrees there are $\prod_{j=1}^{n}(\sigma_{j}-1)!$ walks satisfying the three conditions of Theorem D, corresponding to the different order in which the arcs $\{e\mid{\rm init}(e)=V_{j},e\neq e[V_{j}],e\neq e_{1}\}$ are entered into P. (Exercise 14 provides a simple example.)

23. Construct the directed graph $G_{k}$ with $m^{k-1}$ vertices as in the hint, and denote by $[x_{1},\ldots,x_{k}]$ the arc mentioned there. For each function that has maximum period length, we can define a unique corresponding Eulerian trail, by letting $f(x_{1},\ldots,x_{k})=x_{k+1}$ if arc $[x_{1},\ldots,x_{k}]$ is followed by $[x_{2},\ldots,x_{k+1}]$. (We regard Eulerian trails as being the same if one is just a cyclic permutation of the other.) Now $G_{k}=G^{*}_{k-1}$ in the sense of exercise 21, so $G_{k}$ has $m^{\large m^{k-1}-m^{k-2}}$ times as many oriented subtrees as $G_{k-1}$; by induction $G_{k}$ has $m^{\large m^{k-1}-1}$ oriented subtrees, and $m^{\large m^{k-1}-k}$ with a given root. Therefore by exercise 22 the number of functions with maximum period, namely the number of Eulerian trails of $G_{k}$ starting with a given arc, is $m^{-k}(m!)^{\large m^{k-1}}$. [For $m=2$ this result is due to C. Flye Sainte-Marie, L’Intermédiaire des Mathématiciens 1 (1894), 107–110.]

24. Define a new directed graph having $E_{j}$ copies of $e_{j}$, for $0≤j≤m$. This graph is balanced, hence it contains an Eulerian trail $(e_{0},\ldots)$ by Theorem G. The desired oriented walk comes by deleting the edge $e_{0}$ from this Eulerian trail.

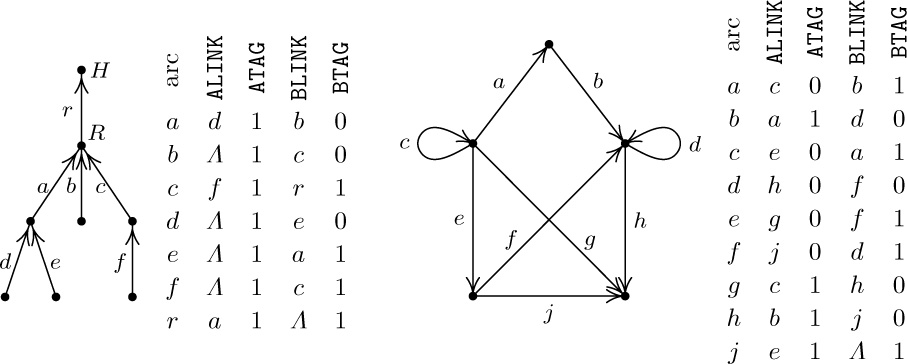

25. Assign an arbitrary order to all arcs in the sets $I_{j}=\{e\mid{\rm init}(e)=V_{j}\}$ and $F_{j}=\{e\mid{\rm fin}(e)=V_{j}\}$. For each arc e in $I_{j}$, let ${\tt ATAG}(e)=0$ and ${\tt ALINK}(e)=e'$ if $e'$ follows e in the ordering of $I_{j}$; also let ${\tt ATAG(e)}=1$ and ${\tt ALINK}(e)=e'$ if e is last in $I_{j}$ and $e'$ is first in $F_{j}$. Let ${\tt ALINK}(e)=\varLambda$ in the latter case if $F_{j}$ is empty. Define BLINK and BTAG by the same rules, reversing the roles of init and fin.

Examples (using alphabetic order in each set of arcs):

Note: If in the oriented tree representation we add another arc from H to itself, we get an interesting situation: Either we get the standard conventions 2.3.1–(8) with LLINK, LTAG, RLINK, RTAG interchanged in the list head, or (if the new arc is placed last in the ordering) we get the standard conventions except ${\tt RTAG}=0$ in the node associated with the root of the tree.

This exercise is based on an idea communicated to the author by W. C. Lynch. Can tree traversal algorithms like Algorithm 2.3.1S be generalized to classes of digraphs that are not oriented trees, using such a representation?

27. Let $a_{ij}$ be the sum of $p(e)$ over all arcs e from $V_{i}$ to $V_{j}$. We are to prove that $t_{j}=∑_{i}a_{ij}t_{i}$ for all j. Since $∑_{i}a_{ji}=1$, we must prove that $∑_{i}a_{ji}t_{j}=∑_{i}a_{ij}t_{i}$. But this is not difficult, because both sides of the equation represent the sum of all products $p(e_{1})\ldots p(e_{n})$ taken over subgraphs $\{e_{1},\ldots,e_{n}\}$ of G such that ${\rm init}(e_{i})=V_{i}$ and such that there is a unique oriented cycle contained in $\{e_{1},\ldots,e_{n}\}$, where this cycle includes $V_{j}$. Removing any arc of the cycle yields an oriented tree; the left-hand side of the equation is obtained by factoring out the arcs that leave $V_{j}$, while the right-hand side corresponds to those that enter $V_{j}$.

In a sense, this exercise is a combination of exercises 19 and 26.

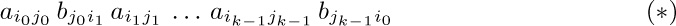

28. Every term in the expansion is $\large a_{1p_{1}}\ldots a_{mp_{m}}b_{1q_{1}}\ldots b_{nq_{n}}$, where $0≤p_{i}≤n$ for $1≤i≤m$ and $0≤q_{j}≤m$ for $1≤j≤n$, times some integer coefficient. Represent this product as a directed graph on the vertices $\{0,u_{1},\ldots,u_{m},v_{1},\ldots,v_{n}\}$, with arcs from $u_{i}$ to $v_{pi}$ and from $v_{j}$ to $u_{qj}$, where $u_{0}=v_{0}=0$.

If the digraph contains a cycle, the integer coefficient is zero. For each cycle corresponds to a factor of the form

where the indices $(i_{0},i_{1},\ldots,i_{k-1})$ are distinct and so are the indices $(j_{0},j_{1},\ldots,j_{k-1})$. The sum of all terms containing $(*)$ as a factor is $(*)$ times the determinant obtained by setting $\large a_{i_{l}j}←[j=j_{l}]$ for $0≤j≤n$ and $\large b_{j_{l}i}←[i=i_{(l+1)\bmod k}]$ for $0≤i≤m$, for $0≤l\lt k$, leaving the variables in the other $m+n-2k$ rows unchanged. This determinant is identically zero, because the sum of rows $i_{0},i_{1},\ldots,i_{k-1}$ in the top section equals the sum of rows $j_{0},j_{1},\ldots,j_{k-1}$ in the bottom section.

On the other hand, if the directed graph contains no cycles, the integer coefficient is $+1$. This follows because each factor $\large a_{ip_{i}}$ and $\large b_{jq_{j}}$ must have come from the diagonal of the determinant: If any off-diagonal element $\large a_{i_{0}j_{0}}$ is chosen in row $i_{0}$ of the top section, we must choose some off-diagonal $\large b_{j_{0}i_{1}}$ from row $j_{0}$ of the bottom section, hence we must choose some off-diagonal $\large a_{i_{1}j_{1}}$ from row $i_{1}$ of the top section, etc., forcing a cycle.

Thus the coefficient is $+1$ if and only if the corresponding digraph is an oriented tree with root 0. The number of such terms (hence the number of such oriented trees) is obtained by setting each $a_{ij}$ and $b_{ji}$ to 1; for example,

In general we obtain $\det\mybinomf{n+1&n}{m&m+1}\cdot(n+1)^{m-1}\cdot(m+1)^{n-1}$.

Notes: J. J. Sylvester considered the special case $m=n$ and $a_{10}=a_{20}=\cdots=a_{m0}=0$ in Quarterly J. of Pure and Applied Math. 1 (1857), 42–56, where he conjectured (correctly) that the total number of terms is then $n^{n}(n+1)^{n-1}$. He also stated without proof that the $(n+1)^{n-1}$ nonzero terms present when $a_{ij}=\delta_{ij}$ correspond to all connected cycle-free graphs on $\{0,1,\ldots,n\}$. In that special case, he reduced the determinant to the form in the matrix tree theorem of exercise 19, e.g.,

Cayley quoted this result in Crelle 52 (1856), 279, ascribing it to Sylvester; thus it is ironic that the theorem about the number of such graphs is often attributed to Cayley.

By negating the first m rows of the given determinant, then negating the first m columns, we can reduce this exercise to the matrix tree theorem.

[Matrices having the general form considered in this exercise are important in iterative methods for the solution of partial differential equations, and they are said to have “Property A.” See, for example, Louis A. Hageman and David M. Young, Applied Iterative Methods (Academic Press, 1981), Chapter 9.]

1. The root is the empty sequence; arcs go from $(x_{1},\ldots,x_{n})$ to $(x_{1},\ldots,x_{n-1})$.

2. Take one tetrad type and rotate it $180^\circ$ to get another tetrad type; these two types clearly tile the plane (without further rotations), by repeating a $2\times2$ pattern.

3. Consider the set of tetrad types  for all positive integers j. The right half plane can be tiled in uncountably many ways; but whatever square is placed in the center of the plane puts a finite limit on the distance it can be continued to the left.

for all positive integers j. The right half plane can be tiled in uncountably many ways; but whatever square is placed in the center of the plane puts a finite limit on the distance it can be continued to the left.

4. Systematically enumerate all possible ways to tile an $n\times n$ block, for $n=1,2,\ldots$, looking for toroidal solutions within these blocks. If there is no way to tile the plane, the infinity lemma tells us there is an n with no $n\times n$ solutions. If there is a way to tile the plane, the assumption tells us that there is an n with an $n\times n$ solution containing a rectangle that yields a toroidal solution. Hence in either case the algorithm will terminate.

[But the stated assumption is false, as shown in the next exercise; and in fact there is no algorithm that will determine in a finite number of steps whether or not there exists a way to tile the plane with a given set of types. On the other hand, if such a tiling does exist, there is always a tiling that is quasitoroidal, in the sense that each of its $n\times n$ blocks occurs at least once in every $f(n)\times f(n)$ block, for some function f. See B. Durand, Theoretical Computer Science 221 (1999), 61–75.]

5. Start by noticing that we need classes $\scriptstyle\begin{myarray1}\alpha&\beta\\\gamma&\delta\end{myarray1}$ replicated in $2\times2$ groups in any solution. Then, step 1: Considering just the α squares, show that the pattern $\scriptstyle\begin{myarray1}a&b\\c&d\end{myarray1}$ must be replicated in $2\times2$ groups of α squares. Step $n\gt1$: Determine a pattern that must appear in a cross-shaped region of height and width $2^{n}-1$. The middle of the crosses has the pattern $\scriptstyle\begin{myarray1}Na&Nb\\Nc&Nd\end{myarray1}$ replicated throughout the plane.

For example, after step 3 we will know the contents of $7\times7$ blocks throughout the plane, separated by unit length strips, every eight units. The $7\times7$ blocks that are of class $Na$ in the center have the form

The middle column and the middle row is the “cross” just filled in during step 3; the other four $3\times3$ squares were filled in after step 2; the squares just to the right and below this $7\times7$ square are part of a $15\times15$ cross to be filled in at step 4.

For a similar construction that leads to a set of only 35 tetrad types having nothing but nontoroidal solutions, see R. M. Robinson, Inventiones Math. 12 (1971), 177–209. Robinson also exhibits a set of six squarish shapes that tile the plane only nontoroidally, even when rotations and reflections are allowed. In 1974, Roger Penrose discovered a set of only two polygons, based on the golden ratio instead of a square grid, that tile the plane only aperiodically; this led to a set of only 16 tetrad types with only nontoroidal solutions [see B. Grünbaum and G. C. Shephard, Tilings and Patterns (Freeman, 1987), Chapters 10–11; Martin Gardner, Penrose Tiles to Trapdoor Ciphers (Freeman, 1989), Chapters 1–2].

6. Let k and m be fixed. Consider an oriented tree whose vertices each represent, for some n, one of the partitions of $\{1,\ldots,n\}$ into k parts, containing no arithmetic progression of length m. A node that partitions $\{1,\ldots,n+1\}$ is a child of one for $\{1,\ldots,n\}$ if the two partitions agree on $\{1,\ldots,n\}$. If there were an infinite path to the root we would have a way to divide all integers into k sets with no arithmetic progression of length m. Hence, by the infinity lemma and van der Waerden’s theorem, this tree is finite. (If $k=2$, $m=3$, the tree can be rapidly calculated by hand, and the least value of N is 9. See Studies in Pure Mathematics, ed. by L. Mirsky (Academic Press, 1971), 251–260, for van der Waerden’s interesting account of how the proof of his theorem was discovered.)

7. The positive integers can be partitioned into two sets $S_{0}$ and $S_{1}$ such that neither set contains any infinite computable sequence (see exercise 3.5–32). So in particular there is no infinite arithmetic progression. Theorem K does not apply because there is no way to put partial solutions into a tree with finite degrees at each vertex.

8. Let a “counterexample sequence” be an infinite sequence of trees that violates Kruskal’s theorem, if such sequences exist. Assume that the theorem is false; then let $T_{1}$ be a tree with the smallest possible number of nodes such that $T_{1}$ can be the first tree in a counterexample sequence; if $T_{1},\ldots,T_{j}$ have been chosen, let $T_{j+1}$ be a tree with the smallest possible number of nodes such that $T_{1},\ldots,T_{j},T_{j+1}$ is the beginning of a counterexample sequence. This process defines a counterexample sequence $\langle T_{n}\rangle$. None of these $T\rm\unicode{39}s$ is just a root. Now, we look at this sequence very carefully:

(a) Suppose there is a subsequence $T_{n_{1}},T_{n_{2}},\ldots$ for which $l(T_{n_{1}}),l(T_{n_{2}}),\ldots$ is a counterexample sequence. This is impossible; otherwise $T_{1},\ldots,T_{n_{1}-1},l(T_{n_{1}}),l(T_{n_{2}}),\ldots$ would be a counterexample sequence, contradicting the definition of $T_{n_{1}}$.

(b) Because of (a), there are only finitely many j for which $l(T_{j})$ cannot be embedded in $l(T_{k})$ for any $k\gt j$. Therefore by taking $n_{1}$ larger than any such j we can find a subsequence for which $l(T_{n_{1}})\subseteq l(T_{n_{2}})\subseteq l(T_{n_{3}})\subseteq\cdots$.

(c) Now by the result of exercise 2.3.2–22, $r(T_{n_{j}})$ cannot be embedded in $r(T_{n_{k}})$ for any $k\gt j$, else $T_{n_{j}}\subseteq T_{n_{k}}$. Therefore $T_{1},\ldots,T_{n_{1}-1},r(T_{n_{1}}),r(T_{n_{2}}),\ldots$ is a counterexample sequence. But this contradicts the definition of $T_{n_{1}}$.

Notes: Kruskal, in Trans. Amer. Math. Soc. 95 (1960), 210–225, actually proved a stronger result, using a weaker notion of embedding. His theorem does not follow directly from the infinity lemma, although the results are vaguely similar. Indeed, K nig himself proved a special case of Kruskal’s theorem, showing that there is no infinite sequence of pairwise incomparable n-tuples of nonnegative integers, where comparability means that all components of one n-tuple are $≤$ the corresponding components of the other [Matematikai és Fizikai Lapok 39 (1932), 27–29]. For further developments, see J. Combinatorial Theory A13 (1972), 297–305. See also N. Dershowitz, Inf. Proc. Letters 9 (1979), 212–215, for applications to termination of algorithms.

nig himself proved a special case of Kruskal’s theorem, showing that there is no infinite sequence of pairwise incomparable n-tuples of nonnegative integers, where comparability means that all components of one n-tuple are $≤$ the corresponding components of the other [Matematikai és Fizikai Lapok 39 (1932), 27–29]. For further developments, see J. Combinatorial Theory A13 (1972), 297–305. See also N. Dershowitz, Inf. Proc. Letters 9 (1979), 212–215, for applications to termination of algorithms.

1. $\ln A(z)=\ln z+∑_{k\ge1}a_{k}\ln\left(\frac{1}{1-z^{k}}\right)=$ $\ln z+∑_{k,t\ge1}\frac{a_{k}z^{kt}}{t}=\ln z+∑_{t\ge1}\frac{A(z^{t})}{t}$

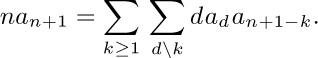

2. By differentiation, and equating the coefficients of $z^{n}$, we obtain the identity

Now interchange the order of summation.

4. (a) $A(z)$ certainly converges at least for $|z|\lt\frac{1}{4}$, since $a_{n}$ is less than the number of ordered trees $b_{n-1}$. Since $A(1)$ is infinite and all coefficients are positive, there is a positive number $\alpha≤1$ such that $A(z)$ converges for $|z|\lt\alpha$, and there is a singularity at $z=\alpha$. Let $\psi(z)=A(z)/z$; since $\psi(z)\gt e^{z\psi(z)}$, we see that $\psi(z)=m$ implies $z\lt\ln m/m$, so $\psi(z)$ is bounded and $\lim_{z→\alpha-}\psi(z)$ exists. Thus $\alpha\lt1$, and by Abel’s limit theorem $a=\alpha\cdot\exp\left(a+\frac{1}{2}A(\alpha^{2})+\frac{1}{3}A(\alpha^{3})+\cdots\right)$.

(b) $A(z^{2}),A(z^{3}),\ldots$ are analytic for $|z|\lt\sqrt\alpha$, and $\frac{1}{2}A(z^{2})+\frac{1}{3}A(z^{3})+\cdots$ converges uniformly in a slightly smaller disk.

(c) If $\partial F/\partial w=a-1\neq0$, the implicit function theorem implies that there is an analytic function $f(z)$ in a neighborhood of $(\alpha,a/\alpha)$ such that $F(z,f(z))=0$. But this implies $f(z)=A(z)/z$, contradicting the fact that $A(z)$ is singular at α.

(d) Obvious.

(e) $\partial F/\partial w=A(z)-1$ and $|A(z)|\lt A(\alpha)=1$, since the coefficients of $A(z)$ are all positive. Hence, as in (c), $A(z)$ is regular at all such points.

(f) Near $(\alpha,1/\alpha)$ we have the identity $0=\beta(z-\alpha)+(\alpha/2)(w-1/\alpha)^{2}+$ higher order terms, where $w=A(z)/z$; so w is an analytic function of $\sqrt{z-\alpha}$ here by the implicit function theorem. Consequently there is a region $|z|\lt\alpha_{1}$ minus a cut $[\alpha,\alpha_{1}]$ in which $A(z)$ has the stated form. (The minus sign is chosen since a plus sign would make the coefficients ultimately negative.)

(g) Any function of the stated form has coefficient asymptotically $\displaystyle\frac{\sqrt{2\beta}}{\alpha^{n}}{\binom{1/2}{n}}$.

Note that

For further details, and asymptotic values of the number of free trees, see R. Otter, Ann. Math. (2) 49 (1948), 583–599.

5.

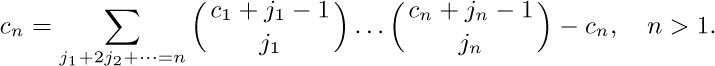

Therefore

We find $C(z)=z+z^{2}+2z^{3}+5z^{4}+12z^{5}+33z^{6}+90z^{7}+261z^{8}+766z^{9}+\cdots$. When $n\gt1$, the number of series-parallel networks with n edges is $2c_{n}$ [see P. A. MacMahon, Proc. London Math. Soc. 22 (1891), 330–339].

6. $zG(z)^{2}=2G(z)-2-zG(z^{2})$; $G(z)=1+z+z^{2}+2z^{3}+3z^{4}+6z^{5}+11z^{6}+23z^{7}+46z^{8}+98z^{9}+\cdots$. The function $F(z)=1-zG(z)$ satisfies the simpler relation $F(z^{2})=2z+F(z)^{2}$. [J. H. M. Wedderburn, Annals of Math. (2) 24 (1922), 121–140.]

7. $g_{n}=ca^{n}n^{-3/2}(1+O(1/n))$, where $c\approx0.7916031835775$, $a\approx2.483253536173$.

8.

9. If there are two centroids, by considering a path from one to the other we find that there can’t be intermediate points, so any two centroids are adjacent. A tree cannot contain three mutually adjacent vertices, so there are at most two.

10. If X and Y are adjacent, let $s(X,Y)$ be the number of vertices in the Y subtree of X. Then $s(X,Y)+s(Y,X)=n$. The argument in the text shows that if Y is a centroid, ${\rm weight}(X)=s(X,Y)$. Therefore if both X and Y are centroids, ${\rm weight}(X)={\rm weight}(Y)=n/2$.

In terms of this notation, the argument in the text goes on to show that if $s(X,Y)\ge s(Y,X)$, there is a centroid in the Y subtree of X. So if two free trees with m vertices are joined by an edge between X and Y, we obtain a free tree in which $s(X,Y)=m=s(Y,X)$, and there must be two centroids (namely X and Y).

[It is a nice programming exercise to compute $s(X,Y)$ for all adjacent X and Y in $O(n)$ steps; from this information we can quickly find the centroid(s). An efficient algorithm for centroid location was first given by A. J. Goldman, Transportation Sci. 5 (1971), 212–221.]

11. $zT(z)^{t}=T(z)-1$; thus $z+T(z)^{-t}=T(z)^{1-t}$. By Eq. 1.2.9–(21), $T(z)=∑_{n}A_{n}(1,-t)z^{n}$, so the number of t-ary trees is

12. Consider the directed graph that has one arc from $V_{i}$ to $V_{j}$ for all $i\neq j$. The matrix $A_{0}$ of exercise 2.3.4.2–19 is a combinatorial $(n-1)\times(n-1)$ matrix with $n-1$ on the diagonal and $-1$ off the diagonal. So its determinant is

$(n+(n-1)(-1))n^{n-2}=n^{n-2}$,

the number of oriented trees with a given root. (Exercise 2.3.4.2–20 could also be used.)

13.

14. True, since the root will not become a leaf until all other branches have been removed.

15. In the canonical representation, $V_{1},V_{2},\ldots,V_{n-1},f(V_{n-1})$ is a topological sort of the oriented tree considered as a directed graph, but this order would not in general be output by Algorithm 2.2.3T. Algorithm 2.2.3T can be changed so that it determines the values of $V_{1},V_{2},\ldots,V_{n-1}$ if the “insert into queue” operation of step T6 is replaced by a procedure that adjusts links so that the entries of the list appear in ascending order from front to rear; then the queue becomes a priority queue.

(However, a general priority queue isn’t needed to find the canonical representation; we only need to sweep through the vertices from 1 to n, looking for leaves, while pruning off paths from new leaves less than the sweep pointer; see the following exercise.)