I think that I shall never see

A poem lovely as a tree.

— JOYCE KILMER (1913)

Yea, from the table of my memory

I’ll wipe away all trivial fond records.

— HAMLET (Act I, Scene 5, Line 98)

COMPUTER PROGRAMS usually operate on tables of information. In most cases these tables are not simply amorphous masses of numerical values; they involve important structural relationships between the data elements.

In its simplest form, a table might be a linear list of elements, in which case its relevant structural properties might include the answers to such questions as: Which element is first in the list? Which is last? Which elements precede and follow a given one? How many elements are in the list? A lot can be said about structure even in this apparently simple case (see Section 2.2).

In more complicated situations, the table might be a two-dimensional array (a matrix or grid, having both a row and a column structure), or it might be an n-dimensional array for higher values of n; it might be a tree structure, representing hierarchical or branching relationships; or it might be a complex multilinked structure with a great many interconnections, such as we may find in a human brain.

In order to use a computer properly, we need to understand the structural relationships present within data, as well as the basic techniques for representing and manipulating such structure within a computer.

The present chapter summarizes the most important facts about information structures: the static and dynamic properties of different kinds of structure; means for storage allocation and representation of structured data; and efficient algorithms for creating, altering, accessing, and destroying structural information. In the course of this study, we will also work out several important examples that illustrate the application of such methods to a wide variety of problems. The examples include topological sorting, polynomial arithmetic, discrete system simulation, sparse matrix transformation, algebraic formula manipulation, and applications to the writing of compilers and operating systems. Our concern will be almost entirely with structure as represented inside a computer; the conversion from external to internal representations is the subject of Chapters 9 and 10.

Much of the material we will discuss is often called “List processing,” since a number of programming systems such as LISP have been designed to facilitate working with general kinds of structures called Lists. (When the word “list” is capitalized in this chapter, it is being used in a technical sense to denote a particular type of structure that is highlighted in Section 2.3.5.) Although List processing systems are useful in a large number of situations, they impose constraints on the programmer that are often unnecessary; it is usually better to use the methods of this chapter directly in one’s own programs, tailoring the data format and the processing algorithms to the particular application. Many people unfortunately still feel that List processing techniques are quite complicated (so that it is necessary to use someone else’s carefully written interpretive system or a prefabricated set of subroutines), and that List processing must be done only in a certain fixed way. We will see that there is nothing magic, mysterious, or difficult about the methods for dealing with complex structures; these techniques are an important part of every programmer’s repertoire, and we can use them easily whether we are writing a program in assembly language or in an algebraic language like FORTRAN, C, or Java.

We will illustrate methods of dealing with information structures in terms of the MIX computer. A reader who does not care to look through detailed MIX programs should at least study the ways in which structural information is represented in MIX’s memory.

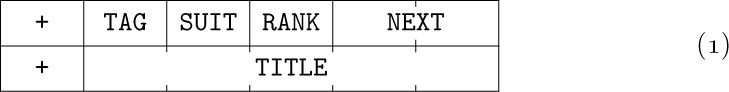

It is important at this point to define several terms and notations that we will be using frequently from now on. The information in a table consists of a set of nodes (called “records,” “entities,” or “beads” by some authors); we will occasionally say “item” or “element” instead of “node.” Each node consists of one or more consecutive words of the computer memory, divided into named parts called fields. In the simplest case, a node is just one word of memory, and it has just one field comprising that whole word. As a more interesting example, suppose the elements of our table are intended to represent playing cards; we might have two-word nodes broken into five fields, TAG, SUIT, RANK, NEXT, and TITLE:

(This format reflects the contents of two MIX words. Recall that a MIX word consists of five bytes and a sign; see Section 1.3.1. In this example we assume that the signs are + in each word.) The address of a node, also called a link, pointer, or reference to that node, is the memory location of its first word. The address is often taken relative to some base location, but in this chapter for simplicity we will take the address to be an absolute memory location.

The contents of any field within a node may represent numbers, alphabetic characters, links, or anything else the programmer may desire. In connection with the example above, we might wish to represent a pile of cards that might appear in a game of solitaire: TAG = 1 means that the card is face down, TAG = 0 means that it is face up; SUIT = 1, 2, 3, or 4 for clubs, diamonds, hearts, or spades, respectively; RANK = 1, 2, . . ., 13 for ace, deuce, . . ., king; NEXT is a link to the card below this one in the pile; and TITLE is a five-character alphabetic name of this card, for use in printouts. A typical pile might look like this:

The memory locations in the computer representation are shown here as 100, 386, and 242; they could have been any other numbers as far as this example is concerned, since each card links to the one below it. Notice the special link “Λ” in node 100; we use the capital Greek letter Lambda to denote the null link, the link to no node. The null link Λ appears in node 100 since the 10 of clubs is the bottom card of the pile. Within the machine, Λ is represented by some easily recognizable value that cannot be the address of a node. We will generally assume that no node appears in location 0; consequently, Λ will almost always be represented as the link value 0 in MIX programs.
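
The node-and-link picture described above can be sketched in Python. This is only an illustrative model, not MIX code: `memory`, `Card`, and `pile_addresses` are hypothetical names, the null link Λ is taken to be 0 as in the text, and the two upper cards (and every TAG value) are assumptions chosen for illustration, since only the 10 of clubs at location 100 is described in the prose.

```python
from dataclasses import dataclass

LAMBDA = 0  # null link; assumes no node is ever stored at location 0


@dataclass
class Card:
    tag: int    # 1 = face down, 0 = face up
    suit: int   # 1, 2, 3, 4 = clubs, diamonds, hearts, spades
    rank: int   # 1 .. 13 = ace .. king
    next: int   # address of the card below this one, or LAMBDA
    title: str  # five-character name for printouts


# Hypothetical memory: maps an address to the node stored there.
# Only the bottom card (10 of clubs at 100) comes from the text;
# the other two cards are assumed for the sake of the example.
memory = {
    100: Card(tag=1, suit=1, rank=10, next=LAMBDA, title=" 10 C"),
    386: Card(tag=0, suit=4, rank=3, next=100, title="  3 S"),
    242: Card(tag=0, suit=2, rank=2, next=386, title="  2 D"),
}
TOP = 242  # link variable whose value is the address of the top card


def pile_addresses(top):
    """Follow NEXT links from the top card down to the null link."""
    addresses = []
    x = top
    while x != LAMBDA:
        addresses.append(x)
        x = memory[x].next
    return addresses
```

In this model the field reference RANK(NEXT(TOP)) corresponds to `memory[memory[TOP].next].rank`, and the addresses themselves could be any distinct nonzero numbers.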

The introduction of links to other elements of data is an extremely important idea in computer programming; links are the key to the representation of complex structures. When displaying computer representations of nodes it is usually convenient to represent links by arrows, so that example (2) would appear thus:

The actual locations 242, 386, and 100 (which are irrelevant anyway) no longer appear in representation (3). Electrical circuit notation for a “grounded” wire is used to indicate a null link, shown here at the right of the diagram. Notice also that (3) indicates the top card by an arrow from “TOP”; here TOP is a link variable, often called a pointer variable, namely a variable whose value is a link. All references to nodes in a program are made directly through link variables (or link constants), or indirectly through link fields in other nodes.

Now we come to the most important part of the notation, the means of referring to fields within nodes. This is done simply by giving the name of the field followed by a link to the desired node in parentheses; for example, in (2) and (3) with the fields of (1) we have

The reader should study these examples carefully, since such field notations will be used in many algorithms of this chapter and the following chapters. To make the ideas clearer, we will now state a simple algorithm for placing a new card face up on top of the pile, assuming that NEWCARD is a link variable whose value is a link to the new card:

A1. Set NEXT(NEWCARD) ← TOP. (This puts the appropriate link into the new card node.)

A2. Set TOP ← NEWCARD. (This keeps TOP pointing to the top of the pile.)

A3. Set TAG(TOP) ← 0. (This marks the card as “face up.”)
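
Steps A1 through A3 can be sketched in Python as follows. The dictionary `memory`, the function name `place_on_top`, and the address 500 for the new card are assumptions made for illustration; fields are modeled as dictionary keys rather than as parts of MIX words.

```python
LAMBDA = 0  # null link


def place_on_top(memory, top, newcard):
    """Algorithm A: place the node at address newcard face up on the pile."""
    memory[newcard]["NEXT"] = top  # A1. NEXT(NEWCARD) <- TOP
    top = newcard                  # A2. TOP <- NEWCARD
    memory[top]["TAG"] = 0         # A3. TAG(TOP) <- 0 (face up)
    return top                     # the caller stores this back into TOP


memory = {
    100: {"TAG": 1, "NEXT": LAMBDA},  # existing bottom card
    500: {"TAG": 1, "NEXT": LAMBDA},  # new card at a hypothetical address
}
TOP = place_on_top(memory, 100, 500)
```

Because Python passes the link by value, the sketch returns the new value of TOP instead of updating a shared variable; the order of the three steps is otherwise the same as in the algorithm.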

Another example is the following algorithm, which counts the number of cards currently in the pile:

B1. Set N ← 0, X ← TOP. (Here N is an integer variable, X is a link variable.)

B2. If X = Λ, stop; N is the number of cards in the pile.

B3. Set N ← N + 1, X ← NEXT(X), and go back to step B2.
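
Steps B1 through B3 translate almost line for line into a loop. In this sketch the NEXT field is modeled by a hypothetical dictionary `next_field` from addresses to links, and the three addresses are those of the pile discussed above.

```python
LAMBDA = 0  # null link


def count_cards(next_field, top):
    """Algorithm B: count the nodes reachable from top by NEXT links."""
    n, x = 0, top            # B1. N <- 0, X <- TOP
    while x != LAMBDA:       # B2. stop when X = LAMBDA
        n += 1               # B3. N <- N + 1,
        x = next_field[x]    #     X <- NEXT(X)
    return n


next_field = {242: 386, 386: 100, 100: LAMBDA}
```

Here `count_cards(next_field, 242)` walks the three nodes of the example pile, and an empty pile (TOP = Λ) gives a count of zero without entering the loop.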

Notice that we are using symbolic names for two quite different things in these algorithms: as names of variables (TOP, NEWCARD, N, X) and as names of fields (TAG, NEXT). These two usages must not be confused. If F is a field name and L ≠ Λ is a link, then F(L) is a variable; but F itself is not a variable — it does not possess a value unless it is qualified by a nonnull link.

Two further notations are used, to convert between addresses and the values stored there, when we are discussing low-level machine details:

a) CONTENTS always denotes a full-word field of a one-word node. Thus CONTENTS(1000) denotes the value stored in memory location 1000; it is a variable having this value. If V is a link variable, CONTENTS(V) denotes the value pointed to by V (not the value V itself).

b) If V is the name of some value held in a memory cell, LOC(V) denotes the address of that cell. Consequently, if V is a variable whose value is stored in a full word of memory, we have CONTENTS(LOC(V)) = V.
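
The relation between CONTENTS and LOC can be imitated in Python, with the caveat that Python has no machine addresses. The sketch below therefore assumes a hypothetical word-addressed `memory` list and a hypothetical symbol table `symbols` that maps a variable name to the cell holding its value.

```python
memory = [0] * 2000      # hypothetical memory of 2000 words
symbols = {"V": 1000}    # hypothetical symbol table: name -> address


def LOC(name):
    """Address of the memory cell that holds the named variable."""
    return symbols[name]


def CONTENTS(address):
    """Value stored in the full word at the given address."""
    return memory[address]


memory[1000] = 1234      # the cell named V holds 1234, so V = 1234
memory[1234] = 77        # the word that V points to, if V is used as a link

v = CONTENTS(LOC("V"))   # the value of V itself
pointed_to = CONTENTS(v) # CONTENTS(V): the value V points to, not V
```

The two final lines show the distinction drawn in (a): `v` is the value of the variable, while `pointed_to` is what lies at the address stored in it.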

It is easy to transform this notation into MIXAL assembly language code, although MIXAL’s notation is somewhat backwards. The values of link variables are put into index registers, and the partial-field capability of MIX is used to refer to the desired field. For example, Algorithm A above could be written thus:

The ease and efficiency with which these operations can be carried out in a computer is the primary reason for the importance of the “linked memory” concept.

Sometimes we have a single variable that denotes a whole node; its value is a sequence of fields instead of just one field. Thus we might write

CARD ← NODE(TOP), (6)

where NODE is a field specification just like CONTENTS, except that it refers to an entire node, and where CARD is a variable that assumes structured values like those in (1). If there are c words in a node, the notation (6) is an abbreviation for the c low-level assignments

CONTENTS(LOC(CARD) + j) ← CONTENTS(TOP + j), 0 ≤ j < c. (7)

There is an important distinction between assembly language and the notation used in algorithms. Since assembly language is close to the machine’s internal language, the symbols used in MIXAL programs stand for addresses instead of values. Thus in the left-hand columns of (5), the symbol TOP actually denotes the address where the pointer to the top card appears in memory; but in (6) and (7) and in the remarks at the right of (5), it denotes the value of TOP, namely the address of the top card node. This difference between assembly language and higher-level language is a frequent source of confusion for beginning programmers, so the reader is urged to work exercise 7. The other exercises also provide useful drills on the notational conventions introduced in this section.

Exercises

1. [04] In the situation depicted in (3), what is the value of (a) SUIT(NEXT(TOP)); (b) NEXT(NEXT(NEXT(TOP))) ?

2. [10] The text points out that in many cases CONTENTS(LOC(V)) = V. Under what conditions do we have LOC(CONTENTS(V)) = V?

3. [11] Give an algorithm that essentially undoes the effect of Algorithm A: It removes the top card of the pile (if the pile is not empty) and sets NEWCARD to the address of this card.

4. [18] Give an algorithm analogous to Algorithm A, except that it puts the new card face down at the bottom of the pile. (The pile may be empty.)

5. [21] Give an algorithm that essentially undoes the effect of exercise 4: Assuming that the pile is not empty and that its bottom card is face down, your algorithm should remove the bottom card and make NEWCARD link to it. (This algorithm is sometimes called “cheating” in solitaire games.)

6. [06] In the playing card example, suppose that CARD is the name of a variable whose value is an entire node as in (6). The operation CARD ← NODE(TOP) sets the fields of CARD respectively equal to those of the top of the pile. After this operation, which of the following notations stands for the suit of the top card? (a) SUIT(CARD); (b) SUIT(LOC(CARD)); (c) SUIT(CONTENTS(CARD)); (d) SUIT(TOP) ?

7. [04] In the text’s example MIX program, (5), the link variable TOP is stored in the MIX computer word whose assembly language name is TOP. Given the field structure (1), which of the following sequences of code brings the quantity NEXT(TOP) into register A? Explain why the other sequence is incorrect.

a) LDA TOP(NEXT)

b) LD1 TOP

LDA 0,1(NEXT)

8. [18] Write a MIX program corresponding to steps B1–B3.

9. [23] Write a MIX program that prints out the alphabetic names of the current contents of the card pile, starting at the top card, with one card per line, and with parentheses around cards that are face down.

DATA USUALLY has much more structural information than we actually want to represent directly in a computer. For example, each “playing card” node in the preceding section had a NEXT field to specify what card was beneath it in the pile, but we provided no direct way to find what card, if any, was above a given card, or to find what pile a given card was in. And of course we totally suppressed most of the characteristic features of real playing cards: the details of the design on the back, the relation to other objects in the room where the game was being played, the individual molecules within the cards, etc. It is conceivable that such structural information would be relevant in certain computer applications, but obviously we never want to store all of the structure that is present in every situation. Indeed, for most card-playing situations we would not need all of the facts retained in the earlier example; the TAG field, which tells whether a card is face up or face down, will often be unnecessary.

We must decide in each case how much structure to represent in our tables, and how accessible to make each piece of information. To make such decisions, we need to know what operations are to be performed on the data. For each problem considered in this chapter, therefore, we consider not only the data structure but also the class of operations to be done on the data; the design of computer representations depends on the desired function of the data as well as on its intrinsic properties. Indeed, an emphasis on function as well as form is basic to design problems in general.

In order to illustrate this point further, let’s consider a related aspect of computer hardware design. A computer memory is often classified as a “random access memory,” like MIX’s main memory; or as a “read-only memory,” which is supposed to contain essentially constant information; or a “secondary bulk memory,” like MIX’s disk units, which cannot be accessed at high speed although large quantities of information can be stored; or an “associative memory,” more properly called a “content-addressed memory,” for which information is addressed by its value rather than by its location; and so on. The intended function of each kind of memory is so important that it enters into the name of the particular memory type; all of these devices are “memory” units, but the purposes to which they are put profoundly influence their design and their cost.

A linear list is a sequence of n ≥ 0 nodes X[1], X[2], . . ., X[n] whose essential structural properties involve only the relative positions between items as they appear in a line. The only things we care about in such structures are the facts that, if n > 0, X[1] is the first node and X[n] is the last; and if 1 < k < n, the kth node X[k] is preceded by X[k − 1] and followed by X[k + 1].

The operations we might want to perform on linear lists include, for example, the following.

i) Gain access to the kth node of the list to examine and/or to change the contents of its fields.

ii) Insert a new node just before or after the kth node.

iii) Delete the kth node.

iv) Combine two or more linear lists into a single list.

v) Split a linear list into two or more lists.

vi) Make a copy of a linear list.

vii) Determine the number of nodes in a list.

viii) Sort the nodes of the list into ascending order based on certain fields of the nodes.

ix) Search the list for the occurrence of a node with a particular value in some field.

In operations (i), (ii), and (iii) the special cases k = 1 and k = n are of principal importance, since the first and last items of a linear list may be easier to get at than a general element is. We will not discuss operations (viii) and (ix) in this chapter, since those topics are the subjects of Chapters 5 and 6, respectively.
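
As a rough illustration of operations (i) through (vii), here is how they look on a built-in Python list. This is only one possible representation, and the variable names are assumptions; note that Python indexes from 0, so the node X[k] of the text is `xs[k - 1]`.

```python
xs = ["a", "b", "c", "d"]    # X[1], X[2], X[3], X[4]
k = 2

kth = xs[k - 1]              # (i)   access the kth node
xs.insert(k, "new")          # (ii)  insert just after the kth node
del xs[k - 1]                # (iii) delete the kth node
combined = xs + ["e", "f"]   # (iv)  combine two lists into one
left, right = combined[:3], combined[3:]  # (v) split into two lists
duplicate = list(combined)   # (vi)  make a copy
n = len(combined)            # (vii) number of nodes
```

Each line is short to write, but the costs differ: inserting or deleting in the middle of such a list moves every later element, which is the kind of trade-off the following paragraphs discuss.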

A computer application rarely calls for all nine of these operations in their full generality, so we find that there are many ways to represent linear lists depending on the class of operations that are to be done most frequently. It is difficult to design a single representation method for linear lists in which all of these operations are efficient; for example, the ability to gain access to the kth node of a long list for random k is comparatively hard to do if at the same time we are inserting and deleting items in the middle of the list. Therefore we distinguish between types of linear lists depending on the principal operations to be performed, just as we have noted that computer memories are distinguished by their intended applications.

Linear lists in which insertions, deletions, and accesses to values occur almost always at the first or the last node are very frequently encountered, and we give them special names:

A stack is a linear list for which all insertions and deletions (and usually all accesses) are made at one end of the list.

A queue is a linear list for which all insertions are made at one end of the list; all deletions (and usually all accesses) are made at the other end.

A deque (“double-ended queue”) is a linear list for which all insertions and deletions (and usually all accesses) are made at the ends of the list.

A deque is therefore more general than a stack or a queue; it has some properties in common with a deck of cards, and it is pronounced the same way. We also distinguish output-restricted or input-restricted deques, in which deletions or insertions, respectively, are allowed to take place at only one end.

In some disciplines the word “queue” has been used in a much broader sense, to describe any kind of list that is subject to insertions and deletions; the special cases identified above are then called various “queuing disciplines.” Only the restricted use of the term “queue” is intended in this book, however, by analogy with orderly queues of people waiting in line for service.

Sometimes it helps to understand the mechanism of a stack in terms of an analogy from the switching of railroad cars, as suggested by E. W. Dijkstra (see Fig. 1). A corresponding picture for deques is shown in Fig. 2.

With a stack we always remove the “youngest” item currently in the list, namely the one that has been inserted more recently than any other. With a queue just the opposite is true: The “oldest” item is always removed; the nodes leave the list in the same order as they entered it.
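
The difference in removal order can be seen in a small Python sketch. It uses a plain list as the stack and `collections.deque` from the standard library as the queue and the deque; the variable names are illustrative.

```python
from collections import deque

# Stack: insertions and deletions at the same end.
stack = []
for item in (1, 2, 3):
    stack.append(item)
stack_order = [stack.pop() for _ in range(3)]      # youngest first: [3, 2, 1]

# Queue: insert at the rear, delete at the front.
queue = deque()
for item in (1, 2, 3):
    queue.append(item)
queue_order = [queue.popleft() for _ in range(3)]  # oldest first: [1, 2, 3]

# Deque: insertions and deletions at either end.
d = deque()
d.append(2)        # insert at the right
d.appendleft(1)    # insert at the left
d.append(3)        # insert at the right; d is now 1, 2, 3
ends = (d.popleft(), d.pop())                      # (1, 3); 2 remains
```

An input-restricted or output-restricted deque would simply refrain from using one of the two insertion calls or one of the two deletion calls, respectively.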

Many people who have independently realized the importance of stacks and queues have given them other names: Stacks have been called push-down lists, reversion storages, cellars, nesting stores, piles, last-in-first-out (“LIFO”) lists, and even yo-yo lists. Queues are sometimes called circular stores or first-in-first-out (“FIFO”) lists. The terms LIFO and FIFO have been used for many years by accountants, as names of methods for pricing inventories. Still another term, “shelf,” has been applied to output-restricted deques, and input-restricted deques have been called “scrolls” or “rolls.” This multiplicity of names is interesting in itself, since it is evidence for the importance of the concepts. The words stack and queue are gradually becoming standard terminology; of all the other words listed above, only “push-down list” is still reasonably common, particularly in connection with automata theory.

Stacks arise quite frequently in practice. We might, for example, go through a set of data and keep a list of exceptional conditions or things to do later; after we’re done with the original set, we can then do the rest of the processing by coming back to the list, removing entries until it becomes empty. (The “saddle point” problem, exercise 1.3.2–10, is an instance of this situation.) Either a stack or a queue will be suitable for such a list, but a stack is generally more convenient. We all have “stacks” in our minds when we are solving problems: One problem leads to another and this leads to another; we stack up problems and subproblems and remove them as they are solved. Similarly, the process of entering and leaving subroutines during the execution of a computer program has a stack-like behavior. Stacks are particularly useful for the processing of languages with a nested structure, like programming languages, arithmetic expressions, and the literary German “Schachtelsätze.” In general, stacks occur most frequently in connection with explicitly or implicitly recursive algorithms, and we will discuss this connection thoroughly in Chapter 8.

Special terminology is generally used when algorithms refer to these structures: We put an item onto the top of a stack, or take the top item off (see Fig. 3a). The bottom of the stack is the least accessible item, and it will not be removed until all other items have been deleted. (People often say that they push an item down onto a stack, and pop the stack up when the top item is deleted. This terminology comes from an analogy with the stacks of plates often found in cafeterias. The brevity of the words “push” and “pop” has its advantages, but these terms falsely imply a motion of the whole list within computer memory. Nothing is physically pushed down; items are added onto the top, as in haystacks or stacks of boxes.) With queues, we speak of the front and the rear of the queue; things enter at the rear and are removed when they ultimately reach the front position (see Fig. 3b). When referring to deques, we speak of the left and right ends (Fig. 3c). The concepts of top, bottom, front, and rear are sometimes applied to deques that are being used as stacks or queues, with no standard conventions as to whether top, bottom, front, and rear should appear at the left or the right.

Thus we find it easy to use a rich variety of descriptive words from English in our algorithms: “up-down” terminology for stacks, “waiting in line” terminology for queues, and “left-right” terminology for deques.

A little bit of additional notation has proved to be convenient for dealing with stacks and queues: We write

(when A is a stack) to mean that the value x is inserted on top of stack A, or (when A is a queue) to mean that x is inserted at the rear of the queue. Similarly, the notation

is used to mean that the variable x is set equal to the value at the top of stack A or at the front of queue A, and this value is deleted from A. Notation (2) is meaningless when A is empty — that is, when A contains no values.

If A is a nonempty stack, we may write

to denote its top element.

Exercises

1. [06] An input-restricted deque is a linear list in which items may be inserted at one end but removed from either end; clearly an input-restricted deque can operate either as a stack or as a queue, if we consistently remove all items from one of the two ends. Can an output-restricted deque also be operated either as a stack or as a queue?

2. [15] Imagine four railroad cars positioned on the input side of the track in Fig. 1, numbered 1, 2, 3, and 4, from left to right. Suppose we perform the following sequence of operations (which is compatible with the direction of the arrows in the diagram and does not require cars to “jump over” other cars): (i) move car 1 into the stack; (ii) move car 2 into the stack; (iii) move car 2 into the output; (iv) move car 3 into the stack; (v) move car 4 into the stack; (vi) move car 4 into the output; (vii) move car 3 into the output; (viii) move car 1 into the output.

As a result of these operations the original order of the cars, 1234, has been changed into 2431. It is the purpose of this exercise and the following exercises to examine what permutations are obtainable in such a manner from stacks, queues, or deques.

If there are six railroad cars numbered 123456, can they be permuted into the order 325641? Can they be permuted into the order 154623? (In case it is possible, show how to do it.)

3. [25] The operations (i) through (viii) in the previous exercise can be much more concisely described by the code SSXSSXXX, where S stands for “move a car from the input into the stack,” and X stands for “move a car from the stack into the output.” Some sequences of S’s and X’s specify meaningless operations, since there may be no cars available on the specified track; for example, the sequence SXXSSXXS cannot be carried out, since we assume that the stack is initially empty.

Let us call a sequence of S’s and X’s admissible if it contains n S’s and n X’s, and if it specifies no operations that cannot be performed. Formulate a rule by which it is easy to distinguish between admissible and inadmissible sequences; show furthermore that no two different admissible sequences give the same output permutation.

4. [M34] Find a simple formula for $a_n$, the number of permutations on n elements that can be obtained with a stack like that in exercise 2.

5. [M28] Show that it is possible to obtain a permutation $p_1 p_2 \ldots p_n$ from 12 . . . n using a stack if and only if there are no indices i < j < k such that $p_j < p_k < p_i$.

6. [00] Consider the problem of exercise 2, with a queue substituted for a stack. What permutations of 12 . . . n can be obtained with use of a queue?

7. [25] Consider the problem of exercise 2, with a deque substituted for a stack. (a) Find a permutation of 1234 that can be obtained with an input-restricted deque but not with an output-restricted deque. (b) Find a permutation of 1234 that can be obtained with an output-restricted deque but not with an input-restricted deque. [As a consequence of (a) and (b), there is definitely a difference between input-restricted and output-restricted deques.] (c) Find a permutation of 1234 that cannot be obtained with either an input-restricted or an output-restricted deque.

8. [22] Are there any permutations of 12 . . . n that cannot be obtained with the use of a deque that is neither input- nor output-restricted?

9. [M20] Let $b_n$ be the number of permutations on n elements obtainable by the use of an input-restricted deque. (Note that $b_4 = 22$, as shown in exercise 7.) Show that $b_n$ is also the number of permutations on n elements with an output-restricted deque.

10. [M25] (See exercise 3.) Let S, Q, and X denote respectively the operations of inserting an element at the left, inserting an element at the right, and emitting an element from the left, of an output-restricted deque. For example, the sequence QQXSXSXX will transform the input sequence 1234 into 1342. The sequence SXQSXSXX gives the same transformation.

Find a way to define the concept of an admissible sequence of the symbols S, Q, and X, so that the following property holds: Every permutation of n elements that is attainable with an output-restricted deque corresponds to precisely one admissible sequence.

11. [M40] As a consequence of exercises 9 and 10, the number $b_n$ is the number of admissible sequences of length 2n. Find a closed form for the generating function $\sum_{n \ge 0} b_n z^n$.

12. [HM34] Compute the asymptotic values of the quantities $a_n$ and $b_n$ in exercises 4 and 11.

13. [M48] How many permutations of n elements are obtainable with the use of a general deque? [See Rosenstiehl and Tarjan, J. Algorithms 5 (1984), 389–390, for an algorithm that decides in O(n) steps whether or not a given permutation is obtainable.]

14. [26] Suppose you are allowed to use only stacks as data structures. How can you implement a queue efficiently with two stacks?

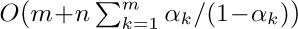

The simplest and most natural way to keep a linear list inside a computer is to put the list items in consecutive locations, one node after the other. Then we will have

LOC(X[j + 1]) = LOC(X[j]) + c,

where c is the number of words per node. (Usually c = 1. When c > 1, it is sometimes more convenient to split a single list into c “parallel” lists, so that the kth word of node X[j] is stored a fixed distance from the location of the first word of X[j], depending on k. We will continually assume, however, that adjacent groups of c words form a single node.) In general,

LOC(X[j]) = $L_0$ + cj, (1)

where $L_0$ is a constant called the base address, the location of an artificially assumed node X[0].
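
The address computation for sequential allocation is simple enough to state as a one-line function. In this sketch the function name `loc` and the sample base address 1000 are assumptions; the formula is the one implied by the recurrence above, with node X[0] sitting at the base address.

```python
def loc(base_address, words_per_node, j):
    """Address of node X[j] when nodes of c words are stored consecutively."""
    return base_address + words_per_node * j


# With a hypothetical base address of 1000 and two-word nodes:
first = loc(1000, 2, 1)   # 1002
third = loc(1000, 2, 3)   # 1006
```

Successive nodes are exactly c words apart, so access to X[j] for arbitrary j costs one multiplication and one addition.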

This technique for representing a linear list is so obvious and well-known that there seems to be no need to dwell on it at any length. But we will be seeing many other “more sophisticated” methods of representation later on in this chapter, and it is a good idea to examine the simple case first to see just how far we can go with it. It is important to understand the limitations as well as the power of the use of sequential allocation.

Sequential allocation is quite convenient for dealing with a stack. We simply have a variable T called the stack pointer. When the stack is empty, we let T = 0. To place a new element Y on top of the stack, we set

T ← T + 1; X[T] ← Y. (2)

And when the stack is not empty, we can set Y equal to the top node and delete that node by reversing the actions of (2):

Y ← X[T]; T ← T − 1. (3)

(Inside a computer it is usually most efficient to maintain the value cT instead of T, because of (1). Such modifications are easily made, so we will continue our discussion as though c = 1.)
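
A sequentially allocated stack with a stack pointer T might be sketched as follows, taking c = 1. The class name `SequentialStack` and the table size are assumptions, and the sketch deliberately performs no bounds checking, matching the unguarded operations described so far.

```python
class SequentialStack:
    """Stack kept in consecutive table entries X[1], X[2], ...; T = 0 means empty."""

    def __init__(self, size):
        self.x = [None] * (size + 1)  # X[0] is unused so indices match the text
        self.t = 0                    # stack pointer T

    def push(self, y):
        self.t += 1                   # T <- T + 1
        self.x[self.t] = y            # X[T] <- Y

    def pop(self):
        y = self.x[self.t]            # Y <- X[T]
        self.t -= 1                   # T <- T - 1
        return y


s = SequentialStack(8)
s.push(10)
s.push(20)
top_item = s.pop()                    # 20, the most recently inserted item
```

Deletion does not erase the old table entry; lowering T is enough, since everything above X[T] is regarded as unused.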

The representation of a queue or a more general deque is a little trickier. An obvious solution is to keep two pointers, say F and R (for the front and rear of the queue), with F = R = 0 when the queue is empty. Then inserting an element at the rear of the queue would be

R ← R + 1; X[R] ← Y. (4)

R ← R + 1; X[R] ← Y. (4)

Removing the front node (F points just below the front) would be

F ← F + 1; Y ← X[F]; if F = R, then set F ← R ← 0. (5)

But note what can happen: If R always stays ahead of F (so that there is always at least one node in the queue) the table entries used are X[1], X[2], . . ., X[1000], . . ., ad infinitum, and this is terribly wasteful of storage space. The simple method of (4) and (5) should therefore be used only in the situation when F is known to catch up to R quite regularly — for example, if all deletions come in spurts that empty the queue.

To circumvent the problem of the queue overrunning memory, we can set aside M nodes X[1], . . ., X[M] arranged implicitly in a circle with X[1] following X[M]. Then processes (4) and (5) above become

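if R = M then R ← 1, otherwise R ← R + 1; X[R] ← Y. (6)

if F = M then F ← 1, otherwise F ← F + 1; Y ← X[F]. (7)

We have, in fact, already seen circular queuing action like this, when we looked at input-output buffering in Section 1.4.4.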

Our discussion so far has been very unrealistic, because we have tacitly assumed that nothing could go wrong. When we deleted a node from a stack or queue, we assumed that there was at least one node present. When we inserted a node into a stack or queue, we assumed that there was room for it in memory. But clearly the method of (6) and (7) allows at most M nodes in the entire queue, and methods (2), (3), (4), (5) allow T and R to reach only a certain maximum amount within any given computer program. The following specifications show how the actions should be rewritten for the common case where we do not assume that these restrictions are automatically satisfied:

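Insert into stack: T ← T + 1; if T > M, then OVERFLOW; X[T] ← Y. (2a)

Delete from stack: if T = 0, then UNDERFLOW; Y ← X[T]; T ← T − 1. (3a)

Insert into queue: if R = M, then R ← 1, otherwise R ← R + 1; if R = F, then OVERFLOW; X[R] ← Y. (6a)

Delete from queue: if F = R, then UNDERFLOW; if F = M, then F ← 1, otherwise F ← F + 1; Y ← X[F]. (7a)

Here we assume that X[1], . . ., X[M] is the total amount of space allowed for the list; OVERFLOW and UNDERFLOW mean an excess or deficiency of items. The initial setting F = R = 0 for the queue pointers is no longer valid when we use (6a) and (7a), because overflow will not be detected when F = 0; we should start with F = R = 1, say.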
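The checked operations (2a), (3a), (6a), and (7a) can be sketched in Python as follows. This is only an illustration under stated assumptions: the class and exception names are hypothetical, cell X[0] is left unused so that indices run from 1 to M as in the text, and the pointer is left unchanged when OVERFLOW is raised (a choice made here so that the structure stays usable afterwards).

```python
class Overflow(Exception):
    """Raised when an insertion finds no room (OVERFLOW in the text)."""


class Underflow(Exception):
    """Raised when a deletion finds no item (UNDERFLOW in the text)."""


class SeqStack:
    """Stack kept in X[1..M], in the spirit of (2a) and (3a)."""

    def __init__(self, M):
        self.M = M
        self.X = [None] * (M + 1)   # X[0] is unused
        self.T = 0                  # T = 0 means the stack is empty

    def push(self, y):
        if self.T + 1 > self.M:     # assumption: test before advancing T
            raise Overflow
        self.T += 1
        self.X[self.T] = y

    def pop(self):
        if self.T == 0:
            raise Underflow
        y = self.X[self.T]
        self.T -= 1
        return y


class CircularQueue:
    """Queue kept in X[1..M] arranged in a circle, as in (6a) and (7a)."""

    def __init__(self, M):
        self.M = M
        self.X = [None] * (M + 1)   # X[0] is unused
        self.F = self.R = 1         # F = R means the queue is empty

    def insert(self, y):
        r = 1 if self.R == self.M else self.R + 1
        if r == self.F:             # rear would catch up with front
            raise Overflow
        self.R = r
        self.X[r] = y

    def delete(self):
        if self.F == self.R:
            raise Underflow
        self.F = 1 if self.F == self.M else self.F + 1
        return self.X[self.F]
```

Because F points just below the front, the test F = R serves as the emptiness check, which is why the insertion step must refuse to let R advance onto F.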

The reader is urged to work exercise 1, which discusses a nontrivial aspect of this simple queuing mechanism.

The next question is, “What do we do when UNDERFLOW or OVERFLOW occurs?” In the case of UNDERFLOW, we have tried to remove a nonexistent item; this is usually a meaningful condition — not an error situation — that can be used to govern the flow of a program. For example, we might want to delete items repeatedly until UNDERFLOW occurs. An OVERFLOW situation, however, is usually an error; it means that the table is full already, yet there is still more information waiting to be put in. The usual policy in case of OVERFLOW is to report reluctantly that the program cannot go on because its storage capacity has been exceeded; then the program terminates.

Of course we hate to give up in an OVERFLOW situation when only one list has gotten too large, while other lists of the same program may very well have plenty of room remaining. In the discussion above we were primarily thinking of a program with only one list. However, we frequently encounter programs that involve several stacks, each of which has a dynamically varying size. In such a situation we don’t want to impose a maximum size on each stack, since the size is usually unpredictable; and even if a maximum size has been determined for each stack, we will rarely find all stacks simultaneously filling their maximum capacity.

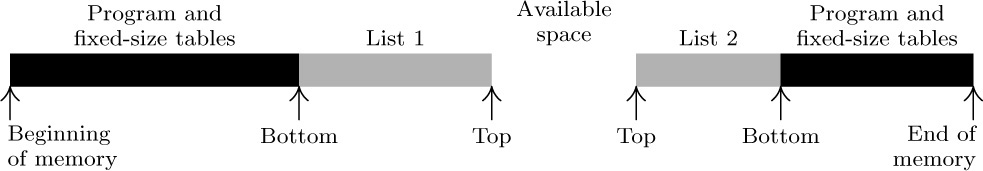

When there are just two variable-size lists, they can coexist together very nicely if we let the lists grow toward each other:

Here list 1 expands to the right, and list 2 (stored in reverse order) expands to the left. OVERFLOW will not occur unless the total size of both lists exhausts all memory space. The lists may independently expand and contract so that the effective maximum size of each one could be significantly more than half of the available space. This layout of memory space is used very frequently.
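A minimal sketch of this two-list layout, assuming 0-origin indexing into a Python list of M cells (the class name and the use of Python's built-in exceptions are illustrative choices, not part of the text):

```python
class TwoStacks:
    """Two stacks sharing X[0..M-1]; stack 1 grows right, stack 2 grows left."""

    def __init__(self, M):
        self.X = [None] * M
        self.top1 = -1          # stack 1 occupies X[0..top1]
        self.top2 = M           # stack 2 occupies X[top2..M-1]

    def push(self, which, y):
        # OVERFLOW only when the two lists together use every cell.
        if self.top1 + 1 == self.top2:
            raise OverflowError("the two lists together fill all M cells")
        if which == 1:
            self.top1 += 1
            self.X[self.top1] = y
        else:
            self.top2 -= 1
            self.X[self.top2] = y

    def pop(self, which):
        if which == 1:
            if self.top1 < 0:
                raise IndexError("UNDERFLOW on stack 1")
            y = self.X[self.top1]
            self.top1 -= 1
        else:
            if self.top2 == len(self.X):
                raise IndexError("UNDERFLOW on stack 2")
            y = self.X[self.top2]
            self.top2 += 1
        return y
```

With M = 5, for instance, stack 1 may hold four items while stack 2 holds one; neither stack has a size limit of its own.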

We can easily convince ourselves, however, that there is no way to store three or more variable-size sequential lists in memory so that (a) OVERFLOW will occur only when the total size of all lists exceeds the total space, and (b) each list has a fixed location for its “bottom” element. When there are, say, ten or more variable-size lists — and this is not unusual — the storage allocation problem becomes very significant. If we wish to satisfy condition (a), we must give up condition (b); that is, we must allow the “bottom” elements of the lists to change their positions. This means that the location L0 of Eq. (1) is not constant any longer; no reference to the table may be made to an absolute memory address, since all references must be relative to the base address L0. In the case of MIX, the coding to bring the Ith one-word node into register A is changed from

where BASE contains the base address L0. Such relative addressing evidently takes longer than fixed-base addressing, although it would be only slightly slower if MIX had an “indirect addressing” feature (see exercise 3).

An important special case occurs when each of the variable-size lists is a stack. Then, since only the top element of each stack is relevant at any time, we can proceed almost as efficiently as before. Suppose that we have n stacks; the insertion and deletion algorithms above become the following, if BASE[i] and TOP[i] are link variables for the ith stack, and if each node is one word long:

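Insertion: TOP[i] ← TOP[i] + 1; if TOP[i] > BASE[i + 1], then OVERFLOW; otherwise set CONTENTS(TOP[i]) ← Y. (9)

Deletion: if TOP[i] = BASE[i], then UNDERFLOW; otherwise set Y ← CONTENTS(TOP[i]), TOP[i] ← TOP[i] − 1. (10)

Here BASE[i + 1] is the base location of the (i + 1)st stack. The condition TOP[i] = BASE[i] means that stack i is empty.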

In (9), OVERFLOW is no longer such a crisis as it was before; we can “repack memory,” making room for the table that overflowed by taking some away from tables that aren’t yet filled. Several ways to do the repacking suggest themselves; we will now consider some of them in detail, since they can be quite important when linear lists are allocated sequentially. We will start by giving the simplest of the methods, and will then consider some of the alternatives.

Assume that there are n stacks, and that the values BASE[i] and TOP[i] are to be treated as in (9) and (10). These stacks are all supposed to share a common memory area consisting of all locations L with L0 < L ≤ L∞. (Here L0 and L∞ are constants that specify the total number of words available for use.) We might start out with all stacks empty, and

BASE[ j] = TOP[ j] = L0 for 1 ≤ j ≤ n. (11)

We also set BASE[n + 1] = L∞ so that (9) will work properly for i = n.

When OVERFLOW occurs with respect to stack i, there are three possibilities:

a) We find the smallest k for which i < k ≤ n and TOP[k] < BASE[k + 1], if any such k exist. Now move things up one notch:

Set CONTENTS(L + 1) ← CONTENTS(L), for TOP[k] ≥ L > BASE[i + 1].

(This must be done for decreasing, not increasing, values of L to avoid losing information. It is possible that TOP[k] = BASE[i + 1], in which case nothing needs to be moved.) Finally we set BASE[ j] ← BASE[ j] + 1 and TOP[ j] ← TOP[ j] + 1, for i < j ≤ k.

b) No k can be found as in (a), but we find the largest k for which 1 ≤ k < i and TOP[k] < BASE[k + 1]. Now move things down one notch:

Set CONTENTS(L − 1) ← CONTENTS(L), for BASE[k + 1] < L < TOP[i].

(This must be done for increasing values of L.) Then set BASE[ j] ← BASE[ j] − 1 and TOP[ j] ← TOP[ j] − 1, for k < j ≤ i.

c) We have TOP[k] = BASE[k + 1] for all k ≠ i. Then obviously we cannot find room for the new stack entry, and we must give up.
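The three cases can be sketched in Python as follows, with the stack operations of (9) and (10) wrapped around them. The class name, the `moves` counter, and the use of `MemoryError` and `IndexError` are assumptions made for illustration; all stacks start empty with every free cell effectively belonging to stack n.

```python
class SharedStacks:
    """n stacks in CONTENTS(L), L0 < L <= Linf, repacked one notch at a time."""

    def __init__(self, n, L0, Linf):
        self.n = n
        self.mem = [None] * (Linf + 1)
        self.BASE = [None] + [L0] * n + [Linf]   # BASE[1..n+1]
        self.TOP = [None] + [L0] * n             # TOP[1..n]
        self.moves = 0                           # words moved while repacking

    def push(self, i, y):
        self.TOP[i] += 1
        if self.TOP[i] > self.BASE[i + 1]:
            self._overflow(i)
        self.mem[self.TOP[i]] = y

    def pop(self, i):
        if self.TOP[i] == self.BASE[i]:
            raise IndexError("UNDERFLOW")
        y = self.mem[self.TOP[i]]
        self.TOP[i] -= 1
        return y

    def _overflow(self, i):
        BASE, TOP, mem = self.BASE, self.TOP, self.mem
        # Case (a): smallest k > i with room; move things up one notch.
        for k in range(i + 1, self.n + 1):
            if TOP[k] < BASE[k + 1]:
                for L in range(TOP[k], BASE[i + 1], -1):    # decreasing L
                    mem[L + 1] = mem[L]
                    self.moves += 1
                for j in range(i + 1, k + 1):
                    BASE[j] += 1
                    TOP[j] += 1
                return
        # Case (b): largest k < i with room; move things down one notch.
        for k in range(i - 1, 0, -1):
            if TOP[k] < BASE[k + 1]:
                for L in range(BASE[k + 1] + 1, TOP[i]):    # increasing L
                    mem[L - 1] = mem[L]
                    self.moves += 1
                for j in range(k + 1, i + 1):
                    BASE[j] -= 1
                    TOP[j] -= 1
                return
        # Case (c): no room anywhere; undo the increment and give up.
        TOP[i] -= 1
        raise MemoryError("OVERFLOW: memory is full")
```

The direction of each copying loop matters: copying upward must proceed from the highest address down, and copying downward from the lowest address up, so that no word is overwritten before it has been moved.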

Figure 4 illustrates the configuration of memory for the case n = 4, L0 = 0, L∞ = 20, after the successive actions

(Here Ij and Dj refer to insertion and deletion in stack j, and an asterisk refers to an occurrence of OVERFLOW, assuming that no space is initially allocated to stacks 1, 2, and 3.)

It is clear that many of the first stack overflows that occur with this method could be eliminated if we chose our initial conditions wisely, instead of allocating all space initially to the nth stack as suggested in (11). For example, if we expect each stack to be of the same size, we can start out with

Operating experience with a particular program may suggest better starting values; however, no matter how well the initial allocation is set up, it can save at most a fixed number of overflows, and the effect is noticeable only in the early stages of a program run. (See exercise 17.)

Another possible way to improve the method above would be to make room for more than one new entry each time memory is repacked. This idea has been exploited by J. Garwick, who suggests a complete repacking of memory when overflow occurs, based on the change in size of each stack since the last repacking. His algorithm uses an additional array, called OLDTOP[ j], 1 ≤ j ≤ n, which retains the value that TOP[ j] had just after the previous allocation of memory. Initially, the tables are set as before, with OLDTOP[ j] = TOP[ j]. The algorithm proceeds as follows:

Algorithm G (Reallocate sequential tables). Assume that OVERFLOW has occurred in stack i, according to (9). After Algorithm G has been performed, either we will find the memory capacity exceeded or the memory will have been rearranged so that the action CONTENTS(TOP[i]) ← Y may be done. (Notice that TOP[i] has already been increased in (9) before Algorithm G takes place.)

G1. [Initialize.] Set SUM ← L∞ - L0, INC ← 0. Then do step G2 for 1 ≤ j ≤ n. (The effect will be to make SUM equal to the total amount of memory space left, and INC equal to the total amount of increases in table sizes since the last allocation.) After this has been done, go on to step G3.

G2. [Gather statistics.] Set SUM ← SUM − (TOP[ j] − BASE[ j]). If TOP[ j] > OLDTOP[ j], set D[ j] ← TOP[ j] − OLDTOP[ j] and INC ← INC + D[ j]; otherwise set D[ j] ← 0.

G3. [Is memory full?] If SUM < 0, we cannot proceed.

G4. [Compute allocation factors.] Set α ← 0.1 × SUM/n, β ← 0.9 × SUM/INC. (Here α and β are fractions, not integers, which are to be computed to reasonable accuracy. The following step awards the available space to individual lists as follows: Approximately 10 percent of the memory presently available will be shared equally among the n lists, and the other 90 percent will be divided proportionally to the amount of increase in table size since the previous allocation.)

G5. [Compute new base addresses.] Set NEWBASE[1] ← BASE[1] and σ ← 0; then for j = 2, 3, . . ., n set τ ← σ + α + D[ j − 1]β, NEWBASE[ j] ← NEWBASE[ j − 1] + TOP[ j − 1] − BASE[ j − 1] + ⌊τ⌋ − ⌊σ⌋, and σ ← τ .

G6. [Repack.] Set TOP[i] ← TOP[i] − 1. (This reflects the true size of the ith list, so that no attempt will be made to move information from beyond the list boundary.) Perform Algorithm R below, and then reset TOP[i] ←TOP[i] + 1. Finally set OLDTOP[ j] ← TOP[ j] for 1 ≤ j ≤ n.
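Steps G1 through G5 can be sketched in Python as below. The function name and argument order are hypothetical; the arrays are 1-indexed lists whose entry 0 is unused, and exact rational arithmetic (`fractions.Fraction`) is substituted for the "reasonable accuracy" of step G4 so that the floors are unambiguous. The code assumes INC > 0 at step G4 (compare exercise 7).

```python
from fractions import Fraction
from math import floor


def garwick_newbase(BASE, TOP, OLDTOP, n, L0, Linf):
    """Return NEWBASE[1..n] (entry 0 unused) following steps G1-G5.

    TOP[i] for the overflowing stack is expected to be already
    incremented, as in (9).
    """
    # G1, G2: gather statistics.
    SUM, INC = Linf - L0, 0
    D = [0] * (n + 1)
    for j in range(1, n + 1):
        SUM -= TOP[j] - BASE[j]
        if TOP[j] > OLDTOP[j]:
            D[j] = TOP[j] - OLDTOP[j]
            INC += D[j]
    # G3: is memory full?
    if SUM < 0:
        raise MemoryError("memory capacity exceeded")
    # G4: 10 percent shared equally, 90 percent in proportion to growth.
    alpha = Fraction(SUM, 10 * n)
    beta = Fraction(9 * SUM, 10 * INC)      # assumes INC > 0
    # G5: new base addresses; the floors keep the running total integral.
    NEWBASE = [None] * (n + 1)
    NEWBASE[1] = BASE[1]
    sigma = Fraction(0)
    for j in range(2, n + 1):
        tau = sigma + alpha + D[j - 1] * beta
        NEWBASE[j] = (NEWBASE[j - 1] + TOP[j - 1] - BASE[j - 1]
                      + floor(tau) - floor(sigma))
        sigma = tau
    return NEWBASE
```

As a made-up example with n = 4, L0 = 0, L∞ = 20, suppose BASE = (0, 5, 10, 15), TOP = (6, 7, 12, 15) after the increment in stack 1, and OLDTOP = (3, 7, 10, 15). Then SUM = 10, INC = 5, α = 0.25, β = 1.8, and the new bases come out as (0, 11, 13, 19).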

Perhaps the most interesting part of this whole algorithm is the general repacking process, which we shall now describe. Repacking is not trivial, since some portions of memory shift up and others shift down; it is obviously important not to overwrite any of the good information in memory while it is being moved.

Algorithm R (Relocate sequential tables). For 1 ≤ j ≤ n, the information specified by BASE[j] and TOP[j] in accord with the conventions stated above is moved to new positions specified by NEWBASE[j], and the values of BASE[j] and TOP[j] are suitably adjusted. This algorithm is based on the easily verified fact that the data to be moved downward cannot overlap with any data that is to be moved upward, nor with any data that is supposed to stay put.

R1. [Initialize.] Set j ← 1.

R2. [Find start of shift.] (Now all lists from 1 to j that were to be moved down have been shifted into the desired position.) Increase j in steps of 1 until finding either

a) NEWBASE[ j] < BASE[ j]: Go to R3; or

b) j > n: Go to R4.

R3. [Shift list down.] Set δ ← BASE[ j] − NEWBASE[ j]. Set CONTENTS(L − δ) ← CONTENTS(L), for L = BASE[ j] + 1, BASE[ j] + 2, . . ., TOP[ j]. (It is possible for BASE[ j] to equal TOP[ j], in which case no action is required.) Set BASE[ j] ← NEWBASE[ j], TOP[ j] ← TOP[ j] − δ. Go back to R2.

R4. [Find start of shift.] (Now all lists from j to n that were to be moved up have been shifted into the desired position.) Decrease j in steps of 1 until finding either

a) NEWBASE[ j] > BASE[ j]: Go to R5; or

b) j = 1: The algorithm terminates.

R5. [Shift list up.] Set δ ← NEWBASE[ j] − BASE[ j]. Set CONTENTS(L + δ) ← CONTENTS(L), for L = TOP[ j], TOP[ j] − 1, . . ., BASE[ j] + 1. (As in step R3, no action may actually be needed here.) Set BASE[ j] ← NEWBASE[ j], TOP[ j] ← TOP[ j] + δ. Go back to R4.
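Algorithm R translates almost line for line into two loops, one ascending and one descending. The sketch below assumes 1-indexed Python lists (entry 0 unused) and a list `mem` standing for CONTENTS; the function name is hypothetical, and BASE and TOP are updated in place.

```python
def relocate(mem, BASE, TOP, NEWBASE, n):
    """Move stack j from BASE[j] to NEWBASE[j] for 1 <= j <= n (Algorithm R)."""
    # R1-R3: ascending pass, shifting down every list that must move down.
    for j in range(2, n + 1):
        if NEWBASE[j] < BASE[j]:
            delta = BASE[j] - NEWBASE[j]
            for L in range(BASE[j] + 1, TOP[j] + 1):    # increasing L
                mem[L - delta] = mem[L]
            BASE[j] = NEWBASE[j]
            TOP[j] -= delta
    # R4-R5: descending pass, shifting up every list that must move up.
    for j in range(n, 1, -1):
        if NEWBASE[j] > BASE[j]:
            delta = NEWBASE[j] - BASE[j]
            for L in range(TOP[j], BASE[j], -1):        # decreasing L
                mem[L + delta] = mem[L]
            BASE[j] = NEWBASE[j]
            TOP[j] += delta
```

Neither loop examines stack 1, mirroring steps R2 and R4, which begin by changing j and stop at j = 1.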

Notice that stack 1 never needs to be moved. Therefore we should put the largest stack first, if we know which one will be largest.

In Algorithms G and R we have purposely made it possible to have

OLDTOP[ j] ≡ D[ j] ≡ NEWBASE[ j + 1]

for 1 ≤ j ≤ n; that is, these three tables can share common memory locations since their values are never needed at conflicting times.

We have described these repacking algorithms for stacks, but it is clear that they can be adapted to any relatively addressed tables in which the current information is contained between BASE[ j] and TOP[ j]. Other pointers (for example, FRONT[ j] and REAR[ j]) could also be attached to the lists, making them serve as a queue or deque. See exercise 8, which considers the case of a queue in detail.

The mathematical analysis of dynamic storage-allocation algorithms like those above is extremely difficult. Some interesting results appear in the exercises below, although they only begin to scratch the surface as far as the general behavior is concerned.

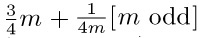

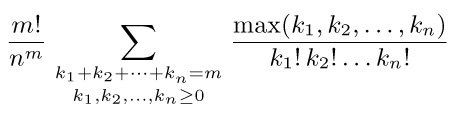

As an example of the theory that can be derived, suppose we consider the case when the tables grow only by insertion; deletions and subsequent insertions that cancel their effect are ignored. Let us assume further that each table is expected to fill at the same rate. This situation can be modeled by imagining a sequence of m insertion operations a1, a2, . . ., am, where each ai is an integer between 1 and n (representing an insertion on top of stack ai). For example, the sequence 1, 1, 2, 2, 1 means two insertions to stack 1, followed by two to stack 2, followed by another onto stack 1. We can regard each of the n^m possible specifications a1, a2, . . ., am as equally likely, and then we can ask for the average number of times it is necessary to move a word from one location to another during the repacking operations as the entire table is built. For the first algorithm, starting with all available space given to the nth stack, this question is analyzed in exercise 9. We find that the average number of move operations required is

(1/2)(1 − 1/n) · m(m − 1)/2. (14)

Thus, as we might expect, the number of moves is essentially proportional to the square of the number of times the tables grow. The same is true if the individual stacks aren’t equally likely (see exercise 10).
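The model is small enough to check by brute force. In the sketch below (function names are hypothetical) an insertion onto stack a_k is charged one move for every earlier insertion onto a higher-numbered stack, which is what the one-notch method does when all free space starts in stack n and memory never fills. The average over all n^m sequences is then compared with (1/2)(1 − 1/n) · m(m − 1)/2, the value obtained by noting that each of the m(m − 1)/2 pairs of insertions is out of order with probability (1/2)(1 − 1/n).

```python
from fractions import Fraction
from itertools import product
from math import comb


def moves(seq):
    """Words moved while building the tables for insertion sequence seq."""
    return sum(
        sum(1 for earlier in seq[:k] if earlier > a)
        for k, a in enumerate(seq)
    )


def average_moves(n, m):
    """Exact average of moves() over all n**m equally likely sequences."""
    total = sum(moves(s) for s in product(range(1, n + 1), repeat=m))
    return Fraction(total, n ** m)


def closed_form(n, m):
    """(1/2)(1 - 1/n) * C(m, 2), as an exact fraction."""
    return Fraction(1, 2) * (1 - Fraction(1, n)) * comb(m, 2)
```

For the sequence 1, 1, 4, 2, 3, 1, 2, 4, 2, 1 quoted in exercise 9, `moves` gives 16; for n = 3 and m = 4 both the enumeration and the closed form give exactly 2.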

The moral of the story seems to be that a very large number of moves will be made if a reasonably large number of items is put into the tables. This is the price we must pay for the ability to pack a large number of sequential tables together tightly. No theory has been developed to analyze the average behavior of Algorithm G, and it is unlikely that any simple model will be able to describe the characteristics of real-life tables in such an environment. However, exercise 18 provides a worst-case guarantee that the running time will not be too bad if the memory doesn’t get too full.

Experience shows that when memory is only half loaded (that is, when the available space equals half the total space), we need very little rearranging of the tables with Algorithm G. The important thing is perhaps that the algorithm behaves well in the half-full case and that it at least delivers the right answers in the almost-full case.

But let us think about the almost-full case more carefully. When the tables nearly fill memory, Algorithm R takes rather long to perform its job. And to make matters worse, OVERFLOW is much more frequent just before the memory space is used up. There are very few programs that will come close to filling memory without soon thereafter completely overflowing it; and those that do overflow memory will probably waste enormous amounts of time in Algorithms G and R just before memory is overrun. Unfortunately, undebugged programs will frequently overflow memory capacity. To avoid wasting all this time, a possible suggestion would be to stop Algorithm G in step G3 if SUM is less than Smin, where the latter is chosen by the programmer to prevent excessive repacking. When there are many variable-size sequential tables, we should not expect to make use of 100 percent of the memory space before storage is exceeded.

Further study of Algorithm G has been made by D. S. Wise and D. C. Watson, BIT 16 (1976), 442–450. See also A. S. Fraenkel, Inf. Proc. Letters 8 (1979), 9–10, who suggests working with pairs of stacks that grow towards each other.

Exercises

1. [15] In the queue operations given by (6a) and (7a), how many items can be in the queue at one time without OVERFLOW occurring?

2. [22] Generalize the method of (6a) and (7a) so that it will apply to any deque with fewer than M elements. In other words, give specifications for the other two operations, “delete from rear” and “insert at front.”

3. [21] Suppose that MIX is extended as follows: The I-field of each instruction is to have the form 8I1 + I2, where 0 ≤ I1 < 8, 0 ≤ I2 < 8. In assembly language one writes ‘OP ADDRESS,I1 :I2’ or (as presently) ‘OP ADDRESS,I2’ if I1 = 0. The meaning is to perform first the “address modification” I1 on ADDRESS, then to perform the “address modification” I2 on the resulting address, and finally to perform the OP with the new address. The address modifications are defined as follows:

0: M = A

1: M = A + rI1

2: M = A + rI2

. . .

6: M = A + rI6

7: M = resulting address defined from the ‘ADDRESS,I1 :I2’ fields found in location A. The case I1 = I2 = 7 in location A is not allowed. (The reason for the latter restriction is discussed in exercise 5.)

Here A denotes the address before the operation, and M denotes the resulting address after the address modification. In all cases the result is undefined if the value of M does not fit in two bytes and a sign. The execution time is increased by one unit for each “indirect-addressing” (modification 7) operation performed.

As a nontrivial example, suppose that location 1000 contains ‘NOP 1000,1:7’; location 1001 contains ‘NOP 1000,2’; and index registers 1 and 2 respectively contain 1 and 2. Then the command ‘LDA 1000,7:2’ is equivalent to ‘LDA 1004’, because

1000,7:2 = (1000,1:7),2 = (1001,7),2 = (1000,2),2 = 1002,2 = 1004.

a) Using this indirect addressing feature (if necessary), show how to simplify the coding on the right-hand side of (8) so that two instructions are saved per reference to the table. How much faster is your code than (8)?

b) Suppose there are several tables whose base addresses are stored in locations BASE + 1, BASE + 2, BASE + 3, . . .; how can the indirect addressing feature be used to bring the Ith element of the Jth table into register A in one instruction, assuming that I is in rI1 and J is in rI2?

c) What is the effect of the instruction ‘ENT4 X,7’, assuming that the (3 : 3)-field in location X is zero?

4. [25] Assume that MIX has been extended as in exercise 3. Show how to give a single instruction (plus auxiliary constants) for each of the following actions:

a) To loop indefinitely because indirect addressing never terminates.

b) To bring into register A the value LINK(LINK(x)), where the value of link variable x is stored in the (0:2) field of the location whose symbolic address is X, the value of LINK(x) is stored in the (0 : 2) field of location x, etc., assuming that the (3 : 3) fields in these locations are zero.

c) To bring into register A the value LINK(LINK(LINK(x))), under assumptions like those in (b).

d) To bring into register A the contents of location rI1 + rI2 + rI3 + rI4 + rI5 + rI6.

e) To quadruple the current value of rI6.

5. [35] The extension of MIX suggested in exercise 3 has an unfortunate restriction that “7 : 7” is not allowed in an indirectly addressed location.

a) Give an example to indicate that, without this restriction, it would probably be necessary for the MIX hardware to be capable of maintaining a long internal stack of three-bit items. (This would be prohibitively expensive hardware, even for a mythical computer like MIX.)

b) Explain why such a stack is not needed under the present restriction; in other words, design an algorithm with which the hardware of a computer could perform the desired address modifications without much additional register capacity.

c) Give a milder restriction than that of exercise 3 on the use of 7:7 that alleviates the difficulties of exercise 4(c), yet can be cheaply implemented in computer hardware.

6. [10] Starting with the memory configuration shown in Fig. 4, determine which of the following sequences of operations causes overflow or underflow:

(a) I1; (b) I2; (c) I3; (d) I4I4I4I4I4; (e) D2D2I2I2I2.

7. [12] Step G4 of Algorithm G indicates a division by the quantity INC. Can INC ever be zero at that point in the algorithm?

8. [26] Explain how to modify (9), (10), and the repacking algorithms for the case that one or more of the lists is a queue being handled circularly as in (6a) and (7a).

9. [M27] Using the mathematical model described near the end of the text, prove that Eq. (14) is the expected number of moves. (Note that the sequence 1, 1, 4, 2, 3, 1, 2, 4, 2, 1 specifies 0 + 0 + 0 + 1 + 1 + 3 + 2 + 0 + 3 + 6 = 16 moves.)

10. [M28] Modify the mathematical model of exercise 9 so that some tables are expected to be larger than others: Let pk be the probability that aj = k, for 1 ≤ j ≤ m, 1 ≤ k ≤ n. Thus p1 + p2 + · · · + pn = 1; the previous exercise considered the special case pk = 1/n for all k. Determine the expected number of moves, as in Eq. (14), for this more general case. It is possible to rearrange the relative order of the n lists so that the lists expected to be longer are put to the right (or to the left) of the lists that are expected to be shorter; what relative order for the n lists will minimize the expected number of moves, based on p1, p2, . . ., pn ?

11. [M30] Generalize the argument of exercise 9 so that the first t insertions in any stack cause no movement, while subsequent insertions are unaffected. Thus if t = 2, the sequence in exercise 9 specifies 0 + 0 + 0 + 0 + 0 + 3 + 0 + 0 + 3 + 6 = 12 moves. What is the average total number of moves under this assumption? [This is an approximation to the behavior of the algorithm when each stack starts with t available spaces.]

12. [M28] The advantage of having two tables coexist in memory by growing towards each other, rather than by having them kept in separate independently bounded areas, may be quantitatively estimated (to a certain extent) as follows. Use the model of exercise 9 with n = 2; for each of the 2^m equally probable sequences a1, a2, . . ., am, let there be k1 1s and k2 2s. (Here k1 and k2 are the respective sizes of the two tables after the memory is full. We are able to run the algorithm with m = k1 + k2 locations when the tables are adjacent, instead of 2 max(k1, k2) locations to get the same effect with separate tables.)

What is the average value of max(k1, k2)?

13. [HM42] The value max(k1, k2) investigated in exercise 12 will be even greater if larger fluctuations in the tables are introduced by allowing random deletions as well as random insertions. Suppose we alter the model so that with probability p the sequence value aj is interpreted as a deletion instead of an insertion; the process continues until k1 + k2 (the total number of table locations in use) equals m. A deletion from an empty list causes no effect.

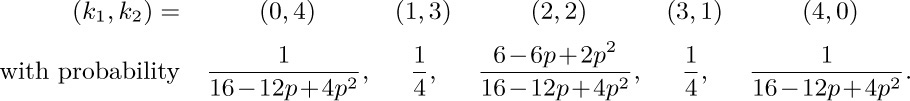

For example, if m = 4 it can be shown that we get the following probability distribution when the process stops:

Thus as p increases, the difference between k1 and k2 tends to increase. It is not difficult to show that in the limit as p approaches unity, the distribution of k1 becomes essentially uniform, and the limiting expected value of max(k1, k2) is exactly  . This behavior is quite different from that in the previous exercise (when p = 0); however, it may not be extremely significant, since when p approaches unity, the amount of time taken to terminate the process rapidly approaches infinity. The problem posed in this exercise is to examine the dependence of max(k1, k2) on p and m, and to determine asymptotic formulas for fixed p (like  ) as m approaches infinity. The case  is particularly interesting.

14. [HM43] Generalize the result of exercise 12 to arbitrary n ≥ 2, by showing that, when n is fixed and m approaches infinity, the quantity

has the asymptotic form m/n + cn√m + O(1). Determine the constants c2, c3, c4, and c5.

15. [40] Using a Monte Carlo method, simulate the behavior of Algorithm G under varying distributions of insertions and deletions. What do your experiments imply about the efficiency of Algorithm G? Compare its performance with the algorithm given earlier that shifts up and down one node at a time.

16. [20] The text illustrates how two stacks can be located so they grow towards each other, thereby making efficient use of a common memory area. Can two queues, or a stack and a queue, make use of a common memory area with the same efficiency?

17. [30] If σ is any sequence of insertions and deletions such as (12), let s0 (σ) be the number of stack overflows that occur when the simple method of Fig. 4 is applied to σ with initial conditions (11), and let s1 (σ) be the corresponding number of overflows with respect to other initial conditions such as (13). Prove that s0 (σ) ≤ s1 (σ)+L∞ − L0.

18. [M30] Show that the total running time for any sequence of m insertions and/or deletions by Algorithms G and R is  , where αk is the fraction of memory occupied on the most recent repacking previous to the kth operation; αk = 0 before the first repacking. (Therefore if the memory never gets more than, say, 90% full, each operation takes at most O(n) units of time in an amortized sense, regardless of the total memory size.) Assume that L∞ − L0 ≥ n^2.

19. [16] (0-origin indexing.) Experienced programmers learn that it is generally wise to denote the elements of a linear list by X[0], X[1], . . ., X[n − 1], instead of using the more traditional notation X[1], X[2], . . ., X[n]. Then, for example, the base address L0 in (1) points to the smallest cell of the array.

Revise the insertion and deletion methods (2a), (3a), (6a), and (7a) for stacks and queues so that they conform to this convention. In other words, change them so that the list elements will appear in the array X[0], X[1], . . ., X[M − 1], instead of X[1], X[2], . . ., X[M].

Instead of keeping a linear list in sequential memory locations, we can make use of a much more flexible scheme in which each node contains a link to the next node of the list.

Here A, B, C, D, and E are arbitrary locations in the memory, and Λ is the null link (see Section 2.1). The program that uses this table in the case of sequential allocation would have an additional variable or constant whose value indicates that the table is five items in length, or else this information would be specified by a sentinel code within item 5 or in the following location. A program for linked allocation would have a link variable or link constant that points to A; all the other items of the list can be found from address A.

Recall from Section 2.1 that links are often shown simply by arrows, since the actual memory locations occupied are usually irrelevant. The linked table above might therefore be shown as follows:

Here FIRST is a link variable pointing to the first node of the list.

We can make several obvious comparisons between these two basic forms of storage:

1) Linked allocation takes up additional memory space for the links. This can be the dominating factor in some situations. However, we frequently find that the information in a node does not take up a whole word anyway, so there is already space for a link field present. Also, it is possible in many applications to combine several items into one node so that there is only one link for several items of information (see exercise 2.5–2). But even more importantly, there is often an implicit gain in storage by the linked memory approach, since tables can overlap, sharing common parts; and in many cases, sequential allocation will not be as efficient as linked allocation unless a rather large number of additional memory locations are left vacant anyway. For example, the discussion at the end of the previous section explains why the systems described there are necessarily inefficient when memory is densely loaded.

2) It is easy to delete an item from within a linked list. For example, to delete item 3 we need only change the link associated with item 2. But with sequential allocation such a deletion generally implies moving a large part of the list up into different locations.

3) It is easy to insert an item into the midst of a list when the linked scheme is being used. For example, to insert an item 2½ into (1) we need to change only two links:

By comparison, this operation would be extremely time-consuming in a long sequential table.

4) References to random parts of the list are much faster in the sequential case. To gain access to the kth item in the list, when k is a variable, takes a fixed time in the sequential case, but we need k iterations to march down to the right place in the linked case. Thus the usefulness of linked memory is predicated on the fact that in the large majority of applications we want to walk through lists sequentially, not randomly; if items in the middle or at the bottom of the list are needed, we try to keep an additional link variable or list of link variables pointing to the proper places.

5) The linked scheme makes it easier to join two lists together, or to break one apart into two that will grow independently.

6) The linked scheme lends itself immediately to more intricate structures than simple linear lists. We can have a variable number of variable-size lists; any node of the list may be a starting point for another list; the nodes may simultaneously be linked together in several orders corresponding to different lists; and so on.

7) Simple operations, like proceeding sequentially through a list, are slightly faster for sequential lists on many computers. For MIX, the comparison is between ‘INC1 c’ and ‘LD1 0,1(LINK)’, which is only one cycle different, but many machines do not enjoy the property of being able to load an index register from an indexed location. If the elements of a linked list belong to different pages in a bulk memory, the memory accesses might take significantly longer.

Thus we see that the linking technique, which frees us from any constraints imposed by the consecutive nature of computer memory, gives us a good deal more efficiency in some operations, while we lose some capabilities in other cases. It is usually clear which allocation technique will be most appropriate in a given situation, and both methods are often used in different lists of the same program.
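Points 2 and 3 above can be illustrated with a minimal sketch. It assumes a hypothetical memory modeled as two parallel Python lists, `INFO` and `LINK`, indexed by node address, with address 0 standing in for the null link Λ; these names and the five-item list are illustrative choices, not part of the original text.

```python
# Hypothetical memory: parallel arrays indexed by node address.
NULL = 0  # stands in for the null link; address 0 is never a real node

INFO = [None, "item 1", "item 2", "item 3", "item 4", "item 5", None]
LINK = [NULL, 2, 3, 4, 5, NULL, NULL]
FIRST = 1  # link variable pointing to the first node


def traverse(first):
    """Collect the INFO fields by following LINK fields from `first`."""
    items, p = [], first
    while p != NULL:
        items.append(INFO[p])
        p = LINK[p]
    return items


# Point 2: delete item 3 by changing only the link associated with item 2.
LINK[2] = LINK[3]
after_delete = traverse(FIRST)

# Point 3: insert a new node (address 6) after item 2 by changing two links.
INFO[6] = "item 2.5"
LINK[6] = LINK[2]  # the new node points to the old successor of item 2
LINK[2] = 6        # item 2 now points to the new node
after_insert = traverse(FIRST)
```

No node is moved in either operation; only link fields change, which is why the cost does not depend on the length of the list.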

In the next few examples we will assume for convenience that a node has one word and that it is broken into the two fields INFO and LINK:

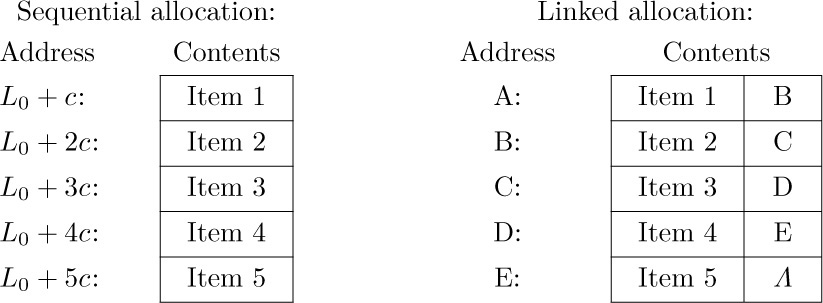

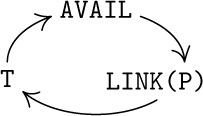

The use of linked allocation generally implies the existence of some mechanism for finding empty space available for a new node, when we wish to insert some newly created information onto a list. This is usually done by having a special list called the list of available space. We will call it the AVAIL list (or the AVAIL stack, since it is usually treated in a last-in-first-out manner). The set of all nodes not currently in use is linked together in a list just like any other list; the link variable AVAIL refers to the top element of this list. Thus, if we want to set link variable X to the address of a new node, and to reserve that node for future use, we can proceed as follows:

X ← AVAIL,  AVAIL ← LINK(AVAIL).  (4)

This effectively removes the top of the AVAIL stack and makes X point to the node just removed. Operation (4) occurs so often that we have a special notation for it: “X ⇐ AVAIL” will mean X is set to point to a new node.

When a node is deleted and no longer needed, process (4) can be reversed:

LINK(X) ← AVAIL,  AVAIL ← X.  (5)

This operation puts the node addressed by X back onto the list of raw material; we denote (5) by “AVAIL ⇐ X”.

Several important things have been omitted from this discussion of the AVAIL stack. We did not say how to set it up at the beginning of a program; clearly this can be done by (a) linking together all nodes that are to be used for linked memory, (b) setting AVAIL to the address of the first of these nodes, and (c) making the last node link to Λ. The set of all nodes that can be allocated is called the storage pool.

A more important omission in our discussion was the test for overflow: We neglected to check in (4) if all available memory space has been taken. The operation X ⇐ AVAIL should really be defined as follows:

if AVAIL = Λ, then OVERFLOW; otherwise X ← AVAIL, AVAIL ← LINK(AVAIL).  (6)

The possibility of overflow must always be considered. Here OVERFLOW generally means that we terminate the program with regrets; or else we can go into a “garbage collection” routine that attempts to find more available space. Garbage collection is discussed in Section 2.3.5.
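The AVAIL stack described above, including its initial setup and the overflow test, might be sketched as follows. This is only an illustration under stated assumptions: memory is modeled as Python lists, address 0 plays the role of Λ, and the class name `Pool`, its method names, and the `Overflow` exception are hypothetical.

```python
NULL = 0  # stands in for the null link; address 0 is never allocated


class Overflow(Exception):
    """Raised when the storage pool is exhausted (the OVERFLOW condition)."""


class Pool:
    """A storage pool of fixed-size nodes at addresses 1..size."""

    def __init__(self, size):
        self.INFO = [None] * (size + 1)
        self.LINK = [NULL] * (size + 1)
        # (a) link all nodes together; (c) the last node keeps LINK = NULL
        for i in range(1, size):
            self.LINK[i] = i + 1
        # (b) AVAIL points to the first node of the pool
        self.AVAIL = 1 if size >= 1 else NULL

    def allocate(self):
        """X <= AVAIL with the overflow test of operation (6)."""
        if self.AVAIL == NULL:
            raise Overflow("storage pool exhausted")
        x = self.AVAIL
        self.AVAIL = self.LINK[x]  # remove the top of the AVAIL stack
        return x

    def free(self, x):
        """AVAIL <= X, operation (5): push node x back onto the AVAIL stack."""
        self.LINK[x] = self.AVAIL
        self.AVAIL = x
```

Because AVAIL is treated last-in-first-out, the node most recently freed is the next one handed out. Here OVERFLOW simply raises an exception; a garbage collection routine could be called at that point instead.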

There is another important technique for handling the AVAIL stack: We often do not know in advance how much memory space should be used for the storage pool. There may be a sequential table of variable size that wants to coexist in memory with the linked tables; in such a case we do not want the linked memory area to take any more space than is absolutely necessary. So suppose that we wish to place the linked memory area in ascending locations beginning with L0 and that this area is never to extend past the value of variable SEQMIN (which represents the current lower bound of the sequential table). Then we can proceed as follows, using a new variable POOLMAX:

a) Initially set AVAIL ← Λ and POOLMAX ← L0.

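b) The operation X ⇐ AVAIL becomes the following:

“If AVAIL ≠ Λ, then X ← AVAIL, AVAIL ← LINK(AVAIL). Otherwise set X ← POOLMAX and POOLMAX ← X + c, where c is the node size; OVERFLOW now occurs if POOLMAX > SEQMIN.”  (7)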

c) When other parts of the program attempt to decrease the value of SEQMIN, they should sound the OVERFLOW alarm if SEQMIN < POOLMAX.

d) The operation AVAIL ⇐ X is unchanged from (5).

This idea actually represents little more than the previous method with a special recovery procedure substituted for the OVERFLOW situation in (6). The net effect is to keep the storage pool as small as possible. Many people like to use this idea even when all lists occupy the storage pool area (so that SEQMIN is constant), since it avoids the rather time-consuming operation of initially linking all available cells together and it facilitates debugging. We could, of course, put the sequential list on the bottom and the pool on the top, having POOLMIN and SEQMAX instead of POOLMAX and SEQMIN.
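The POOLMAX technique of steps (a) through (d) can be sketched in the same spirit. The sketch assumes a hypothetical `GrowingPool` class, a dictionary standing in for the LINK fields of memory, and −1 as the representation of Λ (so that L0 may be any nonnegative address). It also tests for overflow before advancing POOLMAX, which is a small variation chosen here so that a failed request leaves the state unchanged.

```python
NULL = -1  # stands in for the null link, so that address 0 remains usable


class Overflow(Exception):
    """Raised when the pool would collide with the sequential table."""


class GrowingPool:
    def __init__(self, l0, seqmin, node_size=1):
        self.LINK = {}          # hypothetical memory: address -> LINK field
        self.AVAIL = NULL       # step (a)
        self.POOLMAX = l0       # step (a)
        self.SEQMIN = seqmin    # current lower bound of the sequential table
        self.c = node_size

    def allocate(self):
        """Step (b): reuse a returned node if any, else carve out a new one."""
        if self.AVAIL != NULL:
            x = self.AVAIL
            self.AVAIL = self.LINK[x]
            return x
        if self.POOLMAX + self.c > self.SEQMIN:
            raise Overflow("pool would extend past SEQMIN")
        x = self.POOLMAX
        self.POOLMAX = x + self.c
        return x

    def free(self, x):
        """Step (d): AVAIL <= X, unchanged from operation (5)."""
        self.LINK[x] = self.AVAIL
        self.AVAIL = x

    def lower_seqmin(self, new_seqmin):
        """Step (c): the sequential table grows downward toward the pool."""
        if new_seqmin < self.POOLMAX:
            raise Overflow("sequential table would overlap the pool")
        self.SEQMIN = new_seqmin
```

No initial linking pass is needed: a cell joins the AVAIL list only after it has been allocated once and returned.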

Thus it is quite easy to maintain a pool of available nodes, in such a way that free nodes can efficiently be found and later returned. These methods give us a source of raw material to use in linked tables. Our discussion was predicated on the implicit assumption that all nodes have a fixed size, c; the cases that arise when different sizes of nodes are present are very important, but we will defer that discussion until Section 2.5. Now we will consider a few of the most common list operations in the special case where stacks and queues are involved.

The simplest kind of linked list is a stack. Figure 5 shows a typical stack, with a pointer T to the top of the stack. When the stack is empty, this pointer will have the value Λ.

It is clear how to insert (“push down”) new information Y onto the top of such a stack, using an auxiliary pointer variable P.

P ⇐ AVAIL,  INFO(P) ← Y,  LINK(P) ← T,  T ← P.  (8)

Conversely, to set Y equal to the information at the top of the stack and to “pop up” the stack:

If T = Λ, then UNDERFLOW; otherwise set P ← T, T ← LINK(P), Y ← INFO(P), AVAIL ⇐ P.  (9)

These operations should be compared with the analogous mechanisms for sequentially allocated stacks, (2a) and (3a) in Section 2.2.2. The reader should study (8) and (9) carefully, since they are extremely important operations.
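As a rough illustration, the push and pop operations for a linked stack might look like this in Python. The sketch assumes list-based memory with address 0 as Λ; the class `LinkedStack` and the exception names are hypothetical, and the AVAIL handling is repeated so that the block stands alone.

```python
NULL = 0  # stands in for the null link


class Overflow(Exception):
    pass


class Underflow(Exception):
    pass


class LinkedStack:
    def __init__(self, size):
        self.INFO = [None] * (size + 1)
        self.LINK = [NULL] * (size + 1)
        for i in range(1, size):       # link the free nodes together
            self.LINK[i] = i + 1
        self.AVAIL = 1 if size >= 1 else NULL
        self.T = NULL                  # empty stack: T is the null link

    def push(self, y):
        """Insert y on top of the stack, as in operation (8)."""
        if self.AVAIL == NULL:
            raise Overflow
        p = self.AVAIL                 # P <= AVAIL
        self.AVAIL = self.LINK[p]
        self.INFO[p] = y
        self.LINK[p] = self.T          # new node points to the old top
        self.T = p

    def pop(self):
        """Remove and return the top item, as in operation (9)."""
        if self.T == NULL:
            raise Underflow
        p = self.T
        self.T = self.LINK[p]
        y = self.INFO[p]
        self.LINK[p] = self.AVAIL      # AVAIL <= P
        self.AVAIL = p
        return y
```

A short usage example: after `s = LinkedStack(2)`, `s.push("a")`, and `s.push("b")`, the call `s.pop()` returns `"b"` and the freed node goes back on top of the AVAIL stack.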

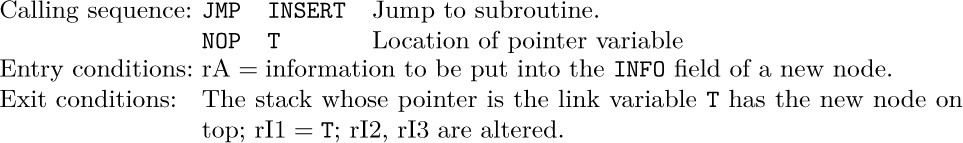

Before looking at the case of queues, let us see how the stack operations can be expressed conveniently in programs for MIX. A program for insertion, with P ≡ rI1, can be written as follows:

This takes 17 units of time, compared to 12 units for the comparable operation with a sequential table (although OVERFLOW in the sequential case would in many cases take considerably longer). In this program, as in others to follow in this chapter, OVERFLOW denotes either an ending routine or a subroutine that finds more space and returns to location rJ − 2.

A program for deletion is equally simple:

It is interesting to observe that each of these operations involves a cyclic permutation of three links. For example, in the insertion operation let P be the value of AVAIL before the insertion; if P ≠ Λ, we find that after the operation

the value of AVAIL has become the previous value of LINK(P),

the value of LINK(P) has become the previous value of T, and

the value of T has become the previous value of AVAIL.

So the insertion process (except for setting INFO(P) ← Y) is the cyclic permutation

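Similarly in the case of deletion, where P has the value of T before the operation and we assume that P ≠ Λ, we have Y ← INFO(P) and a cyclic permutation in which T takes the previous value of LINK(P), LINK(P) takes the previous value of AVAIL, and AVAIL takes the previous value of T.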

The fact that the permutation is cyclic is not really a relevant issue, since any permutation of three elements that moves every element is cyclic. The important point is rather that precisely three links are permuted in these operations.

The insertion and deletion algorithms of (8) and (9) have been described for stacks, but they apply much more generally to insertion and deletion in any linear list. Insertion, for example, is performed just before the node pointed to by link variable T. The insertion of item 2½ in (2) above would be done by using operation (8) with T = LINK(LINK(FIRST)).
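To make the role of T as an arbitrary link variable concrete, here is a small sketch. It assumes that every link variable lives in the LINK field of some memory cell, so that "T" can be named by the address of that cell; the header cell `HEAD` holding FIRST, the value −1 for Λ, and the function names are all hypothetical.

```python
NULL = -1  # stands in for the null link
HEAD = 0   # hypothetical cell whose LINK field holds the variable FIRST

INFO = [None, "item 1", "item 2", "item 3", None]
LINK = [1, 2, 3, NULL, NULL]  # LINK[HEAD] = FIRST = 1
AVAIL = 4                     # a single free node at address 4


def insert_at(t_loc, y):
    """Apply the stack insertion with T taken to be the field LINK[t_loc]."""
    global AVAIL
    if AVAIL == NULL:
        raise MemoryError("OVERFLOW: no free node")
    p = AVAIL                 # P <= AVAIL
    AVAIL = LINK[p]
    INFO[p] = y
    LINK[p] = LINK[t_loc]     # LINK(P) <- T
    LINK[t_loc] = p           # T <- P


def traverse():
    items, p = [], LINK[HEAD]
    while p != NULL:
        items.append(INFO[p])
        p = LINK[p]
    return items


first = LINK[HEAD]
# T = LINK(LINK(FIRST)) is the LINK field of the node at address LINK(FIRST),
# so that node's address is what identifies T here.
insert_at(LINK[first], "item 2.5")
result = traverse()
```

The new node lands between items 2 and 3, just before the node that T pointed to; passing `HEAD` instead would insert at the front of the list.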

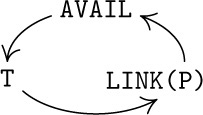

Linked allocation applies in a particularly convenient way to queues. In this case it is easy to see that the links should run from the front of the queue towards the rear, so that when a node is removed from the front, the new front node is directly specified. We will make use of pointers F and R, to the front and rear:

Except for R, this diagram is abstractly identical to Fig. 5 on page 258.

Whenever the layout of a list is designed, it is important to specify all conditions carefully, particularly for the case when the list is empty. One of the most common programming errors connected with linked allocation is the failure to handle empty lists properly; the other common error is to forget about changing some of the links when a structure is being manipulated. In order to avoid the first type of error, we should always examine the “boundary conditions” carefully. To avoid making the second type of error, it is helpful to draw “before and after” diagrams and to compare them, in order to see which links must change.

Let’s illustrate the remarks of the preceding paragraph by applying them to the case of queues. First consider the insertion operation: If (12) is the situation before insertion, the picture after insertion at the rear of the queue should be

(The notation used here implies that a new node has been obtained from the AVAIL list.) Comparing (12) and (13) shows us how to proceed when inserting the information Y at the rear of the queue:

P ⇐ AVAIL,  INFO(P) ← Y,  LINK(P) ← Λ,  LINK(R) ← P,  R ← P.  (14)

Let us now consider the “boundary” situation when the queue is empty: In this case the situation before insertion is yet to be determined, and the situation “after” is

It is desirable to have operations (14) apply in this case also, even if insertion into an empty queue means that we must change both F and R, not only R. We find that (14) will work properly if R = LOC(F) when the queue is empty, assuming that F ≡ LINK(LOC(F)); the value of variable F must be stored in the LINK field of its location if this idea is to work. In order to make the testing for an empty queue as efficient as possible, we will let F = Λ in this case. Our policy is therefore that

an empty queue is represented by F = Λ and R = LOC(F).

If the operations (14) are applied under these circumstances, we obtain (15).

The deletion operation for queues is derived in a similar fashion. If (12) is the situation before deletion, the situation afterwards is

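For the boundary conditions we must make sure that the deletion operation works when the queue is empty either before or after the operation. These considerations lead us to the following way to do queue deletion in general:

If F = Λ, then UNDERFLOW; otherwise set P ← F, F ← LINK(P), Y ← INFO(P), AVAIL ⇐ P, and if F = Λ, then set R ← LOC(F).  (17)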

Notice that R must be changed when the queue becomes empty; this is precisely the type of “boundary condition” we should always be watching for.
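The queue policy above (an empty queue has F = Λ and R = LOC(F)) can be sketched as follows. To honor the requirement that F be stored in the LINK field of its own location, this sketch keeps F in `LINK[LOC_F]`; the address `LOC_F = 0`, the value −1 for Λ, and the class and exception names are hypothetical.

```python
NULL = -1  # stands in for the null link
LOC_F = 0  # hypothetical address whose LINK field holds the variable F


class Overflow(Exception):
    pass


class Underflow(Exception):
    pass


class LinkedQueue:
    def __init__(self, size):
        self.INFO = [None] * (size + 1)
        self.LINK = [NULL] * (size + 1)
        for i in range(1, size):          # free nodes 1..size form AVAIL
            self.LINK[i] = i + 1
        self.AVAIL = 1 if size >= 1 else NULL
        self.LINK[LOC_F] = NULL           # empty queue: F is null ...
        self.R = LOC_F                    # ... and R = LOC(F)

    @property
    def F(self):
        return self.LINK[LOC_F]

    def insert(self, y):
        """Insert y at the rear; the same steps work for an empty queue."""
        if self.AVAIL == NULL:
            raise Overflow
        p = self.AVAIL                    # P <= AVAIL
        self.AVAIL = self.LINK[p]
        self.INFO[p] = y
        self.LINK[p] = NULL
        self.LINK[self.R] = p             # sets F itself when R = LOC(F)
        self.R = p

    def delete(self):
        """Remove and return the front item, resetting R if now empty."""
        if self.F == NULL:
            raise Underflow
        p = self.F
        self.LINK[LOC_F] = self.LINK[p]   # F <- LINK(P)
        y = self.INFO[p]
        self.LINK[p] = self.AVAIL         # AVAIL <= P
        self.AVAIL = p
        if self.F == NULL:
            self.R = LOC_F                # boundary condition: queue is empty
        return y
```

Omitting the final reset of R in `delete` is exactly the kind of boundary error the text warns about: the next insertion would then store its link into a node that has already been returned to the AVAIL list.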

These suggestions are not the only way to represent queues in a linearly linked fashion; exercise 30 describes a somewhat more natural alternative, and we will give other methods later in this chapter. Indeed, none of the operations above are meant to be prescribed as the only way to do something; they are intended as examples of the basic means of operating with linked lists. The reader who has had only a little previous experience with such techniques will find it helpful to reread the present section up to this point before going on.

So far in this chapter we have discussed how to perform certain operations on tables, but our discussions have always been “abstract,” in the sense that we never exhibited actual programs in which the particular techniques were useful. People aren’t generally motivated to study abstractions of a problem until they’ve seen enough special instances of the problem to arouse their interest. The operations discussed so far — manipulations of variable-size lists of information by insertion and deletion, and the use of tables as stacks or queues — are of such wide application, it is hoped that the reader will have encountered them often enough already to grant their importance. But now we will leave the realm of the abstract as we begin to study a series of significant practical examples of the techniques of this chapter.

Our first example is a problem called topological sorting, which is an important process needed in connection with network problems, with so-called PERT charts, and even with linguistics; in fact, it is of potential use whenever we have a problem involving a partial ordering. A partial ordering of a set S is a relation between the objects of S, which we may denote by the symbol “≼”, satisfying the following properties for any objects x, y, and z (not necessarily distinct) in S:

i) If x ≼ y and y ≼ z, then x ≼ z. (Transitivity.)

ii) If x ≼ y and y ≼ x, then x = y. (Antisymmetry.)

iii) x ≼ x. (Reflexivity.)

The notation x ≼ y may be read “x precedes or equals y.” If x ≼ y and x ≠ y, we write x ≺ y and say “x precedes y.” It is easy to see from (i), (ii), and (iii) that we always have

i′) If x ≺ y and y ≺ z, then x ≺ z. (Transitivity.)

ii′) If x ≺ y, then y ⊀ x. (Asymmetry.)

iii′) x ⊀ x. (Irreflexivity.)

The relation denoted by y ⊀ x means “y does not precede x.” If we start with a relation ≺ satisfying properties (i′), (ii′), and (iii′), we can reverse the process above and define x ≼ y if x ≺ y or x = y; then properties (i), (ii), and (iii) are true. Therefore we may regard either properties (i), (ii), (iii) or properties (i′), (ii′), (iii′) as the definition of partial order. Notice that property (ii′) is actually a consequence of (i′) and (iii′), although (ii) does not follow from (i) and (iii).

Partial orderings occur quite frequently in everyday life as well as in mathematics. As examples from mathematics we can mention the relation x ≤ y between real numbers x and y; the relation x ⊆ y between sets of objects; the relation x\y (x divides y) between positive integers. In the case of PERT networks, S is a set of jobs that must be done, and the relation “x ≺ y” means “x must be done before y.”
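For small finite sets, properties (i), (ii), and (iii) can be checked by brute force. The following sketch does so for the three mathematical examples just mentioned; the function name `is_partial_order` and the particular test sets are illustrative assumptions, and the check is exhaustive over a finite sample rather than a proof.

```python
from itertools import product


def is_partial_order(elements, rel):
    """Check transitivity, antisymmetry, and reflexivity of rel on elements."""
    S = list(elements)
    transitive = all(
        rel(x, z)
        for x, y, z in product(S, repeat=3)
        if rel(x, y) and rel(y, z)
    )
    antisymmetric = all(
        x == y
        for x, y in product(S, repeat=2)
        if rel(x, y) and rel(y, x)
    )
    reflexive = all(rel(x, x) for x in S)
    return transitive and antisymmetric and reflexive


def divides(x, y):
    """x divides y, for positive integers x and y."""
    return y % x == 0


numbers = range(1, 13)
subsets = [frozenset(s) for s in ([], [1], [2], [1, 2], [1, 2, 3])]

le_ok = is_partial_order(numbers, lambda x, y: x <= y)
subset_ok = is_partial_order(subsets, lambda a, b: a <= b)  # a is a subset of b
divides_ok = is_partial_order(numbers, divides)
strict_ok = is_partial_order(numbers, lambda x, y: x < y)   # not reflexive
```

The strict relation x < y fails the test because it violates (iii); it satisfies the primed properties (i′), (ii′), (iii′) instead.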