4. [13] Given the fact that log10 1000! = 2567.60464 ..., determine exactly how many decimal digits are present in the number 1000!. What is the most significant digit? What is the least significant digit?

4. [13] Given the fact that log10 1000! = 2567.60464 ..., determine exactly how many decimal digits are present in the number 1000!. What is the most significant digit? What is the least significant digit?1. [00] How many ways are there to shuffle a 52-card deck?

2. [10] In the notation of Eq. (2), show that $p_{n(n-1)}=p_{nn}$, and explain why this happens.

3. [10] What permutations of ${1, 2, 3, 4, 5}$ would be constructed from the permutation 3 1 2 4 using Methods 1 and 2, respectively?

4. [13] Given the fact that log10 1000! = 2567.60464 ..., determine exactly how many decimal digits are present in the number 1000!. What is the most significant digit? What is the least significant digit?

4. [13] Given the fact that log10 1000! = 2567.60464 ..., determine exactly how many decimal digits are present in the number 1000!. What is the most significant digit? What is the least significant digit?

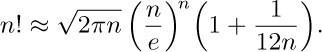

5. [15] Estimate 8! using the following more exact version of Stirling’s approximation:

6. [17] Using Eq. (8), write 20! as a product of prime factors.

6. [17] Using Eq. (8), write 20! as a product of prime factors.

7. [M10] Show that the “generalized termial” function in Eq. (10) satisfies the identity $x?=x+(x-1)$? for all real numbers x.

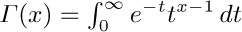

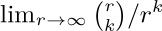

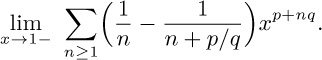

8. [HM15] Show that the limit in Eq. (13) does equal $n!$ when n is a nonnegative integer.

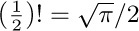

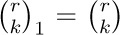

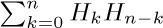

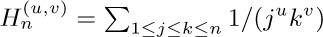

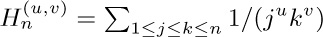

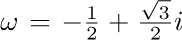

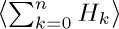

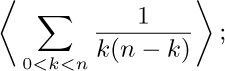

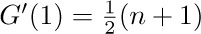

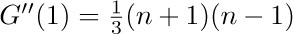

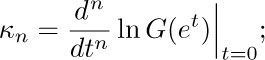

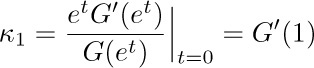

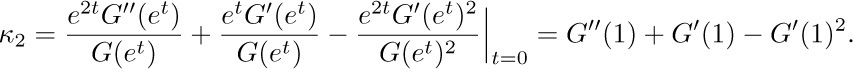

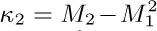

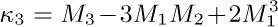

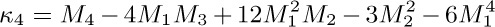

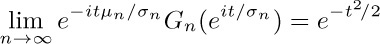

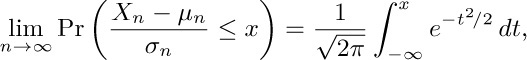

9. [M10] Determine the values of  and

and  , given that

, given that  .

.

10. [HM20] Does the identity $Γ (x+1)=xΓ (x)$ hold for all real numbers x? (See exercise 7.)

10. [HM20] Does the identity $Γ (x+1)=xΓ (x)$ hold for all real numbers x? (See exercise 7.)

11. [M15] Let the representation of n in the binary system be $n=2^{e_{1}}+2^{e_{2}}+...+2^{e_{r}}$ where $e_{1}\gt e_{2}\gt...\gt e_{r} ≥ 0$. Show that $n!$ is divisible by $2^{n-r}$ but not by $2^{n-r+1}$.

12. [M22] (A. Legendre, 1808.) Generalizing the result of the previous exercise, let p be a prime number, and let the representation of n in the p-ary number system be $n=a_{k}p^{k}+a_{k-1}p^{k-1}+...+a_{1}p+a_{0}$. Express the number μ of Eq. (8) in a simple formula involving n, p, and a’s.

12. [M22] (A. Legendre, 1808.) Generalizing the result of the previous exercise, let p be a prime number, and let the representation of n in the p-ary number system be $n=a_{k}p^{k}+a_{k-1}p^{k-1}+...+a_{1}p+a_{0}$. Express the number μ of Eq. (8) in a simple formula involving n, p, and a’s.

13. [M23] (Wilson’s theorem, actually due to Leibniz, 1682.) If p is prime, then $(p-1)!\bmod p=p-1$. Prove this, by pairing off numbers among ${1, 2,..., p-1}$ whose product modulo p is 1.

14. [M28] (L. Stickelberger, 1890.) In the notation of exercise 12, we can determine $n!\bmod p$ in terms of the p-ary representation, for any positive integer n, thus generalizing Wilson’s theorem. In fact, prove that $n!/p^{μ}≡(-1)^{μ}a_{0} ! a_{1} !... a_{k} ! (modulo p)$.

14. [M28] (L. Stickelberger, 1890.) In the notation of exercise 12, we can determine $n!\bmod p$ in terms of the p-ary representation, for any positive integer n, thus generalizing Wilson’s theorem. In fact, prove that $n!/p^{μ}≡(-1)^{μ}a_{0} ! a_{1} !... a_{k} ! (modulo p)$.

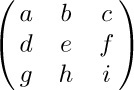

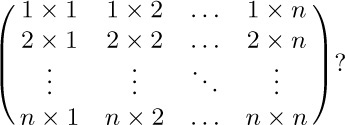

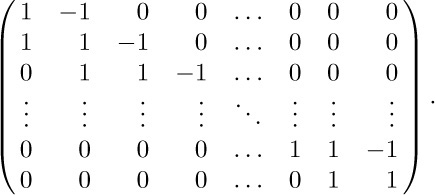

15. [HM15] The permanent of a square matrix is defined by the same expansion as the determinant except that each term of the permanent is given a plus sign while the determinant alternates between plus and minus. Thus the permanent of

is $aei+bfg+cdh+gec+hfa+idb$. What is the permanent of

16. [HM15] Show that the infinite sum in Eq. (11) does not converge unless n is a nonnegative integer.

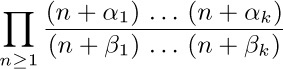

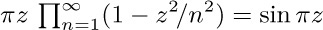

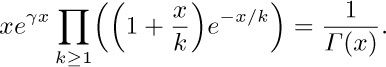

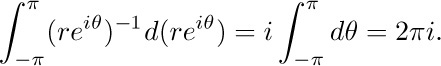

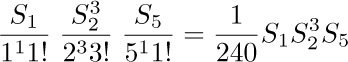

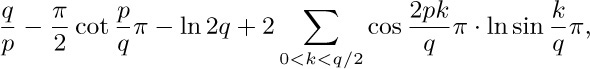

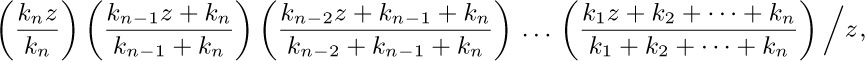

17. [HM20] Prove that the infinite product

equals $Γ (1+β_{1})... Γ (1+β_{k}) /Γ (1+α_{1})... Γ (1+α_{k}), if α_{1}+···+α_{k}=β_{1}+···+β_{k}$ and if none of the β’s is a negative integer.

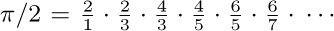

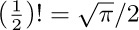

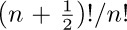

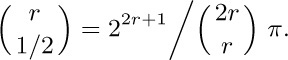

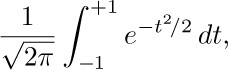

18. [M20] Assume that  . (This is “Wallis’s product,” obtained by J. Wallis in 1655, and we will prove it in exercise 1.2.6–43.) Using the previous exercise, prove that

. (This is “Wallis’s product,” obtained by J. Wallis in 1655, and we will prove it in exercise 1.2.6–43.) Using the previous exercise, prove that  .

.

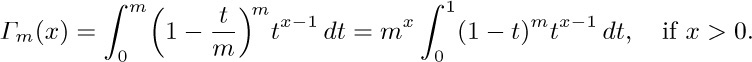

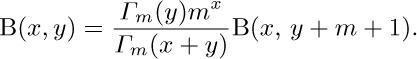

19. [HM22] Denote the quantity appearing after “$\lim_{m→∞}$” in Eq. (15) by $Γ_{m}(x)$. Show that

20. [HM21] Using the fact that $0≤e^{-t}-(1-t/m)^{m}≤t^{2}e^{-t}/m$, if 0 ≤ t ≤ m, and the previous exercise, show that  , if x > 0.

, if x > 0.

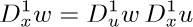

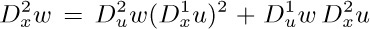

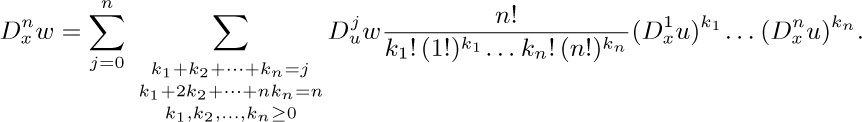

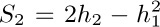

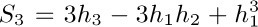

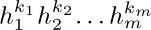

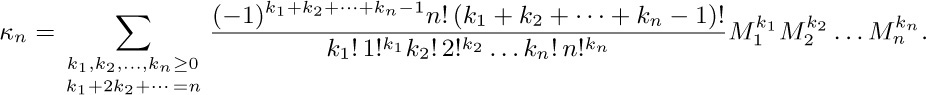

21. [HM25] (L. F. A. Arbogast, 1800.) Let  represent the kth derivative of a function u with respect to x. The chain rule states that

represent the kth derivative of a function u with respect to x. The chain rule states that  . If we apply this to second derivatives, we find

. If we apply this to second derivatives, we find  . Show that the general formula is

. Show that the general formula is

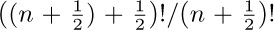

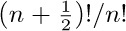

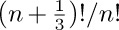

22. [HM20] Try to put yourself in Euler’s place, looking for a way to generalize n! to noninteger values of n. Since

22. [HM20] Try to put yourself in Euler’s place, looking for a way to generalize n! to noninteger values of n. Since  times

times  equals $(n+1)!/n!=n+1$, it seems natural that

equals $(n+1)!/n!=n+1$, it seems natural that  should be approximately

should be approximately  . Similarly,

. Similarly,  should be

should be  . Invent a hypothesis about the ratio $(n+x)!/n!$ as n approaches infinity. Is your hypothesis correct when x is an integer? Does it tell anything about the appropriate value of $x!$ when x is not an integer?

. Invent a hypothesis about the ratio $(n+x)!/n!$ as n approaches infinity. Is your hypothesis correct when x is an integer? Does it tell anything about the appropriate value of $x!$ when x is not an integer?

23. [HM20] Prove (16), given that  .

.

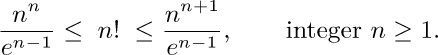

24. [HM21] Prove the handy inequalities

24. [HM21] Prove the handy inequalities

[Hint: $1+x≤e^{x}$ for all real x; hence $(k+1)/k≤e^{1/k}≤k/(k-1)$.]

25. [M20] Do factorial powers satisfy a law analogous to the ordinary law of exponents, $x^{m+n}=x^{m}x^{n}$?

The combinations of n objects taken k at a time are the possible choices of k different elements from a collection of n objects, disregarding order. The combinations of the five objects ${a, b, c, d, e}$ taken three at a time are

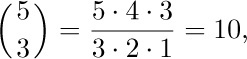

It is a simple matter to count the total number of k-combinations of n objects: Equation (2) of the previous section told us that there are $n(n-1)... (n-k+1)$ ways to choose the first k objects for a permutation; and every k-combination appears exactly $k!$ times in these arrangements, since each combination appears in all its permutations. Therefore the number of combinations, which we denote by  , is

, is

For example,

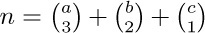

which is the number of combinations we found in (1).

The quantity  , read “n choose k,” is called a binomial coefficient; these numbers have an extraordinary number of applications. They are probably the most important quantities entering into the analysis of algorithms, so the reader is urged to become familiar with them.

, read “n choose k,” is called a binomial coefficient; these numbers have an extraordinary number of applications. They are probably the most important quantities entering into the analysis of algorithms, so the reader is urged to become familiar with them.

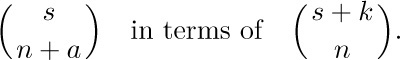

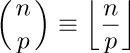

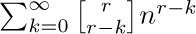

Equation (2) may be used to define  even when n is not an integer. To be precise, we define the symbol

even when n is not an integer. To be precise, we define the symbol  for all real numbers r and all integers k as follows:

for all real numbers r and all integers k as follows:

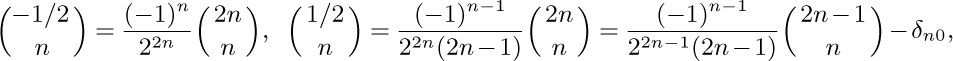

In particular cases we have

Table 1 gives values of the binomial coefficients for small integer values of r and k; the values for 0 ≤ r ≤ 4 should be memorized.

Binomial coefficients have a long and interesting history. Table 1 is called “Pascal’s triangle” because it appeared in Blaise Pascal’s Traité du Triangle Arithmétique in 1653. This treatise was significant because it was one of the first works on probability theory, but Pascal did not invent the binomial coefficients (which were well-known in Europe at that time). Table 1 also appeared in the treatise Szu-yüan Yü-chien (“The Precious Mirror of the Four Elements”) by the Chinese mathematician Chu Shih-Chieh in 1303, where they were said to be an old invention. Yang Hui, in 1261, credited them to Chia Hsien (c. 1100), whose work is now lost. The earliest known detailed discussion of binomial coefficients is in a tenth-century commentary, due to Halāyudha, on an ancient Hindu classic, Pi gala’s Chanda

gala’s Chanda śāstra. [See G. Chakravarti, Bull. Calcutta Math. Soc. 24 (1932), 79–88.] Another Indian mathematician, Mahāvīra, had previously explained rule (3) for computing

śāstra. [See G. Chakravarti, Bull. Calcutta Math. Soc. 24 (1932), 79–88.] Another Indian mathematician, Mahāvīra, had previously explained rule (3) for computing  in Chapter 6 of his Gan. ita Sāra Sa

in Chapter 6 of his Gan. ita Sāra Sa graha, written about 850; and in 1150 Bhāskara repeated Mahāvīra’s rule near the end of his famous book Līlāvatī. For small values of k, binomial coefficients were known much earlier; they appeared in Greek and Roman writings with a geometric interpretation (see Fig. 8). The notation

graha, written about 850; and in 1150 Bhāskara repeated Mahāvīra’s rule near the end of his famous book Līlāvatī. For small values of k, binomial coefficients were known much earlier; they appeared in Greek and Roman writings with a geometric interpretation (see Fig. 8). The notation  was introduced by Andreas von Ettingshausen in §31 of his book Die combinatorische Analysis (Vienna: 1826).

was introduced by Andreas von Ettingshausen in §31 of his book Die combinatorische Analysis (Vienna: 1826).

The reader has probably noticed several interesting patterns in Table 1. Binomial coefficients satisfy literally thousands of identities, and for centuries their amazing properties have been continually explored. In fact, there are so many relations present that when someone finds a new identity, not many people get excited about it any more, except the discoverer. In order to manipulate the formulas that arise in the analysis of algorithms, a facility for handling binomial coefficients is a must, and so an attempt has been made in this section to explain in a simple way how to maneuver with these numbers. Mark Twain once tried to reduce all jokes to a dozen or so primitive kinds (farmer’s daughter, mother-in-law, etc.); we will try to condense the thousands of identities into a small set of basic operations with which we can solve nearly every problem involving binomial coefficients that we will meet.

In most applications, both of the numbers r and k that appear in  will be integers, and some of the techniques we will describe are applicable only in such cases. Therefore we will be careful to list, at the right of each numbered equation, any restrictions on the variables that appear. For example, Eq. (3) mentions the requirement that k is an integer; there is no restriction on r. The identities with fewest restrictions are the most useful.

will be integers, and some of the techniques we will describe are applicable only in such cases. Therefore we will be careful to list, at the right of each numbered equation, any restrictions on the variables that appear. For example, Eq. (3) mentions the requirement that k is an integer; there is no restriction on r. The identities with fewest restrictions are the most useful.

Now let us study the basic techniques for operating on binomial coefficients:

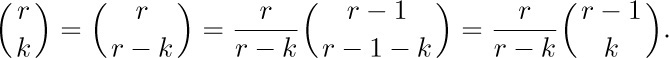

A. Representation by factorials. From Eq. (3) we have immediately

This allows combinations of factorials to be represented as binomial coefficients and conversely.

B. Symmetry condition. From Eqs. (3) and (5), we have

This formula holds for all integers k. When k is negative or greater than n, the binomial coefficient is zero (provided that n is a nonnegative integer).

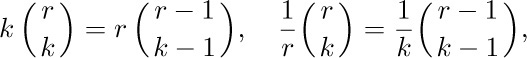

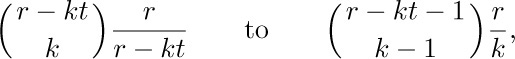

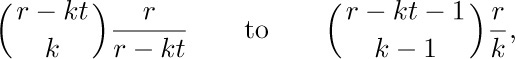

C. Moving in and out of parentheses. From the definition (3), we have

This formula is very useful for combining a binomial coefficient with other parts of an expression. By elementary transformation we have the rules

the first of which is valid for all integers k, and the second when no division by zero has been performed. We also have a similar relation:

Let us illustrate these transformations, by proving Eq. (8) using Eqs. (6) and (7) alternately:

[Note: This derivation is valid only when r is a positive integer ≠ k, because of the constraints involved in Eqs. (6) and (7); yet Eq. (8) claims to be valid for arbitrary r ≠ k. This can be proved in a simple and important manner: We have verified that

for infinitely many values of r. Both sides of this equation are polynomials in r. A nonzero polynomial of degree n can have at most n distinct zeros; so (by subtraction) if two polynomials of degree ≤ n agree at n + 1 or more different points, the polynomials are identically equal. This principle may be used to extend the validity of many identities from integers to all real numbers.]

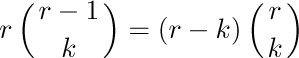

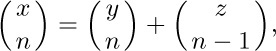

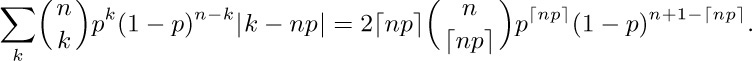

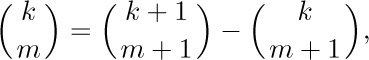

D. Addition formula. The basic relation

is clearly valid in Table 1 (every value is the sum of the two values above and to the left) and we may easily verify it in general from Eq. (3). Alternatively, Eqs. (7) and (8) tell us that

Equation (9) is often useful in obtaining proofs by induction on r, when r is an integer.

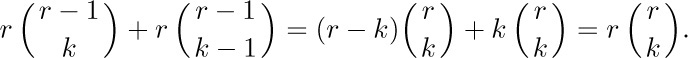

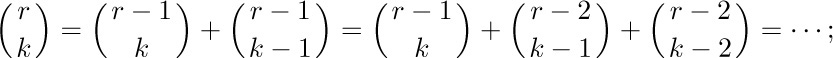

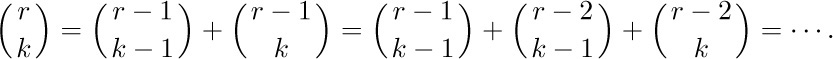

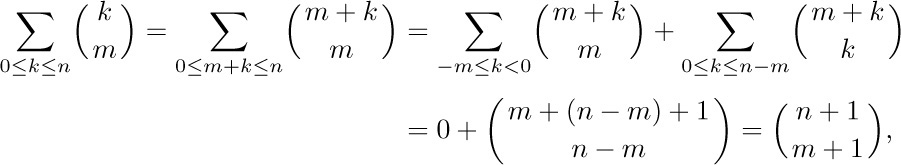

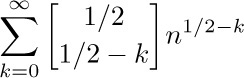

E. Summation formulas. Repeated application of (9) gives

or

Thus we are led to two important summation formulas that can be expressed as follows:

Equation (11) can easily be proved by induction on n, but it is interesting to see how it can also be derived from Eq. (10) with two applications of Eq. (6):

assuming that n ≥ m. If n < m, Eq. (11) is obvious.

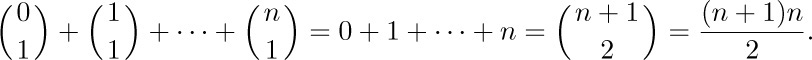

Equation (11) occurs very frequently in applications; in fact, we have already derived special cases of it in previous sections. For example, when m = 1, we have our old friend, the sum of an arithmetic progression:

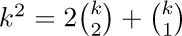

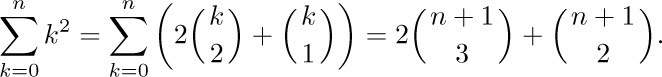

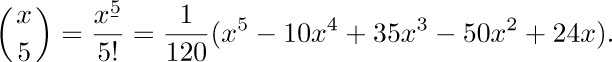

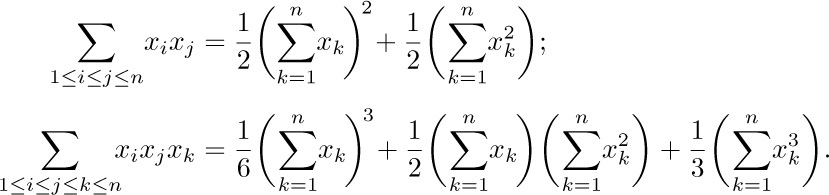

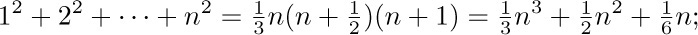

Suppose that we want a simple formula for the sum $1^{2}+2^{2}+···+n^{2}$. This can be obtained by observing that  ; hence

; hence

And this answer, obtained in terms of binomial coefficients, can be put back into polynomial notation if desired:

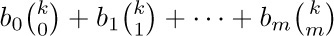

The sum $1^{3}+2^{3}+···+n^{3}$ can be obtained in a similar way; any polynomial $a_{0}+a_{1}k+a_{2}k^{2}+···+a_{m}k^{m}$ can be expressed as  for suitably chosen coefficients $b_{0},..., b_{m}$. We will return to this subject later.

for suitably chosen coefficients $b_{0},..., b_{m}$. We will return to this subject later.

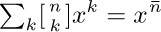

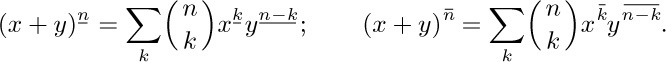

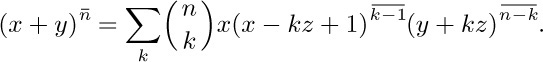

F. The binomial theorem. Of course, the binomial theorem is one of our principal tools:

For example, $(x+y)^{4}=x^{4}+4x^{3}y+6x^{2}y^{2}+4xy^{3}+y^{4}$. (At last we are able to justify the name “binomial coefficient” for the numbers  .)

.)

It is important to notice that we have written ∑k in Eq. (13), rather than  as might have been expected. If no restriction is placed on k, we are summing over all integers, $-∞\lt k\lt +∞$; but the two notations are exactly equivalent in this case, since the terms in Eq. (13) are zero when k < 0 or k > r. The simpler form $∑_{k}$ is to be preferred, since all manipulations with sums are simpler when the conditions of summation are simpler. We save a good deal of tedious effort if we do not need to keep track of the lower and/or upper limits of summation, so the limits should be left unspecified whenever possible. Our notation has another advantage also: If r is not a nonnegative integer, Eq. (13) becomes an infinite sum, and the binomial theorem of calculus states that Eq. (13) is valid for all r, if |x/y| < 1.

as might have been expected. If no restriction is placed on k, we are summing over all integers, $-∞\lt k\lt +∞$; but the two notations are exactly equivalent in this case, since the terms in Eq. (13) are zero when k < 0 or k > r. The simpler form $∑_{k}$ is to be preferred, since all manipulations with sums are simpler when the conditions of summation are simpler. We save a good deal of tedious effort if we do not need to keep track of the lower and/or upper limits of summation, so the limits should be left unspecified whenever possible. Our notation has another advantage also: If r is not a nonnegative integer, Eq. (13) becomes an infinite sum, and the binomial theorem of calculus states that Eq. (13) is valid for all r, if |x/y| < 1.

It should be noted that formula (13) gives

We will use this convention consistently.

The special case y = 1 in Eq. (13) is so important, we state it specially:

The discovery of the binomial theorem was announced by Isaac Newton in letters to Oldenburg on June 13, 1676 and October 24, 1676. [See D. Struik, Source Book in Mathematics (Harvard Univ. Press, 1969), 284–291.] But he apparently had no real proof of the formula; at that time the necessity for rigorous proof was not fully realized. The first attempted proof was given by L. Euler in 1774, although his effort was incomplete. Finally, C. F. Gauss gave the first actual proof in 1812. In fact, Gauss’s work represented the first time anything about infinite sums was proved satisfactorily.

Early in the nineteenth century, N. H. Abel found a surprising generalization of the binomial formula (13):

This is an identity in three variables, x, y, and z (see exercises 50 through 52). Abel published and proved this formula in Volume 1 of A. L. Crelle’s soon-to-be-famous Journal für die reine und angewandte Mathematik (1826), pages 159–160. It is interesting to note that Abel contributed many other papers to the same Volume 1, including his famous memoirs on the unsolvability of algebraic equations of degree 5 or more by radicals, and on the binomial theorem. See H. W. Gould, AMM 69 (1962), 572, for a number of references to Eq. (16).

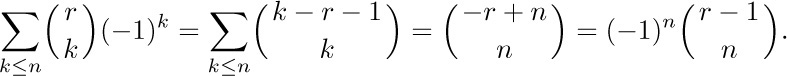

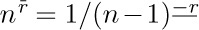

G. Negating the upper index. The basic identity

follows immediately from the definition (3) when each term of the numerator is negated. This is often a useful transformation on the upper index.

One easy consequence of Eq. (17) is the summation formula

This identity could be proved by induction using Eq. (9), but we can use Eqs. (17) and (10) directly:

Another important application of Eq. (17) can be made when r is an integer:

(Set r = n and k = n − m in Eq. (17) and use (6).) We have moved n from the upper position to the lower.

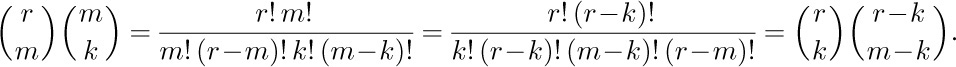

H. Simplifying products. When products of binomial coefficients appear, they can usually be reexpressed in several different ways by expanding into factorials and out again using Eq. (5). For example,

It suffices to prove Eq. (20) when r is an integer ≥ m (see the remarks after Eq. (8)), and when 0 ≤ k ≤ m. Then

Equation (20) is very useful when an index (namely m) appears in both the upper and the lower position, and we wish to have it appear in one place rather than two. Notice that Eq. (7) is the special case of Eq. (20) when k = 1.

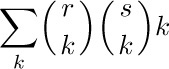

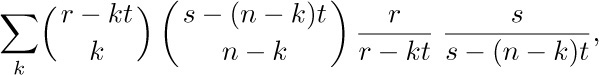

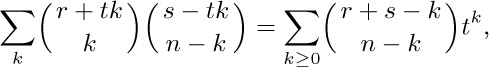

I. Sums of products. To complete our set of binomial-coefficient manipulations, we present the following very general identities, which are proved in the exercises at the end of this section. These formulas show how to sum over a product of two binomial coefficients, considering various places where the running variable k might appear:

Of these identities, Eq. (21) is by far the most important, and it should be memorized. One way to remember it is to interpret the right-hand side as the number of ways to select n people from among r men and s women; each term on the left is the number of ways to choose k of the men and n − k of the women. Equation (21) is commonly called Vandermonde’s convolution, since A. Vandermonde published it in Mém. Acad. Roy. Sciences (Paris, 1772), part 1, 489–498. However, it had appeared already in Chu Shih-Chieh’s 1303 treatise mentioned earlier [see J. Needham, Science and Civilisation in China 3 (Cambridge University Press, 1959), 138–139].

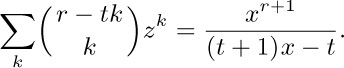

If $r=tk$ in Eq. (26), we avoid the zero denominator by canceling with a factor in the numerator; therefore Eq. (26) is a polynomial identity in the variables r, s, t. Obviously Eq. (21) is a special case of Eq. (26) with t = 0.

We should point out a nonobvious use of Eqs. (23) and (25): It is often helpful to replace the simple binomial coefficient on the right-hand side by the more complicated expression on the left, interchange the order of summation, and simplify. We may regard the left-hand sides as expansions of

Formula (23) is used for negative a, formula (25) for positive a.

This completes our study of binomial-coefficientology. The reader is advised to learn especially Eqs. (5), (6), (7), (9), (13), (17), (20), and (21) — frame them with your favorite highlighter pen!

With all these methods at our disposal, we should be able to solve almost any problem that comes along, in at least three different ways. The following examples illustrate the techniques.

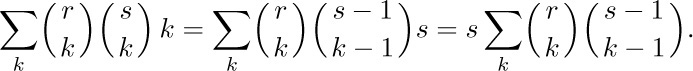

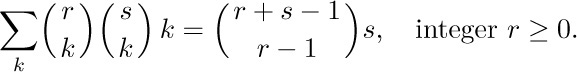

Example 1. When r is a positive integer, what is the value of

Solution. Formula (7) is useful for disposing of the outside k:

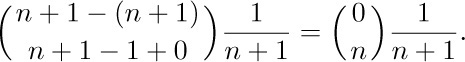

Now formula (22) applies, with m = 0 and n = −1. The answer is therefore

Example 2. What is the value of  , if n is a nonnegative integer?

, if n is a nonnegative integer?

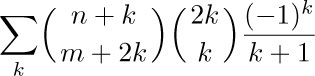

Solution. This problem is tougher; the summation index k appears in six places! First we apply Eq. (20), and we obtain

We can now breathe more easily, since several of the menacing characteristics of the original formula have disappeared. The next step should be obvious; we apply Eq. (7) in a manner similar to the technique used in Example 1:

Good, another k has vanished. At this point there are two equally promising lines of attack. We can replace the  by

by  , assuming that k ≥ 0, and evaluate the sum with Eq. (23):

, assuming that k ≥ 0, and evaluate the sum with Eq. (23):

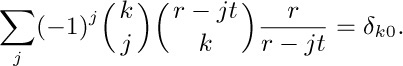

The binomial coefficient  equals zero except when n = 0, in which case it equals one. So we can conveniently state the answer to our problem as [n = 0], using Iverson’s convention (Eq. 1.2.3–(16)), or as δn0, using the Kronecker delta (Eq. 1.2.3–(19)).

equals zero except when n = 0, in which case it equals one. So we can conveniently state the answer to our problem as [n = 0], using Iverson’s convention (Eq. 1.2.3–(16)), or as δn0, using the Kronecker delta (Eq. 1.2.3–(19)).

Another way to proceed from Eq. (27) is to use Eq. (17), obtaining

We can now apply Eq. (22), which yields the sum

Once again we have derived the answer:

Example 3. What is the value of  , for positive integers m and n?

, for positive integers m and n?

Solution. If m were zero, we would have the same formula to work with that we had in Example 2. However, the presence of m means that we cannot even begin to use the method of the previous solution, since the first step there was to use Eq. (20) — which no longer applies. In this situation it pays to complicate things even more by replacing the unwanted  by a sum of terms of the form

by a sum of terms of the form  , since our problem will then become a sum of problems that we know how to solve. Accordingly, we use Eq. (25) with

, since our problem will then become a sum of problems that we know how to solve. Accordingly, we use Eq. (25) with

$r=n+k-1$, $m=2k$, $s=0$, $n=m-1$,

and we have

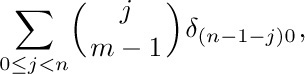

We wish to perform the summation on k first; but interchanging the order of summation demands that we sum on the values of k that are ≥ 0 and $≥ j-n+1$. Unfortunately, the latter condition raises problems, because we do not know the desired sum if j ≥ n. Let us save the situation, however, by observing the terms of (29) are zero when $n≤j≤n+k-1$. This condition implies that k ≥ 1; thus $0≤n+k-1-j≤k-1\lt2k$, and the first binomial coefficient in (29) will vanish. We may therefore replace the condition on the second sum by 0 ≤ j < n, and the interchange of summation is routine. Summing on k by Eq. (28) now gives

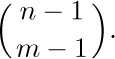

and all terms are zero except when j = n − 1. Hence our final answer is

The solution to this problem was fairly complicated, but not really mysterious; there was a good reason for each step. The derivation should be studied closely because it illustrates some delicate maneuvering with the conditions in our equations. There is actually a better way to attack this problem, however; it is left to the reader to figure out a way to transform the given sum so that Eq. (26) applies (see exercise 30).

where $A_{n}(x, t)$ is the nth degree polynomial in x that satisfies

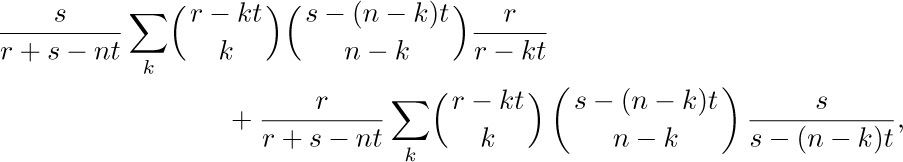

Solution. We may assume that $r ≠ kt ≠ s$ for 0 ≤ k ≤ n, since both sides of (30) are polynomials in r, s, t. Our problem is to evaluate

which, if anything, looks much worse than our previous horrible problems! Notice the strong similarity to Eq. (26), however, and also note the case t = 0.

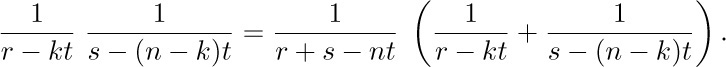

We are tempted to change

except that the latter tends to lose the analogy with Eq. (26) and it fails when k = 0. A better way to proceed is to use the technique of partial fractions, whereby a fraction with a complicated denominator can often be replaced by a sum of fractions with simpler denominators. Indeed, we have

Putting this into our sum we get

and Eq. (26) evaluates both of these formulas if we change k to n − k in the second; the desired result follows immediately. Identities (26) and (30) are due to H. A. Rothe, Formulæ de Serierum Reversione (Leipzig: 1793); special cases of these formulas are still being “discovered” frequently. For the interesting history of these identities and some generalizations, see H. W. Gould and J. Kaucký, Journal of Combinatorial Theory 1 (1966), 233–247.

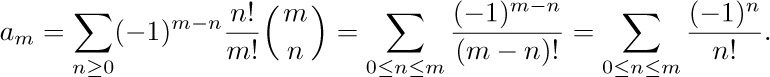

Example 5. Determine the values of $a_{0}, a_{1}, a_{2},...$ such that

for all nonnegative integers n.

Solution. Equation 1.2.5–(11), which was presented without proof in the previous section, gives the answer. Let us pretend that we don’t know it yet. It is clear that the problem does have a solution, since we can set n = 0 and determine $a_{0}$, then set n = 1 and determine $a_{1}$, etc.

First we would like to write Eq. (31) in terms of binomial coefficients:

The problem of solving implicit equations like this for $a_{k}$ is called the inversion problem, and the technique we shall use applies to similar problems as well.

The idea is based on the special case s = 0 of Eq. (23):

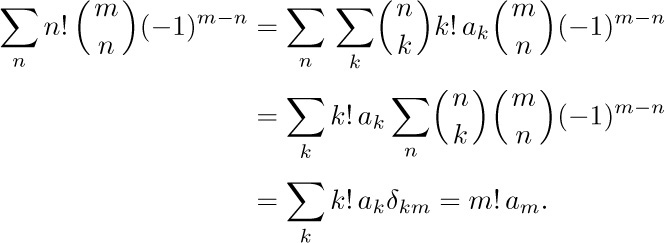

The importance of this formula is that when n ≠ r, the sum is zero; this enables us to solve our problem since a lot of terms cancel out as they did in Example 3:

Notice how we were able to get an equation in which only one value $a_{m}$ appears, by adding together suitable multiples of Eq. (32) for n = 0, 1, ... . We have now

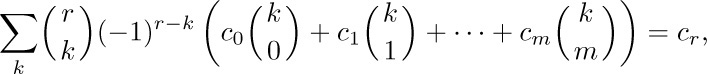

This completes the solution to Example 5. Let us now take a closer look at the implications of Eq. (33): When r and m are nonnegative integers we have

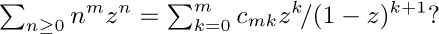

since the other terms vanish after summation. By properly choosing the coefficients $c_{i}$, we can represent any polynomial in k as a sum of binomial coefficients with upper index k. We find therefore that

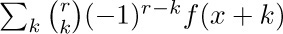

where $b_{0}+···+b_{r}k^{r}$ represents any polynomial whatever of degree r or less. (This formula will be of no great surprise to students of numerical analysis, since  is the “rth difference” of the function $f(x)$.)

is the “rth difference” of the function $f(x)$.)

Using Eq. (34), we can immediately obtain many other relations that appear complicated at first and that are often given very lengthy proofs, such as

It is customary in textbooks such as this to give a lot of impressive examples of neat tricks, etc., but never to mention simple-looking problems where the techniques fail. The examples above may have given the impression that all things are possible with binomial coefficients; it should be mentioned, however, that in spite of Eqs. (10), (11), and (18), there seems to be no simple formula for the analogous sum

when n < m. (For n = m the answer is simple; what is it? See exercise 36.)

On the other hand this sum does have a closed form as a function of n when m is an explicit negative integer; for example,

There is also a simple formula

for a sum that looks as though it should be harder, not easier.

How can we decide when to stop working on a sum that resists simplification? Fortunately, there is now a good way to answer that question in many important cases: An algorithm due to R. W. Gosper and D. Zeilberger will discover closed forms in binomial coefficients when they exist, and will prove the impossibility when they do not exist. The Gosper–Zeilberger algorithm is beyond the scope of this book, but it is explained in CMath §5.8. See also the book A = B by Petkovšek, Wilf, and Zeilberger (Wellesley, Mass.: A. K. Peters, 1996).

The principal tool for dealing with sums of binomial coefficients in a systematic, mechanical way is to exploit the properties of hypergeometric functions, which are infinite series defined as follows in terms of rising factorial powers:

An introduction to these important functions can be found in Sections 5.5 and 5.6 of CMath. See also J. Dutka, Archive for History of Exact Sciences 31 (1984), 15–34, for historical references.

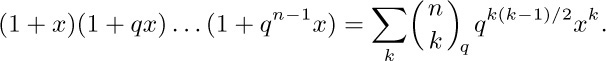

The concept of binomial coefficients has several significant generalizations, which we should discuss briefly. First, we can consider arbitrary real values of the lower index k in  ; see exercises 40 through 45. We also have the generalization

; see exercises 40 through 45. We also have the generalization

which becomes the ordinary binomial coefficient  when q approaches the limiting value 1; this can be seen by dividing each term in numerator and denominator by $1-q$. The basic properties of such “q-nomial coefficients” are discussed in exercise 58.

when q approaches the limiting value 1; this can be seen by dividing each term in numerator and denominator by $1-q$. The basic properties of such “q-nomial coefficients” are discussed in exercise 58.

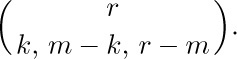

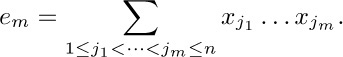

However, for our purposes the most important generalization is the multinomial coefficient

The chief property of multinomial coefficients is the generalization of Eq. (13):

It is important to observe that any multinomial coefficient can be expressed in terms of binomial coefficients:

so we may apply the techniques that we already know for manipulating binomial coefficients. Both sides of Eq. (20) are the trinomial coefficient

For approximations valid when n is large, see L. Moser and M. Wyman, J. London Math. Soc. 33 (1958), 133–146; Duke Math. J. 25 (1958), 29–43; D. E. Barton, F. N. David, and M. Merrington, Biometrika 47 (1960), 439–445; 50 (1963), 169–176; N. M. Temme, Studies in Applied Math. 89 (1993), 233–243; H. S. Wilf, J. Combinatorial Theory A64 (1993), 344–349; H.-K. Hwang, J. Combinatorial Theory A71 (1995), 343–351.

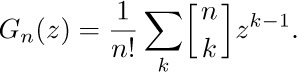

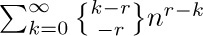

We conclude this section with a brief analysis of the transformation from a polynomial expressed in powers of x to a polynomial expressed in binomial coefficients. The coefficients involved in this transformation are called Stirling numbers, and these numbers arise in the study of numerous algorithms.

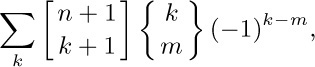

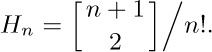

Stirling numbers come in two flavors: We denote Stirling numbers of the first kind by  , and those of the second kind by

, and those of the second kind by  . These notations, due to Jovan Karamata [Mathematica (Cluj) 9 (1935), 164–178], have compelling advantages over the many other symbolisms that have been tried [see D. E. Knuth, AMM 99 (1992), 403–422]. We can remember the curly braces in

. These notations, due to Jovan Karamata [Mathematica (Cluj) 9 (1935), 164–178], have compelling advantages over the many other symbolisms that have been tried [see D. E. Knuth, AMM 99 (1992), 403–422]. We can remember the curly braces in  because curly braces denote sets, and

because curly braces denote sets, and  is the number of ways to partition a set of n elements into k disjoint subsets (exercise 64). The other Stirling numbers

is the number of ways to partition a set of n elements into k disjoint subsets (exercise 64). The other Stirling numbers  also have a combinatorial interpretation, which we will study in Section 1.3.3:

also have a combinatorial interpretation, which we will study in Section 1.3.3:  is the number of permutations on n letters having k cycles.

is the number of permutations on n letters having k cycles.

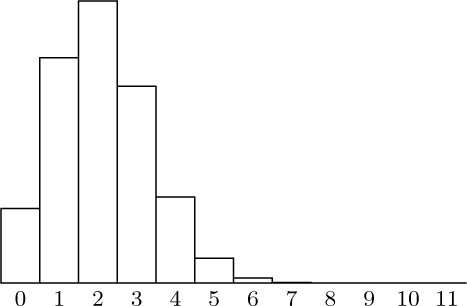

Table 2 displays Stirling’s triangles, which are in some ways analogous to Pascal’s triangle.

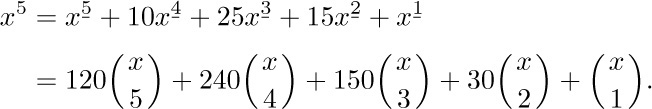

Stirling numbers of the first kind are used to convert from factorial powers to ordinary powers:

For example, from Table 2,

Stirling numbers of the second kind are used to convert from ordinary powers to factorial powers:

This formula was, in fact, Stirling’s original reason for studying the numbers  in his Methodus Differentialis (London: 1730). From Table 2 we have, for example,

in his Methodus Differentialis (London: 1730). From Table 2 we have, for example,

We shall now list the most important identities involving Stirling numbers. In these equations, the variables m and n always denote nonnegative integers.

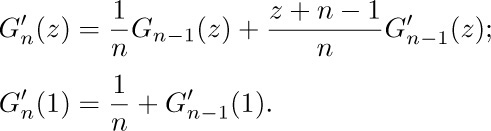

Addition formulas:

Inversion formulas (compare with Eq. (33)):

Special values:

Expansion formulas:

Some other fundamental Stirling number identities appear in exercises 1.2.6–61 and 1.2.7–6, and in Eqs. (23), (26), (27), and (28) of Section 1.2.9.

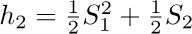

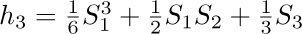

Eq. (49) is just one instance of a general phenomenon: Both kinds of Stirling numbers  and

and  are polynomials in n of degree $2m$, whenever m is a nonnegative integer. For example, the formulas for m = 2 and m = 3 are

are polynomials in n of degree $2m$, whenever m is a nonnegative integer. For example, the formulas for m = 2 and m = 3 are

Therefore it makes sense to define the numbers  and

and  for arbitrary real (or complex) values of r. With this generalization, the two kinds of Stirling numbers are united by an interesting duality law

for arbitrary real (or complex) values of r. With this generalization, the two kinds of Stirling numbers are united by an interesting duality law

which was implicit in Stirling’s original discussion. Moreover, Eq. (45) remains true in general, in the sense that the infinite series

converges whenever the real part of z is positive. The companion formula, Eq. (44), generalizes in a similar way to an asymptotic (but not convergent) series:

(See exercise 65.) Sections 6.1, 6.2, and 6.5 of CMath contain additional information about Stirling numbers and how to manipulate them in formulas. See also exercise 4.7–21 for a general family of triangles that includes Stirling numbers as a very special case.

Exercises

1. [00] How many combinations of n things taken n − 1 at a time are possible?

2. [00] What is  ?

?

3. [00] How many bridge hands (13 cards out of a 52-card deck) are possible?

4. [10] Give the answer to exercise 3 as a product of prime numbers.

5. [05] Use Pascal’s triangle to explain the fact that 114 = 14641.

5. [05] Use Pascal’s triangle to explain the fact that 114 = 14641.

6. [10] Pascal’s triangle (Table 1) can be extended in all directions by use of the addition formula, Eq. (9). Find the three rows that go on top of Table 1 (i.e., for r = −1, −2, and −3).

6. [10] Pascal’s triangle (Table 1) can be extended in all directions by use of the addition formula, Eq. (9). Find the three rows that go on top of Table 1 (i.e., for r = −1, −2, and −3).

7. [12] If n is a fixed positive integer, what value of k makes  a maximum?

a maximum?

8. [00] What property of Pascal’s triangle is reflected in the “symmetry condition,” Eq. (6)?

9. [01] What is the value of  ? (Consider all integers n.)

? (Consider all integers n.)

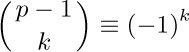

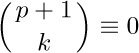

10. [M25] If p is prime, show that:

10. [M25] If p is prime, show that:

a) $ (modulo p)$.

(modulo p)$.

b) $ (modulo p)$, for $1≤k≤p-1$.

(modulo p)$, for $1≤k≤p-1$.

c) $ (modulo p)$, for $0≤k≤p-1$.

(modulo p)$, for $0≤k≤p-1$.

d) $ (modulo p)$, for $2≤k≤p-1$.

(modulo p)$, for $2≤k≤p-1$.

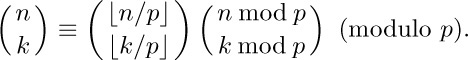

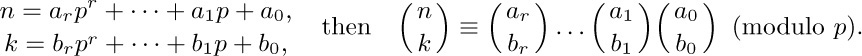

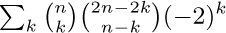

e) (É. Lucas, 1877.)

f) If the p-ary number system representations of n and k are

11. [M20] (E. Kummer, 1852.) Let p be prime. Show that if $p^{n}$ divides

11. [M20] (E. Kummer, 1852.) Let p be prime. Show that if $p^{n}$ divides

but pn+1 does not, then n is equal to the number of carries that occur when a is added to b in the p-ary number system. [Hint: See exercise 1.2.5–12.]

12. [M22] Are there any positive integers n for which all the nonzero entries in the nth row of Pascal’s triangle are odd? If so, find all such n.

13. [M13] Prove the summation formula, Eq. (10).

14. [M21] Evaluate  .

.

15. [M15] Prove the binomial formula, Eq. (13).

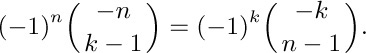

16. [M15] Given that n and k are positive integers, prove the symmetrical identity

17. [M18] Prove the Chu–Vandermonde formula (21) from Eq. (15), using the idea that $(1+x)^{r+s}=(1+x)^{r} (1+x)^{s}$.

17. [M18] Prove the Chu–Vandermonde formula (21) from Eq. (15), using the idea that $(1+x)^{r+s}=(1+x)^{r} (1+x)^{s}$.

18. [M15] Prove Eq. (22) using Eqs. (21) and (6).

19. [M18] Prove Eq. (23) by induction.

20. [M20] Prove Eq. (24) by using Eqs. (21) and (19), then show that another use of Eq. (19) yields Eq. (25).

21. [M05] Both sides of Eq. (25) are polynomials in s; why isn’t that equation an identity in s?

21. [M05] Both sides of Eq. (25) are polynomials in s; why isn’t that equation an identity in s?

22. [M20] Prove Eq. (26) for the special case $s=n-1-r+nt$.

23. [M13] Assuming that Eq. (26) holds for $(r, s, t, n)$ and $(r, s-t, t, n-1)$, prove it for $(r, s+1, t, n)$.

24. [M15] Explain why the results of the previous two exercises combine to give a proof of Eq. (26).

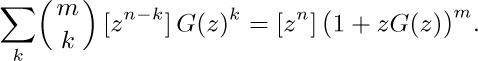

25. [HM30] Let the polynomial $A_{n}(x,t)$ be defined as in Example 4 (see Eq. (30)). Let $z=x^{t+1}-x^{t}$. Prove that $∑_{k}A_{k}(r,t)z^{k}=x^{r}$, provided that x is close enough to 1. [Note: If t = 0, this result is essentially the binomial theorem, and this equation is an important generalization of that theorem. The binomial theorem (15) may be assumed in the proof.] Hint: Start with multiples of a special case of (34),

26. [HM25] Using the assumptions of the previous exercise, prove that

27. [HM21] Solve Example 4 in the text by using the result of exercise 25; and prove Eq. (26) from the preceding two exercises. [Hint: See exercise 17.]

28. [M25] Prove that

if n is a nonnegative integer.

29. [M20] Show that Eq. (34) is just a special case of the general identity proved in exercise 1.2.3–33.

30. [M24] Show that there is a better way to solve Example 3 than the way used in the text, by manipulating the sum so that Eq. (26) applies.

30. [M24] Show that there is a better way to solve Example 3 than the way used in the text, by manipulating the sum so that Eq. (26) applies.

31. [M20] Evaluate

31. [M20] Evaluate

in terms of r, s, m, and n, given that m and n are integers. Begin by replacing

32. [M20] Show that  , where

, where  is the rising factorial power defined in Eq. 1.2.5–(19).

is the rising factorial power defined in Eq. 1.2.5–(19).

33. [M20] (A. Vandermonde, 1772.) Show that the binomial formula is valid also when it involves factorial powers instead of the ordinary powers. In other words, prove that

34. [M23] (Torelli’s sum.) In the light of the previous exercise, show that Abel’s generalization, Eq. (16), of the binomial formula is true also for rising powers:

35. [M23] Prove the addition formulas (46) for Stirling numbers directly from the definitions, Eqs. (44) and (45).

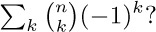

36. [M10] What is the sum  of the numbers in each row of Pascal’s triangle? What is the sum of these numbers with alternating signs,

of the numbers in each row of Pascal’s triangle? What is the sum of these numbers with alternating signs,

37. [M10] From the answers to the preceding exercise, deduce the value of the sum of every other entry in a row,  .

.

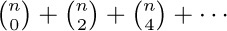

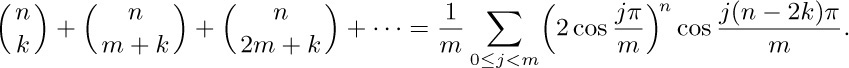

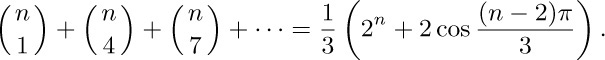

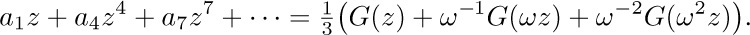

38. [HM30] (C. Ramus, 1834.) Generalizing the result of the preceding exercise, show that we have the following formula, given that 0 ≤ k < m:

For example,

[Hint: Find the right combinations of these coefficients multiplied by mth roots of unity.] This identity is particularly remarkable when m ≥ n.

39. [M10] What is the sum  of the numbers in each row of Stirling’s first triangle? What is the sum of these numbers with alternating signs? (See exercise 36.)

of the numbers in each row of Stirling’s first triangle? What is the sum of these numbers with alternating signs? (See exercise 36.)

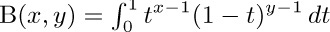

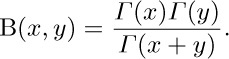

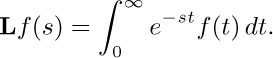

40. [HM17] The beta function {\rm B}(x, y) is defined for positive real numbers x, y by the formula

a) Show that ${\rm B}(x, 1)={\rm B}(1, x)=1/x$.

b) Show that ${\rm B}(x+1, y)+{\rm B}(x, y+1)={\rm B}(x, y)$.

c) Show that ${\rm B}(x, y)=((x+y)/y) {\rm B}(x, y+1)$.

41. [HM22] We proved a relation between the gamma function and the beta function in exercise 1.2.5–19, by showing that $Γ_{m} (x)=m^{x} {\rm B}(x, m+1)$, if m is a positive integer.

a) Prove that

b) Show that

42. [HM10] Express the binomial coefficient  in terms of the beta function defined above. (This gives us a way to extend the definition to all real values of k.)

in terms of the beta function defined above. (This gives us a way to extend the definition to all real values of k.)

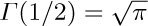

43. [HM20] Show that ${\rm B}(1/2, 1/2)=π$. (From exercise 41 we may now conclude that  .)

.)

44. [HM20] Using the generalized binomial coefficient suggested in exercise 42, show that

45. [HM21] Using the generalized binomial coefficient suggested in exercise 42, find  .

.

46. [M21] Using Stirling’s approximation, Eq. 1.2.5–(7), find an approximate value of

46. [M21] Using Stirling’s approximation, Eq. 1.2.5–(7), find an approximate value of  , assuming that both x and y are large. In particular, find the approximate size of

, assuming that both x and y are large. In particular, find the approximate size of  when n is large.

when n is large.

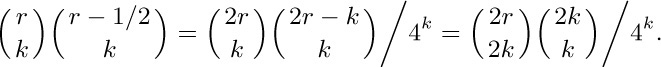

47. [M21] Given that k is an integer, show that

Give a simpler formula for the special case $r=-1/2$.

48. [M25] Show that

48. [M25] Show that

if the denominators are not zero. [Note that this formula gives us the reciprocal of a binomial coefficient, as well as the partial fraction expansion of $1/x(x+1)... (x+n)$.]

49. [M20] Show that the identity $(1+x)^{r}=(1-x^{2})^{r} (1-x)^{-r}$ implies a relation on binomial coefficients.

50. [M20] Prove Abel’s formula, Eq. (16), in the special case x + y = 0.

51. [M21] Prove Abel’s formula, Eq. (16), by writing $y=(x+y)-x$, expanding the right-hand side in powers of $(x+y)$, and applying the result of the previous exercise.

52. [HM11] Prove that Abel’s binomial formula (16) is not always valid when n is not a nonnegative integer, by evaluating the right-hand side when n = x = −1, y = z = 1.

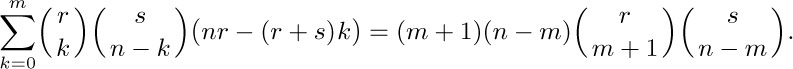

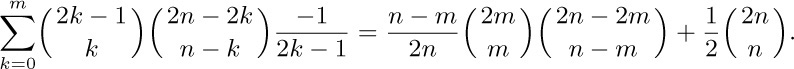

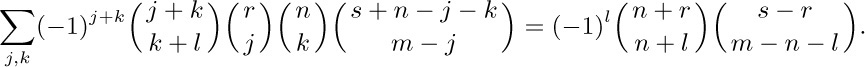

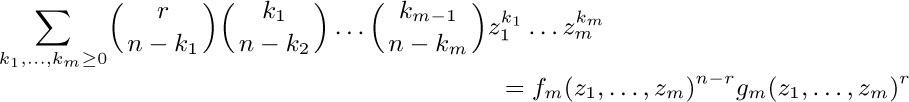

53. [M25] (a) Prove the following identity by induction on m, where m and n are integers:

(b) Making use of important relations from exercise 47,

show that the following formula can be obtained as a special case of the identity in part (a):

(This result is considerably more general than Eq. (26) in the case r = −1, s = 0, t = − 2.)

54. [M21] Consider Pascal’s triangle (as shown in Table 1) as a matrix. What is the inverse of that matrix?

55. [M21] Considering each of Stirling’s triangles (Table 2) as matrices, determine their inverses.

56. [20] (The combinatorial number system.) For each integer $n=0, 1, 2,..., 20$, find three integers a, b, c for which  and a > b > c ≥ 0. Can you see how this pattern can be continued for higher values of n?

and a > b > c ≥ 0. Can you see how this pattern can be continued for higher values of n?

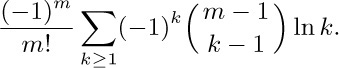

57. [M22] Show that the coefficient a_{m} in Stirling’s attempt at generalizing the factorial function, Eq. 1.2.5–(12), is

57. [M22] Show that the coefficient a_{m} in Stirling’s attempt at generalizing the factorial function, Eq. 1.2.5–(12), is

58. [M23] (H. A. Rothe, 1811.) In the notation of Eq. (40), prove the “q-nomial theorem”:

Also find q-nomial generalizations of the fundamental identities (17) and (21).

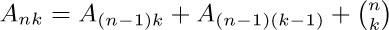

59. [M25] A sequence of numbers $Ank$, $n ≥ 0$, $k ≥ 0$, satisfies the relations $An0=1$, $A0k=δ0k, $ for $nk\gt0$. Find $Ank$.

$ for $nk\gt0$. Find $Ank$.

60. [M23] We have seen that

60. [M23] We have seen that  is the number of combinations of n things, k at a time, namely the number of ways to choose k different things out of a set of n. The combinations with repetitions are similar to ordinary combinations, except that we may choose each object any number of times. Thus, the list (1) would be extended to include also aaa, aab, aac, aad, aae, abb, etc., if we were considering combinations with repetition. How many k-combinations of n objects are there, if repetition is allowed?

is the number of combinations of n things, k at a time, namely the number of ways to choose k different things out of a set of n. The combinations with repetitions are similar to ordinary combinations, except that we may choose each object any number of times. Thus, the list (1) would be extended to include also aaa, aab, aac, aad, aae, abb, etc., if we were considering combinations with repetition. How many k-combinations of n objects are there, if repetition is allowed?

61. [M25] Evaluate the sum

thereby obtaining a companion formula for Eq. (55).

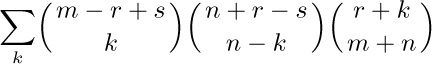

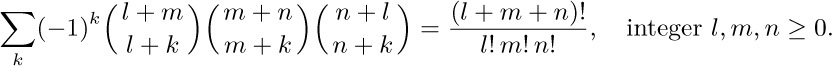

62. [M23] The text gives formulas for sums involving a product of two binomial coefficients. Of the sums involving a product of three binomial coefficients, the following one and the identity of exercise 31 seem to be most useful:

62. [M23] The text gives formulas for sums involving a product of two binomial coefficients. Of the sums involving a product of three binomial coefficients, the following one and the identity of exercise 31 seem to be most useful:

(The sum includes both positive and negative values of k.) Prove this identity. [Hint: There is a very short proof, which begins by applying the result of exercise 31.]

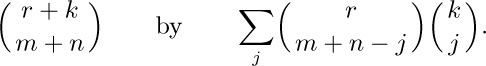

63. [M30] If l, m, and n are integers and n ≥ 0, prove that

64. [M20] Show that

64. [M20] Show that  is the number of ways to partition a set of n elements into m nonempty disjoint subsets. For example, the set $\{1, 2, 3, 4\}$ can be partitioned into two subsets in

is the number of ways to partition a set of n elements into m nonempty disjoint subsets. For example, the set $\{1, 2, 3, 4\}$ can be partitioned into two subsets in  ways: $\{1, 2, 3\}\{4\}$; $\{1, 2, 4\}\{3\}$; $\{1, 3, 4\}\{2\}$; $\{2, 3, 4\}\{1\}$; $\{1, 2\}\{3, 4\}$; $\{1, 3\}\{2, 4\}$; $\{1, 4\}\{2, 3\}$. Hint: Use Eq. (46).

ways: $\{1, 2, 3\}\{4\}$; $\{1, 2, 4\}\{3\}$; $\{1, 3, 4\}\{2\}$; $\{2, 3, 4\}\{1\}$; $\{1, 2\}\{3, 4\}$; $\{1, 3\}\{2, 4\}$; $\{1, 4\}\{2, 3\}$. Hint: Use Eq. (46).

65. [HM35] (B. F. Logan.) Prove Eqs. (59) and (60).

66. [HM30] Suppose x, y, and z are real numbers satisfying

where $x ≥ n-1$, $y ≥ n-1$, $z\gt n-2$, and n is an integer ≥ 2. Prove that

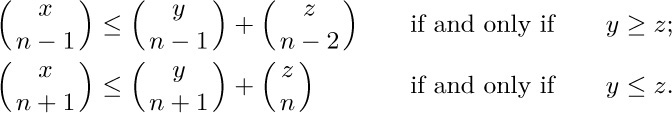

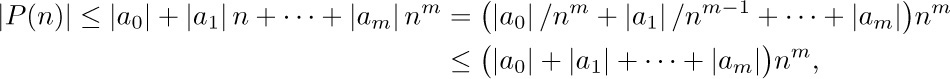

67. [M20] We often need to know that binomial coefficients aren’t too large. Prove the easy-to-remember upper bound

67. [M20] We often need to know that binomial coefficients aren’t too large. Prove the easy-to-remember upper bound

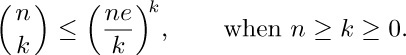

68. [M25] (A. de Moivre.) Prove that, if n is a nonnegative integer,

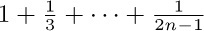

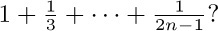

This sum does not occur very frequently in classical mathematics, and there is no standard notation for it; but in the analysis of algorithms it pops up nearly every time we turn around, and we will consistently call it $H_{n}$. Besides $H_{n}$, the notations $h_{n}$ and $S_{n}$ and $Ψ(n+1)+γ$ are found in mathematical literature. The letter H stands for “harmonic,” and we speak of $H_{n}$ as a harmonic number because (1) is customarily called the harmonic series. Chinese bamboo strips written before 186 B.C. already explained how to compute $H10=7381/2520$, as an exercise in arithmetic. [See C. Cullen, Historia Math. 34 (2007), 10–44.]

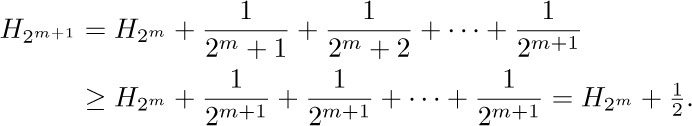

It may seem at first that $H_{n}$ does not get too large when n has a large value, since we are always adding smaller and smaller numbers. But actually it is not hard to see that $H_{n}$ will get as large as we please if we take n to be big enough, because

This lower bound follows from the observation that, for m ≥ 0, we have

So as m increases by 1, the left-hand side of (2) increases by at least  .

.

It is important to have more detailed information about the value of $H_{n}$ than is given in Eq. (2). The approximate size of $H_{n}$ is a well-known quantity (at least in mathematical circles) that may be expressed as follows:

Here $γ=0.5772156649...$ is Euler’s constant, introduced by Leonhard Euler in Commentarii Acad. Sci. Imp. Pet. 7 (1734), 150–161. Exact values of $H_{n}$ for small n, and a 40-place value for γ, are given in the tables in Appendix A. We shall derive Eq. (3) in Section 1.2.11.2.

Thus $H_{n}$ is reasonably close to the natural logarithm of n. Exercise 7(a) demonstrates in a simple way that $H_{n}$ has a somewhat logarithmic behavior.

In a sense, $H_{n}$ just barely goes to infinity as n gets large, because the similar sum

stays bounded for all n, when r is any real-valued exponent greater than unity. (See exercise 3.) We denote the sum in Eq. (4) by  .

.

When the exponent r in Eq. (4) is at least 2, the value of  is fairly close to its maximum value

is fairly close to its maximum value  , except for very small n. The quantity

, except for very small n. The quantity  is very well known in mathematics as Riemann’s zeta function:

is very well known in mathematics as Riemann’s zeta function:

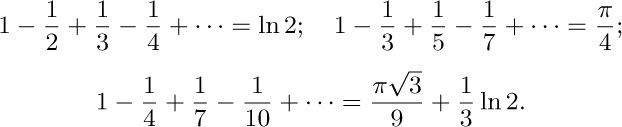

If r is an even integer, the value of $ζ (r)$ is known to be equal to

where $B_{r}$ is a Bernoulli number (see Section 1.2.11.2 and Appendix A). In particular,

These results are due to Euler; for discussion and proof, see CMath, §6.5.

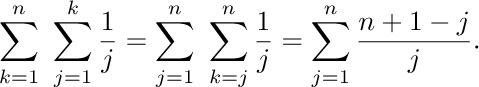

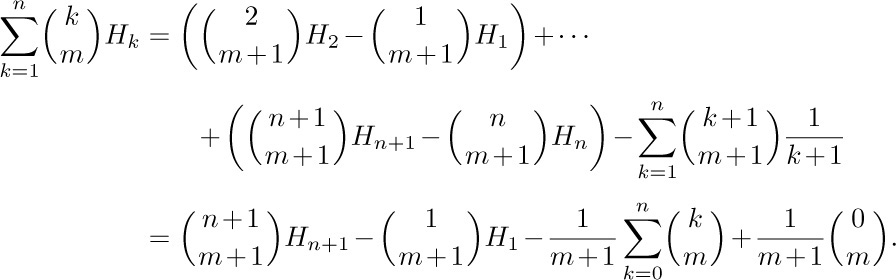

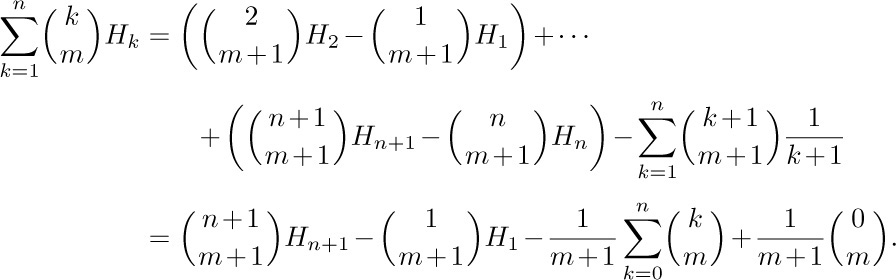

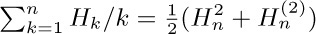

Now we will consider a few important sums that involve harmonic numbers. First,

This follows from a simple interchange of summation:

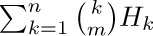

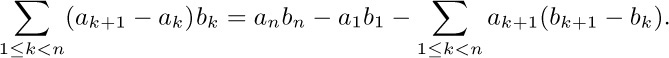

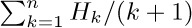

Formula (8) is a special case of the sum  , which we will now determine using an important technique called summation by parts (see exercise 10). Summation by parts is a useful way to evaluate ∑ a_{k}b_{k} whenever the quantities $∑ a_{k}$ and $(b_{k+1}-b_{k})$ have simple forms. We observe in this case that

, which we will now determine using an important technique called summation by parts (see exercise 10). Summation by parts is a useful way to evaluate ∑ a_{k}b_{k} whenever the quantities $∑ a_{k}$ and $(b_{k+1}-b_{k})$ have simple forms. We observe in this case that

and therefore

hence

Applying Eq. 1.2.6–(11) yields the desired formula:

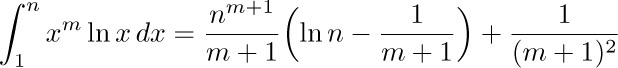

(This derivation and its final result are analogous to the evaluation of

using what calculus books call integration by parts.)

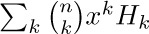

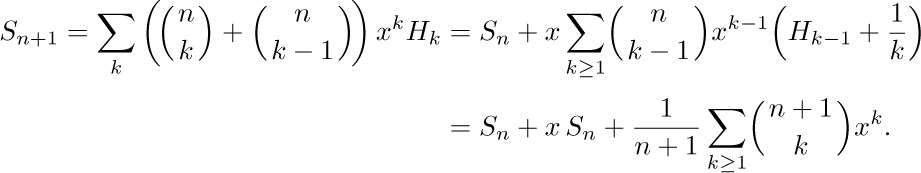

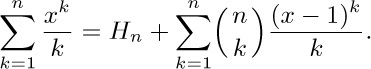

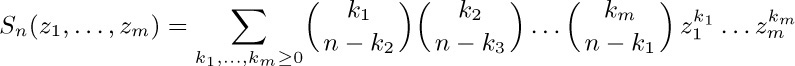

We conclude this section by considering a different kind of sum,  , which we will temporarily denote by $S_{n}$ for brevity. We find that

, which we will temporarily denote by $S_{n}$ for brevity. We find that

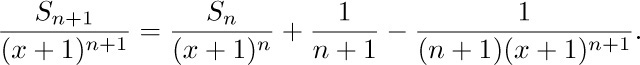

Hence $S_{n+1}=(x+1)S_{n}+((x+1)^{n+1}-1)/(n+1)$, and we have

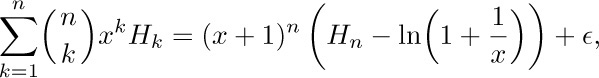

This equation, together with the fact that $S_{1}=x$, shows us that

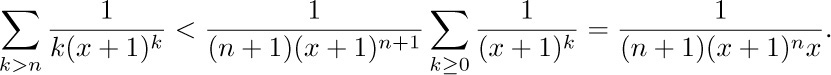

The new sum is part of the infinite series 1.2.9–(17) for $\ln(1/(1-1/(x+1)))=\ln(1+1/x)$, and when x > 0, the series is convergent; the difference is

This proves the following theorem:

where 0 < ∊ < 1/(x(n + 1)).

Exercises

1. [01] What are $H_{0}$, $H_{1}$, and $H_{2}$?

2. [13] Show that the simple argument used in the text to prove that $H2m ≥ 1+m/2$ can be slightly modified to prove that $H2m≤1+m$.

3. [M21] Generalize the argument used in the previous exercise to show that, for r > 1, the sum  remains bounded for all n. Find an upper bound.

remains bounded for all n. Find an upper bound.

4. [10] Decide which of the following statements are true for all positive integers n: (a) $H_{n}\lt\ln n$. (b) $H_{n}\gt\ln n$. (c) $H_{n}\gt\ln n+γ$.

4. [10] Decide which of the following statements are true for all positive integers n: (a) $H_{n}\lt\ln n$. (b) $H_{n}\gt\ln n$. (c) $H_{n}\gt\ln n+γ$.

5. [15] Give the value of $H_{10000}$ to 15 decimal places, using the tables in Appendix A.

6. [M15] Prove that the harmonic numbers are directly related to Stirling’s numbers, which were introduced in the previous section; in fact,

7. [M21] Let $T (m, n)=H_{m}+H_{n}-H_{mn}$. (a) Show that when m or n increases, $T (m, n)$ never increases (assuming that m and n are positive). (b) Compute the minimum and maximum values of $T (m, n)$ for m, n > 0.

8. [HM18] Compare Eq. (8) with  ln k; estimate the difference as a function of n.

ln k; estimate the difference as a function of n.

9. [M18] Theorem A applies only when x > 0; what is the value of the sum considered when $x=-1$?

9. [M18] Theorem A applies only when x > 0; what is the value of the sum considered when $x=-1$?

10. [M20] (Summation by parts.) We have used special cases of the general method of summation by parts in exercise 1.2.4–42 and in the derivation of Eq. (9). Prove the general formula

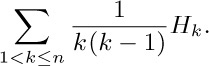

11. [M21] Using summation by parts, evaluate

11. [M21] Using summation by parts, evaluate

12. [M10] Evaluate

12. [M10] Evaluate  correct to at least 100 decimal places.

correct to at least 100 decimal places.

13. [M22] Prove the identity

(Note in particular the special case x = 0, which gives us an identity related to exercise 1.2.6–48.)

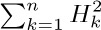

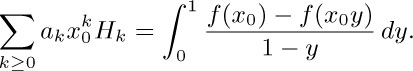

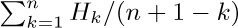

14. [M22] Show that  , and evaluate

, and evaluate  .

.

15. [M23] Express

15. [M23] Express  in terms of n and $H_{n}$.

in terms of n and $H_{n}$.

16. [18] Express the sum  in terms of harmonic numbers.

in terms of harmonic numbers.

17. [M24] (E. Waring, 1782.) Let p be an odd prime. Show that the numerator of $H_{p-1}$ is divisible by p.

18. [M33] (J. Selfridge.) What is the highest power of 2 that divides the numerator of

19. [M30] List all nonnegative integers n for which $H_{n}$ is an integer. [Hint: If $H_{n}$ has odd numerator and even denominator, it cannot be an integer.]

19. [M30] List all nonnegative integers n for which $H_{n}$ is an integer. [Hint: If $H_{n}$ has odd numerator and even denominator, it cannot be an integer.]

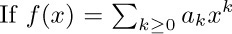

20. [HM22] There is an analytic way to approach summation problems such as the one leading to Theorem A in this section: If  , and this series converges for $x=x_{0}$, prove that

, and this series converges for $x=x_{0}$, prove that

21. [M24] Evaluate  .

.

22. [M28] Evaluate  .

.

23. [HM20] By considering the function $Γ′(x)/Γ(x)$, generalize $H_{n}$ to noninteger values of n. You may use the fact that $Γ′(1)=-γ$, anticipating the next exercise.

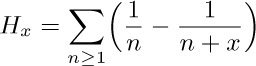

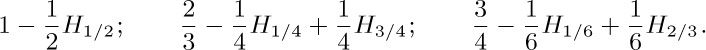

23. [HM20] By considering the function $Γ′(x)/Γ(x)$, generalize $H_{n}$ to noninteger values of n. You may use the fact that $Γ′(1)=-γ$, anticipating the next exercise.

24. [HM21] Show that

(Consider the partial products of this infinite product.)

25. [M21] Let  . What are

. What are  and

and  Prove the general identity

Prove the general identity  .

.

The sequence

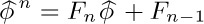

in which each number is the sum of the preceding two, plays an important role in at least a dozen seemingly unrelated algorithms that we will study later. The numbers in the sequence are denoted by $F_{n}$, and we formally define them as

This famous sequence was published in 1202 by Leonardo Pisano (Leonardo of Pisa), who is sometimes called Leonardo Fibonacci (Filius Bonaccii, son of Bonaccio). His Liber Abaci (Book of the Abacus) contains the following exercise: “How many pairs of rabbits can be produced from a single pair in a year’s time?” To solve this problem, we are told to assume that each pair produces a new pair of offspring every month, and that each new pair becomes fertile at the age of one month. Furthermore, the rabbits never die. After one month there will be 2 pairs of rabbits; after two months, there will be 3; the following month the original pair and the pair born during the first month will both usher in a new pair and there will be 5 in all; and so on.

Fibonacci was by far the greatest European mathematician of the Middle Ages. He studied the work of al-Khwārizmī (after whom “algorithm” is named, see Section 1.1) and he added numerous original contributions to arithmetic and geometry. The writings of Fibonacci were reprinted in 1857 [B. Boncompagni, Scritti di Leonardo Pisano (Rome, 1857–1862), 2 vols.; $F_{n}$ appears in Vol. 1, 283– 285]. His rabbit problem was, of course, not posed as a practical application to biology and the population explosion; it was an exercise in addition. In fact, it still makes a rather good computer exercise about addition (see exercise 3); Fibonacci wrote: “It is possible to do [the addition] in this order for an infinite number of months.”

Before Fibonacci wrote his work, the sequence $\langle{F_{n}}\rangle$ had already been discussed by Indian scholars, who had long been interested in rhythmic patterns that are formed from one-beat and two-beat notes or syllables. The number of such rhythms having n beats altogether is $F_{n+1}$; therefore both Gopāla (before 1135) and Hemacandra (c. 1150) mentioned the numbers 1, 2, 3, 5, 8, 13, 21, 34, ... explicitly. [See P. Singh, Historia Math. 12 (1985), 229–244; see also exercise 4.5.3–32.]

The same sequence also appears in the work of Johannes Kepler, 1611, who was musing about the numbers he saw around him [J. Kepler, The Six-Cornered Snowflake (Oxford: Clarendon Press, 1966), 21]. Kepler was presumably unaware of Fibonacci’s brief mention of the sequence. Fibonacci numbers have often been observed in nature, probably for reasons similar to the original assumptions of the rabbit problem. [See Conway and Guy, The Book of Numbers (New York: Copernicus, 1996), 113–126, for an especially lucid explanation.]

A first indication of the intimate connections between $F_{n}$ and algorithms came to light in 1837, when É. Léger used Fibonacci’s sequence to study the efficiency of Euclid’s algorithm. He observed that if the numbers m and n in Algorithm 1.1E are not greater than F_{k}, step E2 will be executed at most k − 1 times. This was the first practical application of Fibonacci’s sequence. (See Theorem 4.5.3F.) During the 1870s the mathematician É. Lucas obtained very profound results about the Fibonacci numbers, and in particular he used them to prove that the 39-digit number $2^{127}-1$ is prime. Lucas gave the name “Fibonacci numbers” to the sequence $\langle{F_{n}}\rangle$, and that name has been used ever since.

We already have examined the Fibonacci sequence briefly in Section 1.2.1 (Eq. (3) and exercise 4), where we found that $φ^{n-2}≤F_{n}≤φ^{n-1}$ if n is a positive integer and if

We will see shortly that this quantity, φ, is intimately connected with the Fibonacci numbers.

The number φ itself has a very interesting history. Euclid called it the “extreme and mean ratio”; the ratio of A to B is the ratio of A + B to A, if the ratio of A to B is φ. Renaissance writers called it the “divine proportion”; and in the last century it has commonly been called the “golden ratio.” Many artists and writers have said that the ratio of φ to 1 is the most aesthetically pleasing proportion, and their opinion is confirmed from the standpoint of computer programming aesthetics as well. For the story of φ, see the excellent article “The Golden Section, Phyllotaxis, and Wythoff’s Game,” by H. S. M. Coxeter, Scripta Math. 19 (1953), 135–143; see also Chapter 8 of The 2nd Scientific American Book of Mathematical Puzzles and Diversions, by Martin Gardner (New York: Simon and Schuster, 1961). Several popular myths about φ have been debunked by George Markowsky in College Math. J. 23 (1992), 2–19. The fact that the ratio Fn+1/F_{n} approaches φ was known to the early European reckoning master Simon Jacob, who died in 1564 [see P. Schreiber, Historia Math. 22 (1995), 422–424].

The notations we are using in this section are a little undignified. In much of the sophisticated mathematical literature, $F_{n}$ is called $u_{n}$ instead, and φ is called $τ$. Our notations are almost universally used in recreational mathematics (and some crank literature!) and they are rapidly coming into wider use. The designation φ comes from the name of the Greek artist Phidias who is said to have used the golden ratio in his sculpture. [See T. A. Cook, The Curves of Life (1914), 420.] The notation $F_{n}$ is in accordance with that used in the Fibonacci Quarterly, where the reader may find numerous facts about the Fibonacci sequence. A good reference to the classical literature about $F_{n}$ is Chapter 17 of L. E. Dickson’s History of the Theory of Numbers 1 (Carnegie Inst. of Washington, 1919).

The Fibonacci numbers satisfy many interesting identities, some of which appear in the exercises at the end of this section. One of the most commonly discovered relations, mentioned by Kepler in a letter he wrote in 1608 but first published by J. D. Cassini [Histoire Acad. Roy. Paris 1 (1680), 201], is

which is easily proved by induction. A more esoteric way to prove the same formula starts with a simple inductive proof of the matrix identity

We can then take the determinant of both sides of this equation.

Relation (4) shows that $F_{n}$ and $F_{n+1}$ are relatively prime, since any common divisor would have to be a divisor of $(-1)^{n}$.

From the definition (2) we find immediately that

$F_{n+3}=F_{n+2}+F_{n+1}=2F_{n+1}+F_{n}$; $F_{n+4}=3F_{n+1}+2F_{n}$;

and, in general, by induction that

for any positive integer m.

If we take m to be a multiple of n in Eq. (6), we find inductively that

$F_{nk}$ is a multiple of $F_{n}$.

Thus every third number is even, every fourth number is a multiple of 3, every fifth is a multiple of 5, and so on.

In fact, much more than this is true. If we write $\gcd(m, n)$ to stand for the greatest common divisor of m and n, a rather surprising theorem emerges:

Theorem A (É. Lucas, 1876). A number divides both $F_{m}$ and $F_{n}$ if and only if it is a divisor of $F_{d}$, where $d=\gcd(m, n)$; in particular,

Proof. This result is proved by using Euclid’s algorithm. We observe that because of Eq. (6) any common divisor of $F_{m}$ and $F_{n}$ is also a divisor of $F_{n+m}$; and, conversely, any common divisor of $F_{n+m}$ and $F_{n}$ is a divisor of $F_{m} F_{n+1}$. Since $F_{n+1}$ is relatively prime to $F_{n}$, a common divisor of $F_{n+m}$ and $F_{n}$ also divides $F_{m}$. Thus we have proved that, for any number d,

We will now show that any sequence $\langle{F_{n}}\rangle$ for which statement (8) holds, and for which $F_{0}=0$, satisfies Theorem A.

First it is clear that statement (8) may be extended by induction on k to the rule

d divides $F_{m}$ and $F_{n}$ if and only if d divides $F_{m+kn}$ and $F_{n}$,

where k is any nonnegative integer. This result may be stated more succinctly:

Now if r is the remainder after division of m by n, that is, if $r=m\bmod n$, then the common divisors of $\{F_{m}, F_{n}\}$ are the common divisors of $\{F_{n}, F_{r}\}$. It follows that throughout the manipulations of Algorithm 1.1E the set of common divisors of $\{F_{m}, F_{n}\}$ remains unchanged as m and n change; finally, when r = 0, the common divisors are simply the divisors of $F_{0}=0$ and $F_{\gcd(m,n)}$.

Most of the important results involving Fibonacci numbers can be deduced from the representation of $F_{n}$ in terms of φ, which we now proceed to derive. The method we shall use in the following derivation is extremely important, and the mathematically oriented reader should study it carefully; we will study the same method in detail in the next section.

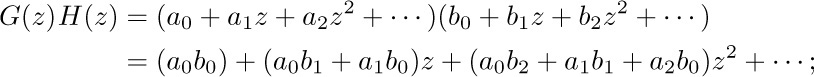

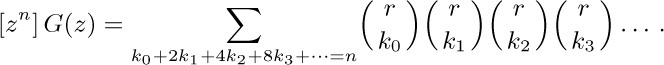

We start by setting up the infinite series

We have no a priori reason to expect that this infinite sum exists or that the function $G(z)$ is at all interesting — but let us be optimistic and see what we can conclude about the function $G(z)$ if it does exist. The advantage of such a procedure is that $G(z)$ is a single quantity that represents the entire Fibonacci sequence at once; and if we find out that $G(z)$ is a “known” function, its coefficients can be determined. We call $G(z)$ the generating function for the sequence $\langle{F_{n}}\rangle$.

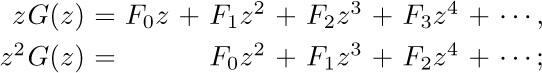

We can now proceed to investigate $G(z)$ as follows:

by subtraction, therefore,

All terms but the second vanish because of the definition of $F_{n}$, so this expression equals z. Therefore we see that, if $G(z)$ exists,

In fact, this function can be expanded in an infinite series in z (a Taylor series); working backwards we find that the coefficients of the power series expansion of Eq. (11) must be the Fibonacci numbers.

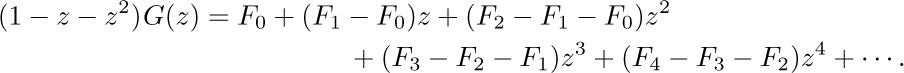

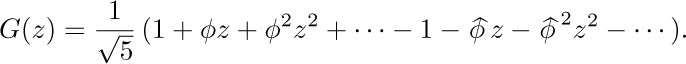

We can now manipulate $G(z)$ and find out more about the Fibonacci sequence. The denominator $1-z-z^{2}$ is a quadratic polynomial with the two roots  ; after a little calculation we find that $G(z)$ can be expanded by the method of partial fractions into the form

; after a little calculation we find that $G(z)$ can be expanded by the method of partial fractions into the form

where

The quantity $1/(1-φz)$ is the sum of the infinite geometric series $1+φz+φ^{2} z^{2}+···$, so we have

We now look at the coefficient of $z^{n}$, which must be equal to $F_{n}$; hence

This is an important closed form expression for the Fibonacci numbers, first discovered early in the eighteenth century. (See D. Bernoulli, Comment. Acad. Sci. Petrop. 3 (1728), 85–100, §7; see also A. de Moivre, Philos. Trans. 32 (1722), 162–178, who showed how to solve general linear recurrences in essentially the way we have derived (14).)

We could have merely stated Eq. (14) and proved it by induction. However, the point of the rather long derivation above was to show how it would be possible to discover the equation in the first place, using the important method of generating functions, which is a valuable technique for solving a wide variety of problems.

Many things can be proved from Eq. (14). First we observe that $\phihat$ is a negative number $(-0.61803...)$ whose magnitude is less than unity, so  gets very small as n gets large. In fact, the quantity

gets very small as n gets large. In fact, the quantity  is always small enough so that we have

is always small enough so that we have

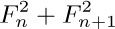

Other results can be obtained directly from $G(z)$; for example,

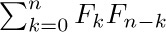

and the coefficient of $z^{n}$ in $G(z)^{2}$ is  . We deduce therefore that

. We deduce therefore that

(The second step in this derivation follows from the result of exercise 11.)

Exercises

1. [10] What is the answer to Leonardo Fibonacci’s original problem: How many pairs of rabbits are present after a year?

2. [20] In view of Eq. (15), what is the approximate value of $F_{1000}$? (Use logarithms found in Appendix A.)

2. [20] In view of Eq. (15), what is the approximate value of $F_{1000}$? (Use logarithms found in Appendix A.)

3. [25] Write a computer program that calculates and prints $F_{1}$ through $F_{1000}$ in decimal notation. (The previous exercise determines the size of numbers that must be handled.)

4. [14] Find all n for which $F_{n}=n$.

4. [14] Find all n for which $F_{n}=n$.

5. [20] Find all n for which $F_{n}=n^{2}$.

7. [15] If n is not a prime number, $F_{n}$ is not a prime number (with one exception). Prove this and find the exception.

7. [15] If n is not a prime number, $F_{n}$ is not a prime number (with one exception). Prove this and find the exception.

8. [15] In many cases it is convenient to define $F_{n}$ for negative n, by assuming that $F_{n+2}=F_{n+1}+F_{n}$ for all integers n. Explore this possibility: What is $F_{-1}$? What is $F_{-2}$? Can $F_{-n}$ be expressed in a simple way in terms of $F_{n}$?

9. [M20] Using the conventions of exercise 8, determine whether Eqs. (4), (6), (14), and (15) still hold when the subscripts are allowed to be any integers.

10. [15] Is  greater than $F_{n}$ or less than $F_{n}$?

greater than $F_{n}$ or less than $F_{n}$?

11. [M20] Show that $φ^{n}=F_{nφ}+F_{n-1}$ and  , for all integers n.

, for all integers n.

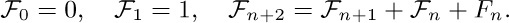

12. [M26] The “second order” Fibonacci sequence is defined by the rule

12. [M26] The “second order” Fibonacci sequence is defined by the rule

Express  in terms of $F_{n}$ and $F_{n+1}$. [Hint: Use generating functions.]

in terms of $F_{n}$ and $F_{n+1}$. [Hint: Use generating functions.]

13. [M22] Express the following sequences in terms of the Fibonacci numbers, when r, s, and c are given constants:

13. [M22] Express the following sequences in terms of the Fibonacci numbers, when r, s, and c are given constants:

a) $a_{0}=r$, $a_{1}=s$; $a_{n+2}=a_{n+1}+a_{n}$, for n ≥ 0.

b) $b_{0}=0$, $b_{1}=1$; $b_{n+2}=b_{n+1}+b_{n}+c$, for n ≥ 0.

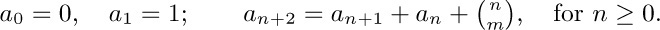

14. [M28] Let m be a fixed positive integer. Find $a_{n}$, given that

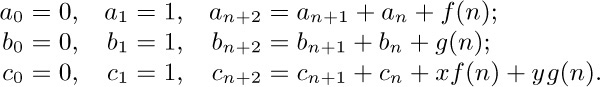

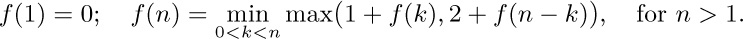

15. [M22] Let $f(n)$ and $g(n)$ be arbitrary functions, and for n ≥ 0 let

Express $c_{n}$ in terms of x, y, $a_{n}$, $b_{n}$, and $F_{n}$

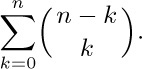

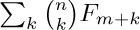

16. [M20] Fibonacci numbers appear implicitly in Pascal’s triangle if it is viewed from the right angle. Show that the following sum of binomial coefficients is a Fibonacci number:

16. [M20] Fibonacci numbers appear implicitly in Pascal’s triangle if it is viewed from the right angle. Show that the following sum of binomial coefficients is a Fibonacci number:

17. [M24] Using the conventions of exercise 8, prove the following generalization of Eq. (4): $F_{n+k}F_{m-k}-F_{n}F_{m}=(-1)^{n}F_{m-n-k}F_{k}$.

18. [20] Is  always a Fibonacci number?

always a Fibonacci number?

19. [M27] What is $\cos 36^{°}$?

19. [M27] What is $\cos 36^{°}$?

20. [M16] Express  in terms of Fibonacci numbers.

in terms of Fibonacci numbers.

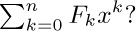

21. [M25] What is

22. [M20] Show that

22. [M20] Show that  is a Fibonacci number.

is a Fibonacci number.

23. [M23] Generalizing the preceding exercise, show that  is always a Fibonacci number.

is always a Fibonacci number.

24. [HM20] Evaluate the $n × n$ determinant

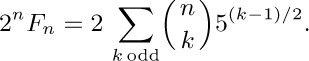

25. [M21] Show that

26. [M20] Using the previous exercise, show that $F_{p}≡5^{(p-1)/2} (modulo p)$ if p is an odd prime.

26. [M20] Using the previous exercise, show that $F_{p}≡5^{(p-1)/2} (modulo p)$ if p is an odd prime.

27. [M20] Using the previous exercise, show that if p is a prime different from 5, then either $F_{p-1}$ or $F_{p+1}$ (not both) is a multiple of p.

28. [M21] What is $F_{n+1}-φF_{n}$?

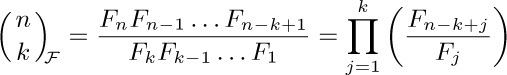

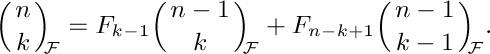

29. [M23] (Fibonomial coefficients.) Édouard Lucas defined the quantities

29. [M23] (Fibonomial coefficients.) Édouard Lucas defined the quantities

in a manner analogous to binomial coefficients. (a) Make a table of  for 0 ≤ k ≤ n ≤ 6. (b) Show that

for 0 ≤ k ≤ n ≤ 6. (b) Show that  is always an integer because we have

is always an integer because we have

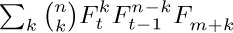

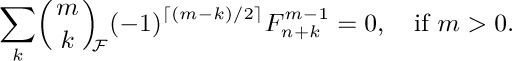

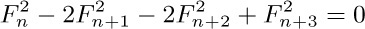

30. [M38] (D. Jarden, T. Motzkin.) The sequence of mth powers of Fibonacci numbers satisfies a recurrence relation in which each term depends on the preceding m + 1 terms. Show that

30. [M38] (D. Jarden, T. Motzkin.) The sequence of mth powers of Fibonacci numbers satisfies a recurrence relation in which each term depends on the preceding m + 1 terms. Show that

For example, when m = 3 we get the identity  .

.

31. [M20] Show that $F_{2n}φ\bmod 1=1-φ^{-2n}$ and $F_{2n+1}φ\bmod 1=φ^{-2n-1}$.

32. [M24] The remainder of one Fibonacci number divided by another is ± a Fibonacci number: Show that, modulo $F_{n}$,

33. [HM24] Given that $z=π/2+i\ln φ$, show that $\sin nz/\sin z=i^{1-n}F_{n}$.

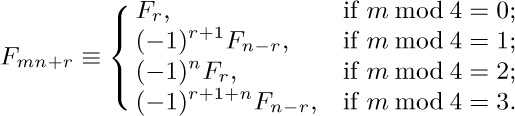

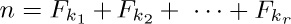

34. [M24] (The Fibonacci number system.) Let the notation k ≫ m mean that $k ≥ m+2$. Show that every positive integer n has a unique representation

34. [M24] (The Fibonacci number system.) Let the notation k ≫ m mean that $k ≥ m+2$. Show that every positive integer n has a unique representation  where $k_{1}≫ k_{2}≫···≫ k_{r}≫ 0$.

where $k_{1}≫ k_{2}≫···≫ k_{r}≫ 0$.

35. [M24] (A phi number system.) Consider real numbers written with the digits 0 and 1 using base φ; thus $(100.1)_{φ}=φ^{2}+φ^{-1}$. Show that there are infinitely many ways to represent the number 1; for example, $1=(.11)_{φ}=(.011111...)_{φ}$. But if we require that no two adjacent 1s occur and that the representation does not end with the infinite sequence 01010101..., then every nonnegative number has a unique representation. What are the representations of integers?

36. [M32] (Fibonacci strings.) Let $S_{1}=“a”$, $S_{2}=“b”$, and $S_{n+2}=S_{n+1}S_{n}$, n > 0; in other words, $S_{n+2}$ is formed by placing $S_{n}$ at the right of $S_{n+1}$. We have $S_{3}=“ba”$, $S_{4}=“bab”$, $S_{5}=“babba”$, etc. Clearly $S_{n}$ has $F_{n}$ letters. Explore the properties of $S_{n}$. (Where do double letters occur? Can you predict the value of the kth letter of $S_{n}$? What is the density of the b’s? And so on.)

36. [M32] (Fibonacci strings.) Let $S_{1}=“a”$, $S_{2}=“b”$, and $S_{n+2}=S_{n+1}S_{n}$, n > 0; in other words, $S_{n+2}$ is formed by placing $S_{n}$ at the right of $S_{n+1}$. We have $S_{3}=“ba”$, $S_{4}=“bab”$, $S_{5}=“babba”$, etc. Clearly $S_{n}$ has $F_{n}$ letters. Explore the properties of $S_{n}$. (Where do double letters occur? Can you predict the value of the kth letter of $S_{n}$? What is the density of the b’s? And so on.)

37. [M35] (R. E. Gaskell, M. J. Whinihan.) Two players compete in the following game: There is a pile containing n chips; the first player removes any number of chips except that he cannot take the whole pile. From then on, the players alternate moves, each person removing one or more chips but not more than twice as many chips as the preceding player has taken. The player who removes the last chip wins. (For example, suppose that n = 11; player A removes 3 chips; player B may remove up to 6 chips, and he takes 1. There remain 7 chips; player A may take 1 or 2 chips, and he takes 2; player B may remove up to 4, and he picks up 1. There remain 4 chips; player A now takes 1; player B must take at least one chip and player A wins in the following turn.)

37. [M35] (R. E. Gaskell, M. J. Whinihan.) Two players compete in the following game: There is a pile containing n chips; the first player removes any number of chips except that he cannot take the whole pile. From then on, the players alternate moves, each person removing one or more chips but not more than twice as many chips as the preceding player has taken. The player who removes the last chip wins. (For example, suppose that n = 11; player A removes 3 chips; player B may remove up to 6 chips, and he takes 1. There remain 7 chips; player A may take 1 or 2 chips, and he takes 2; player B may remove up to 4, and he picks up 1. There remain 4 chips; player A now takes 1; player B must take at least one chip and player A wins in the following turn.)

What is the best move for the first player to make if there are initially 1000 chips?

38. [35] Write a computer program that plays the game described in the previous exercise and that plays optimally.

39. [M24] Find a closed form expression for $a_{n}$, given that $a_{0}=0$, $a_{1}=1$, and $a_{n+2}=a_{n+1}+6a_{n}$ for n ≥ 0.

40. [M25] Solve the recurrence

41. [M25] (Yuri Matiyasevich, 1990.) Let $f(x)=\lfloor{x+φ^{-1}}\rfloor$. Prove that if $n=F_{k_{1}}+···+F_{k_{r}}$ is the representation of n in the Fibonacci number system of exercise 34, then $Fk_{1}+1+···+F_{k_{r} +1}=f(φn)$. Find a similar formula for $Fk_{1}-1+···+F_{k_{r}-1}$.

41. [M25] (Yuri Matiyasevich, 1990.) Let $f(x)=\lfloor{x+φ^{-1}}\rfloor$. Prove that if $n=F_{k_{1}}+···+F_{k_{r}}$ is the representation of n in the Fibonacci number system of exercise 34, then $Fk_{1}+1+···+F_{k_{r} +1}=f(φn)$. Find a similar formula for $Fk_{1}-1+···+F_{k_{r}-1}$.

42. [M26] (D. A. Klarner.) Show that if m and n are nonnegative integers, there is a unique sequence of indices $k_{1}≫ k_{2}≫···≫ k_{r}$ such that

$m=F_{k_{1}}+F_{k_{2}}+···+F_{k_{r}}$, $n=F_{k_{1}+1}+F_{k_{2}+1}+···+F_{k_{r}+1}$.

(See exercise 34. The k’s may be negative, and r may be zero.)

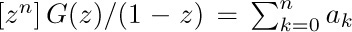

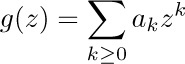

Whenever we want to obtain information about a sequence of numbers $\langle{a_{n}}\rangle=a_{0}, a_{1}, a_{2},...$, we can set up an infinite sum in terms of a “parameter” z

We can then try to obtain information about the function G. This function is a single quantity that represents the whole sequence; if the sequence $\langle{a_{n}}\rangle$ has been defined inductively (that is, if $a_{n}$ has been defined in terms of $a_{0}, a_{1},..., a_{n-1}$) this is an important advantage. Furthermore, we can recover the individual values of a_{0}, a_{1}, ... from the function $G(z)$, assuming that the infinite sum in Eq. (1) exists for some nonzero value of z, by using techniques of differential calculus.

We call $G(z)$ the generating function for the sequence $a_{0}, a_{1}, a_{2},...$. The use of generating functions opens up a whole new range of techniques, and it broadly increases our capacity for problem solving. As mentioned in the previous section, A. de Moivre introduced generating functions in order to solve the general linear recurrence problem. De Moivre’s theory was extended to slightly more complicated recurrences by James Stirling, who showed how to apply differentiation and integration as well as arithmetic operations [Methodus Differentialis (London: 1730), Proposition 15]. A few years later, L. Euler began to use generating functions in several new ways, for example in his papers on partitions [Commentarii Acad. Sci. Pet. 13 (1741), 64–93; Novi Comment. Acad. Sci. Pet. 3 (1750), 125–169]. Pierre S. Laplace developed the techniques further in his classic work Théorie Analytique des Probabilités (Paris: 1812).

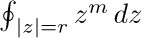

The question of convergence of the infinite sum (1) is of some importance. Any textbook about the theory of infinite series will prove that:

a) If the series converges for a particular value of z = z_{0}, then it converges for all values of z with $|z|\lt |z_{0}|$.